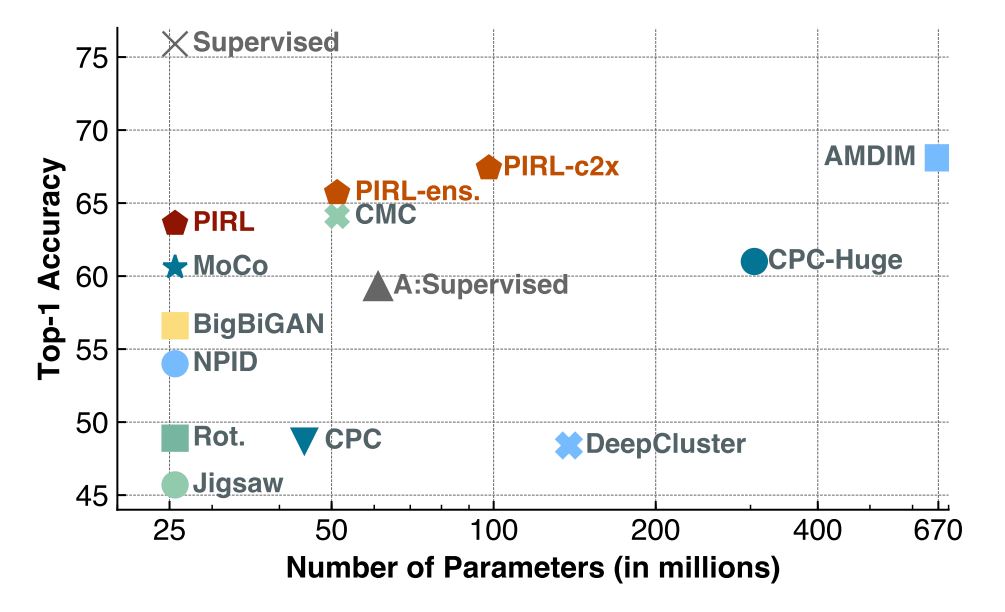

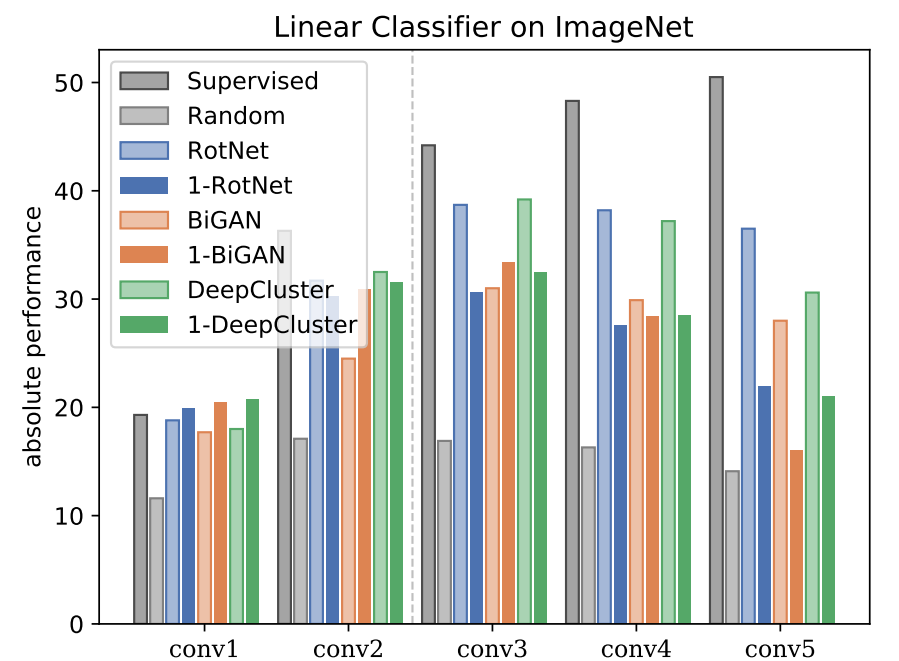

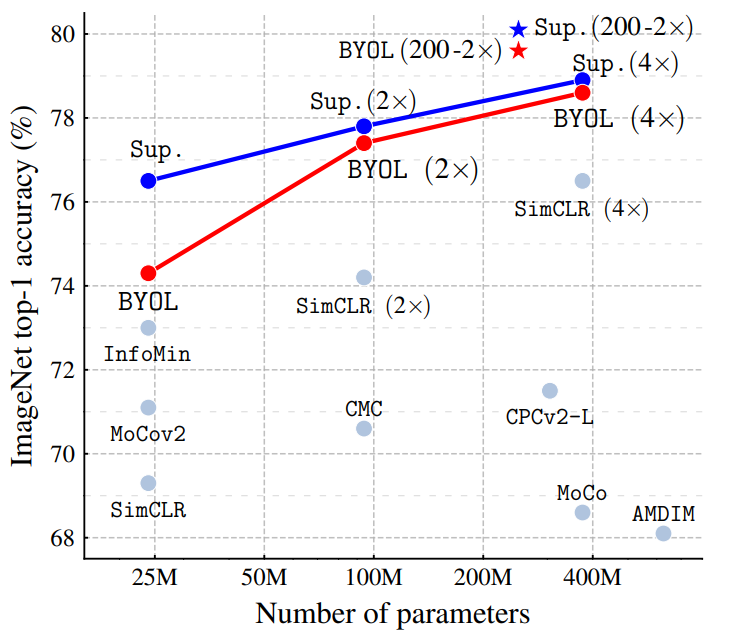

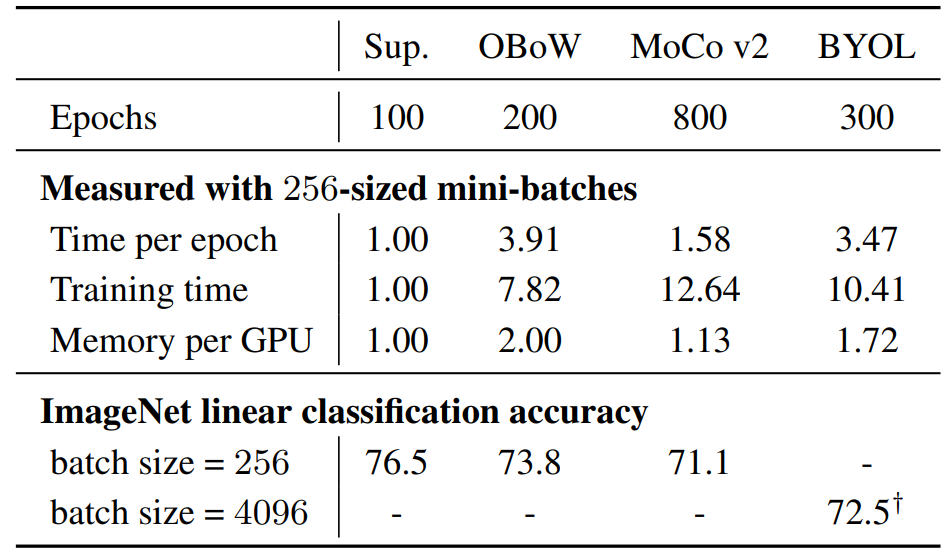

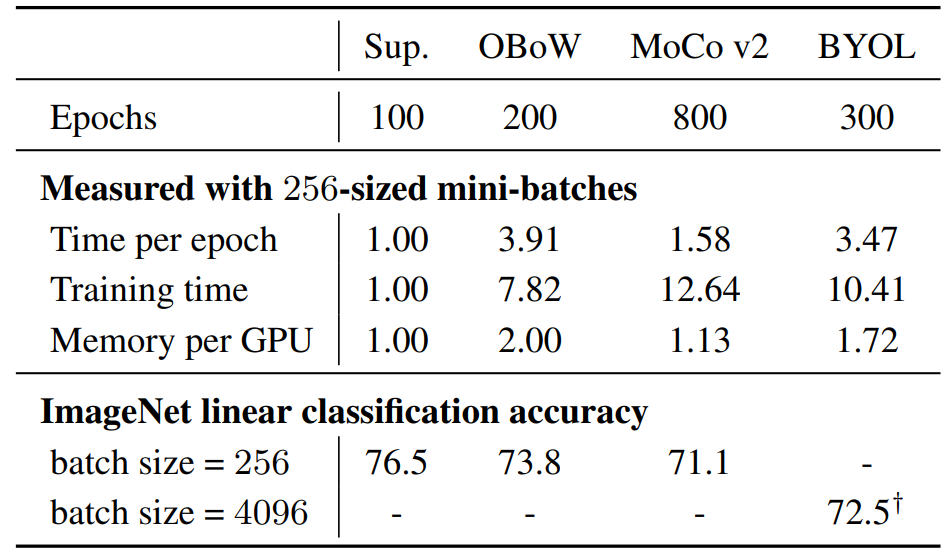

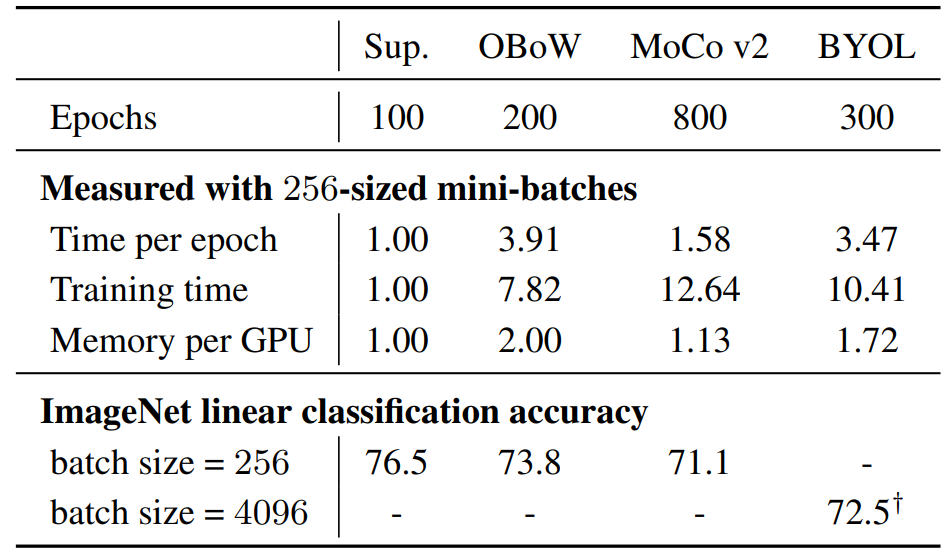

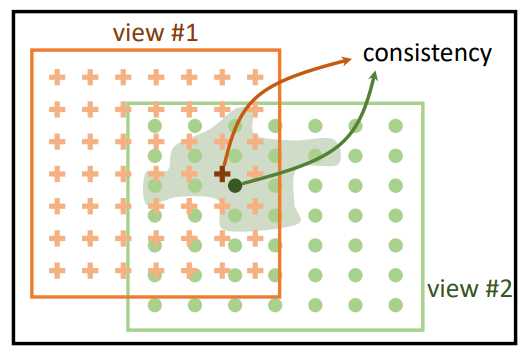

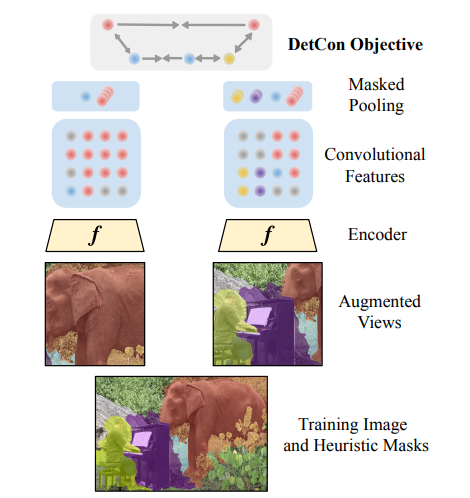

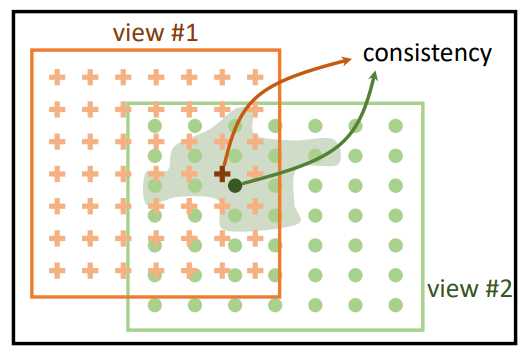

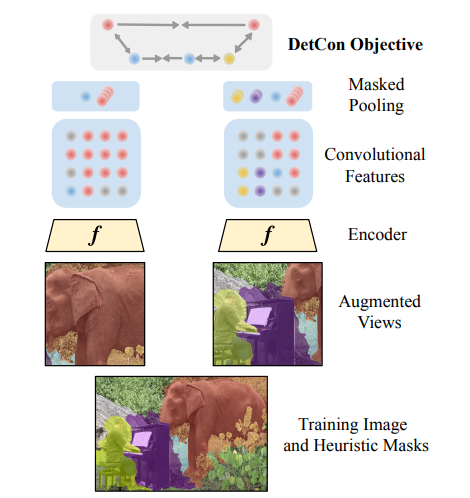

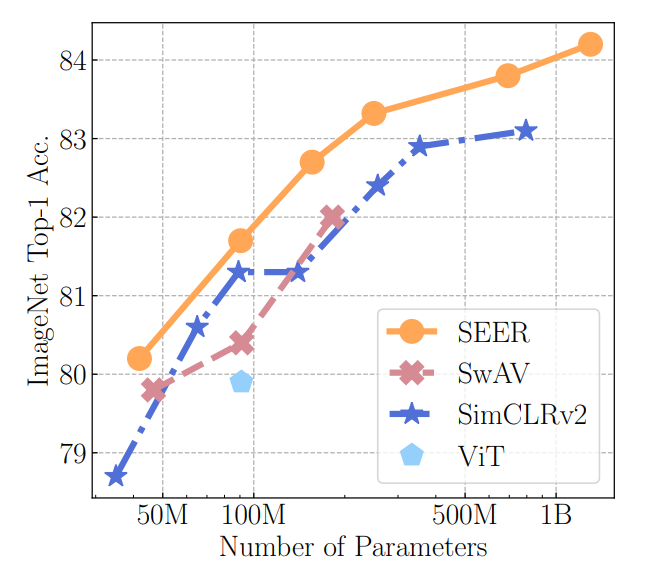

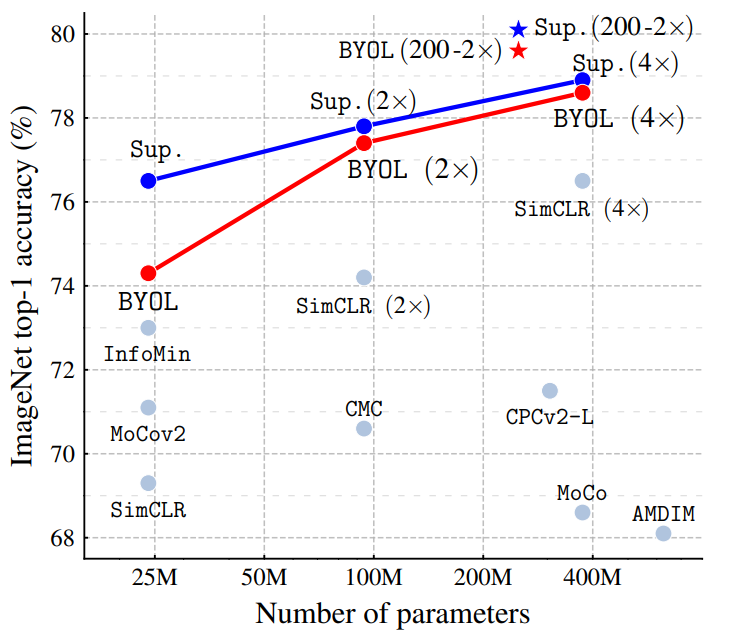

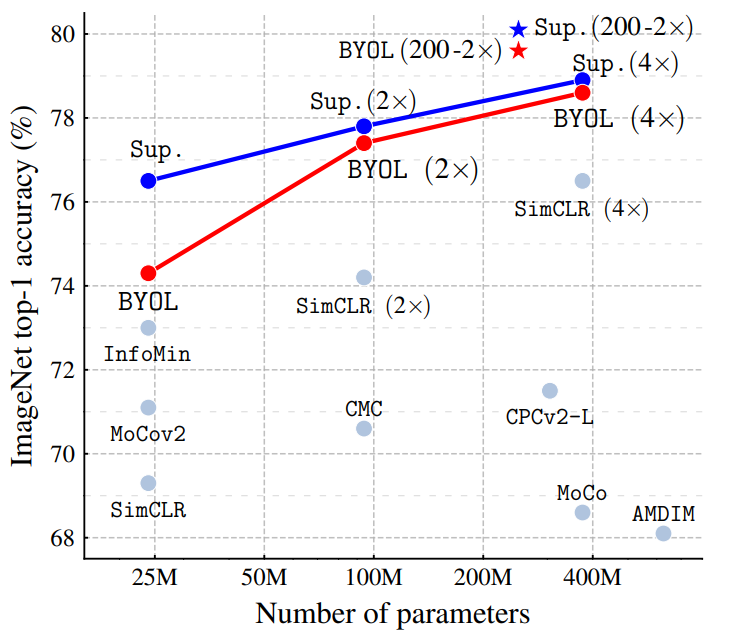

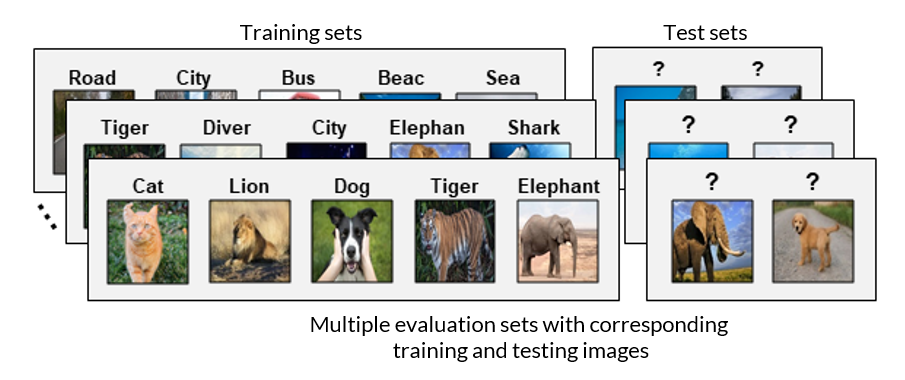

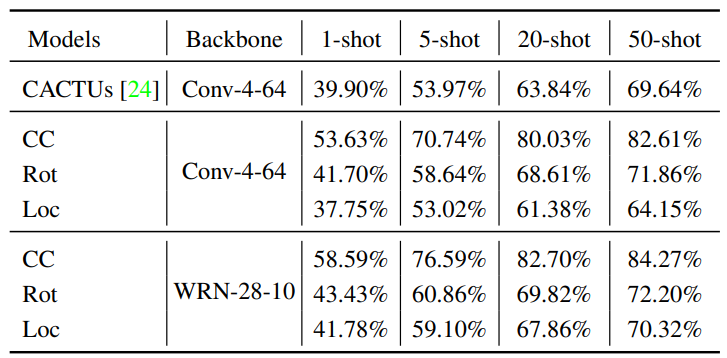

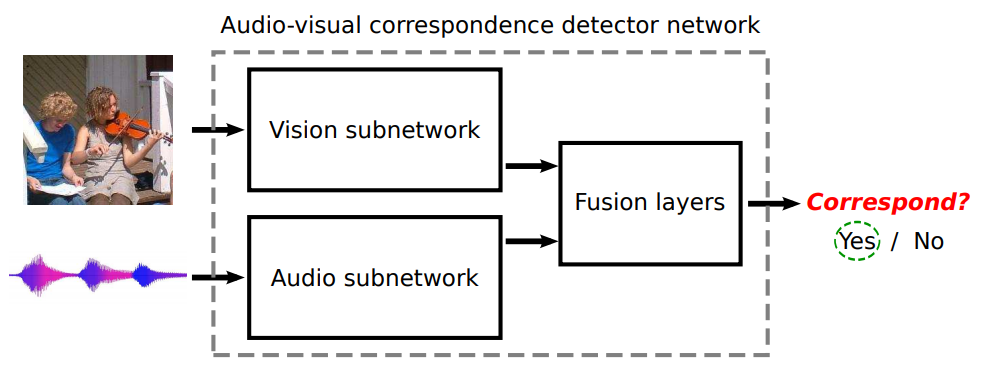

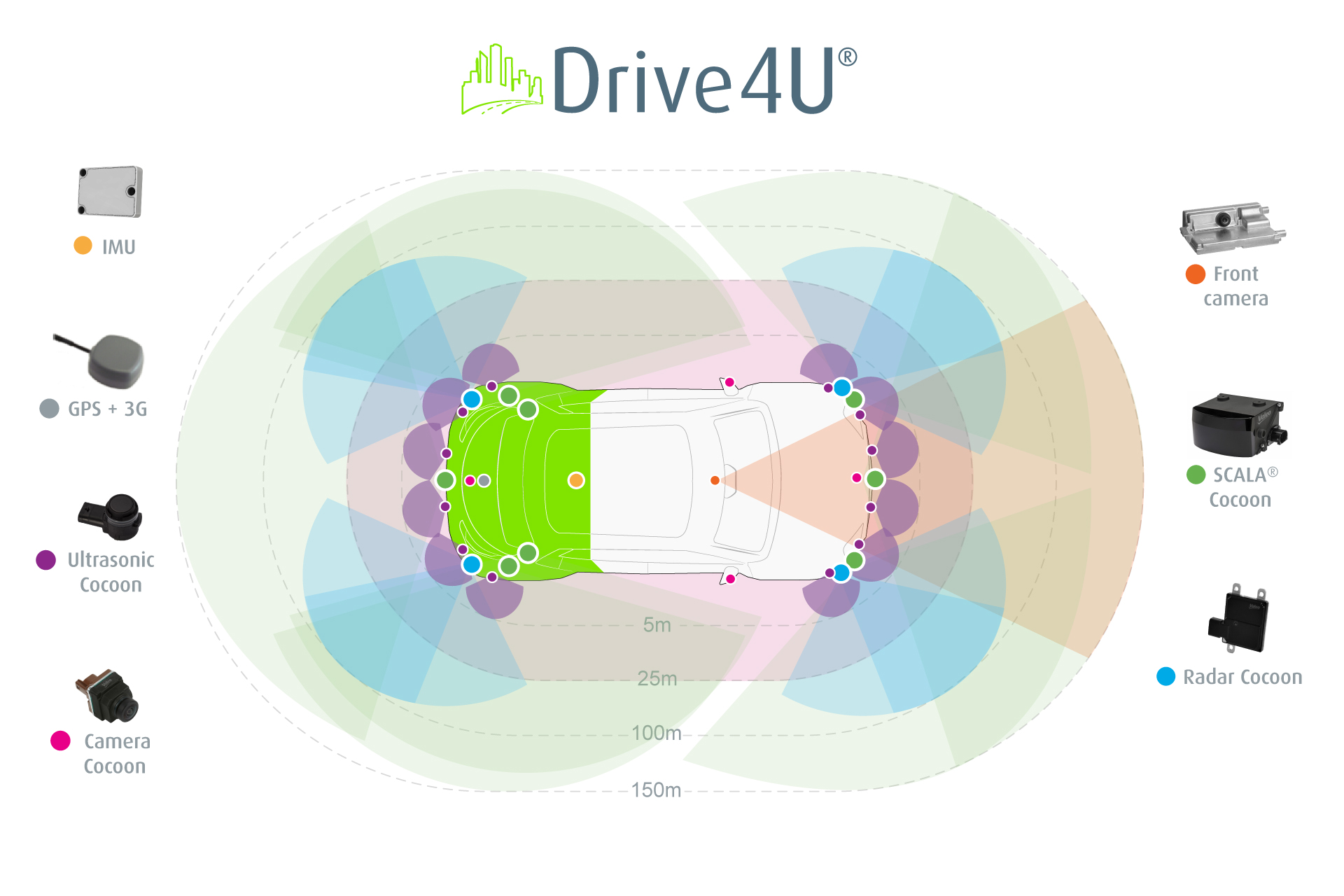

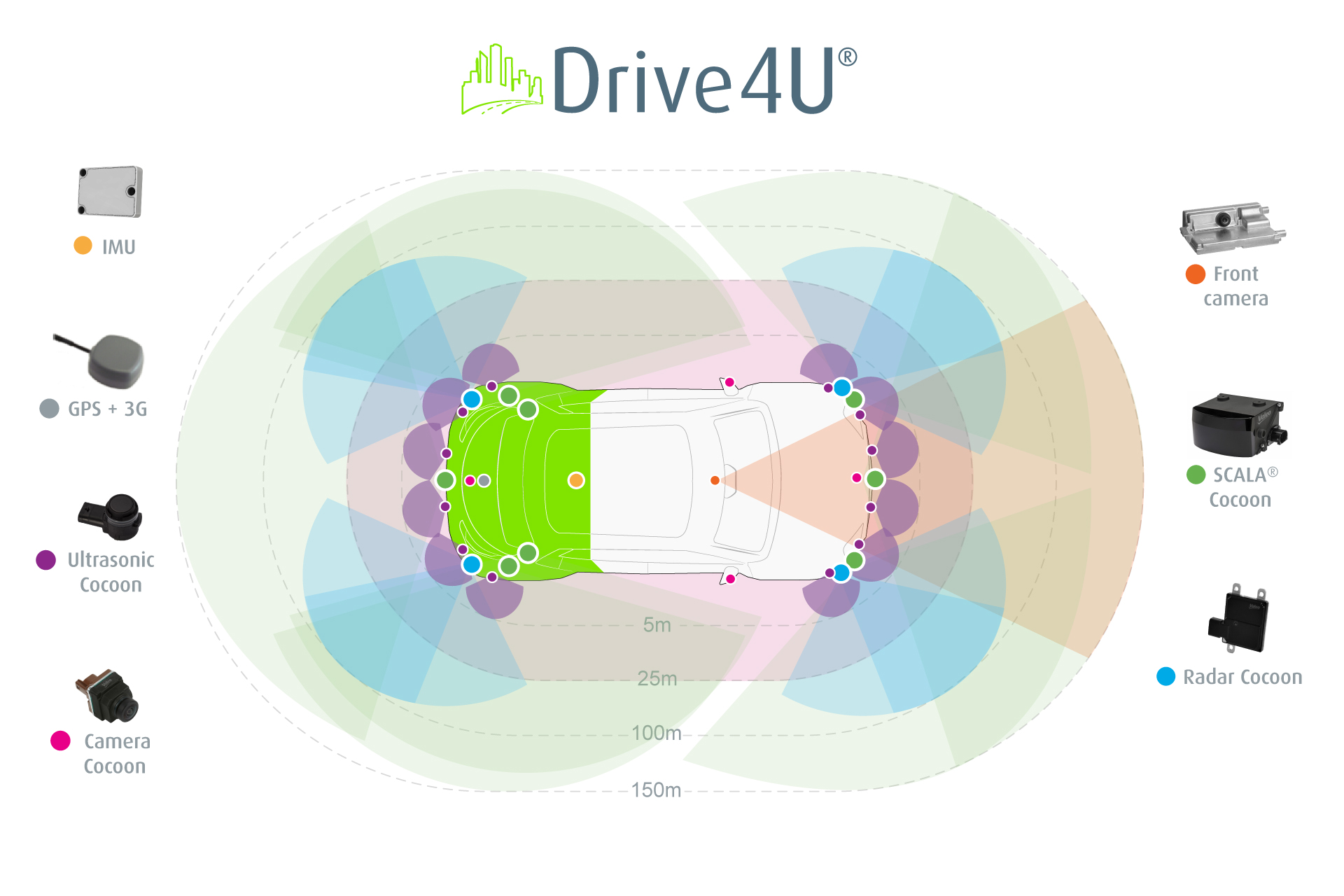

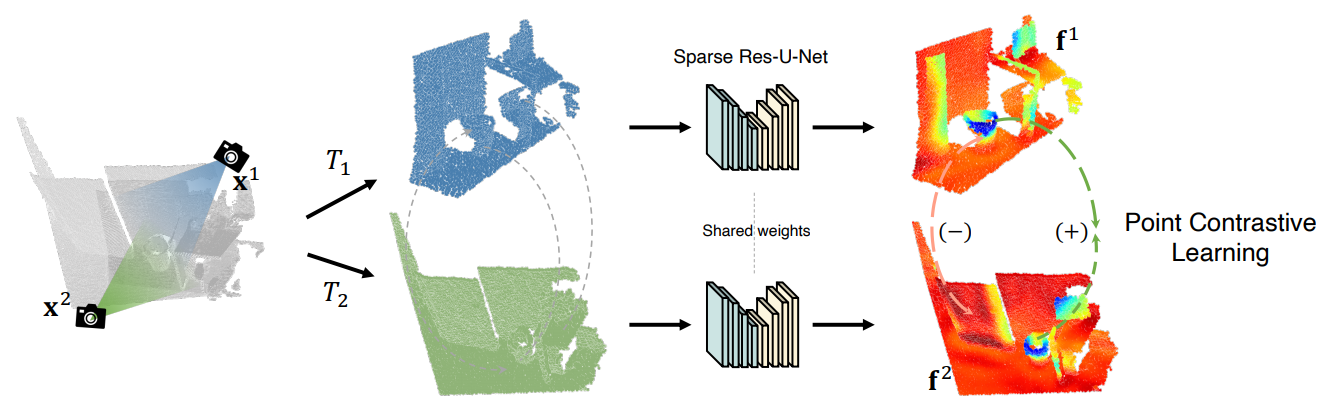

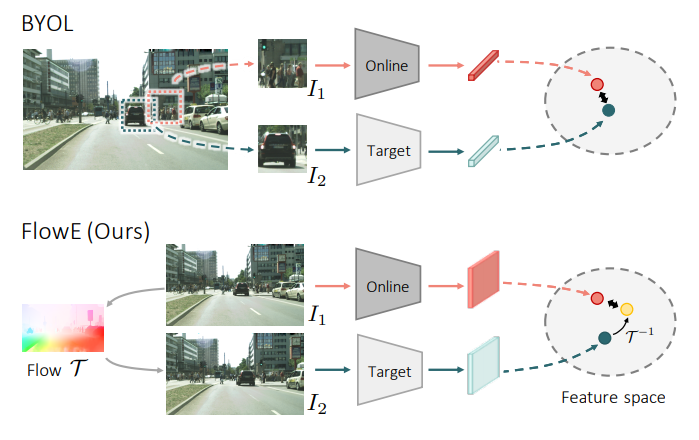

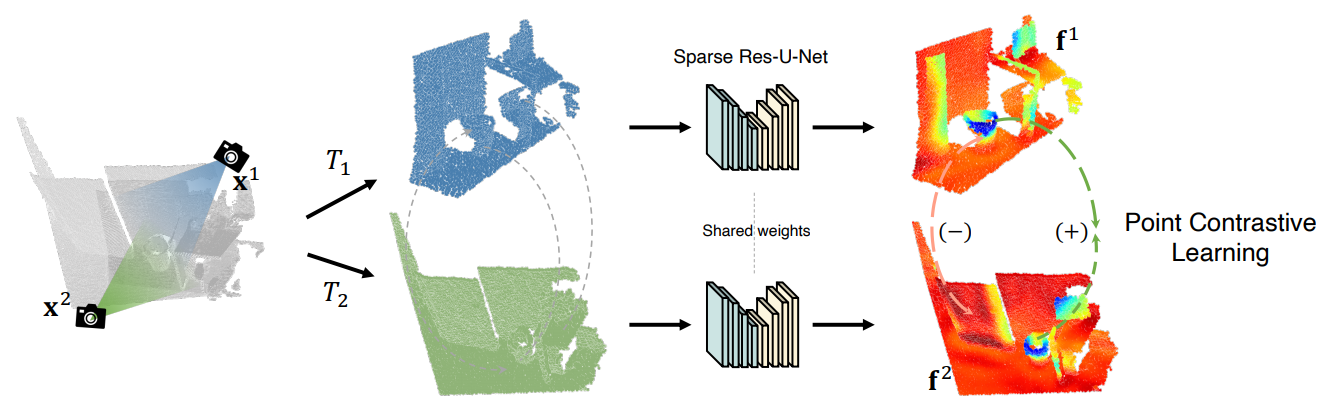

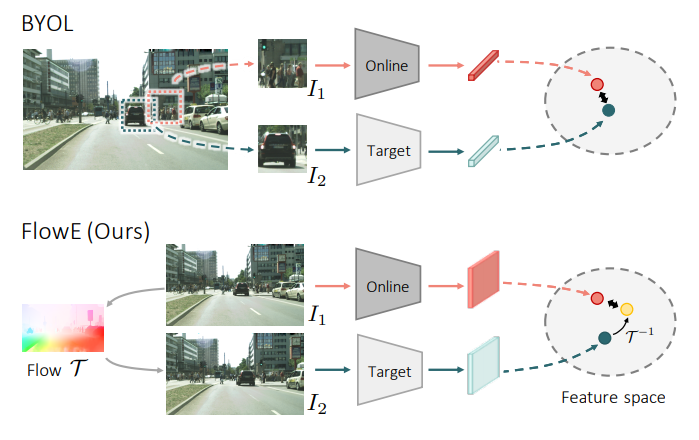

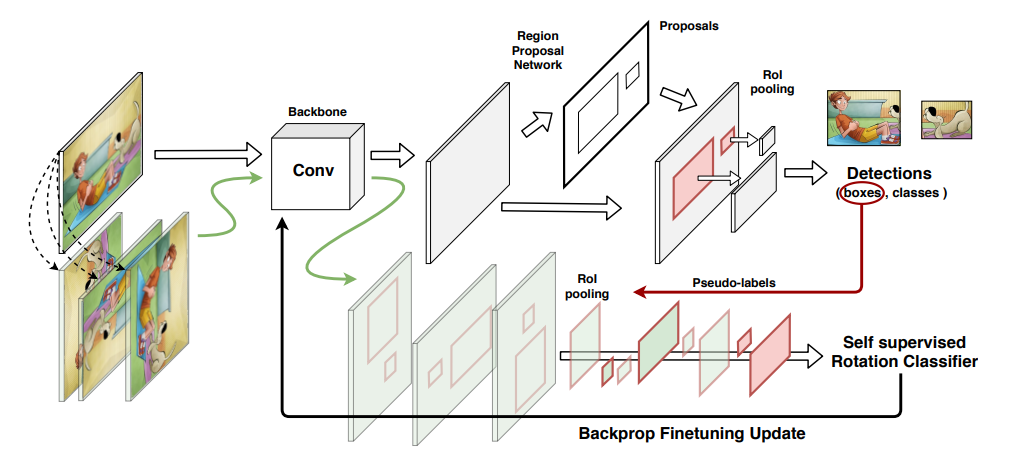

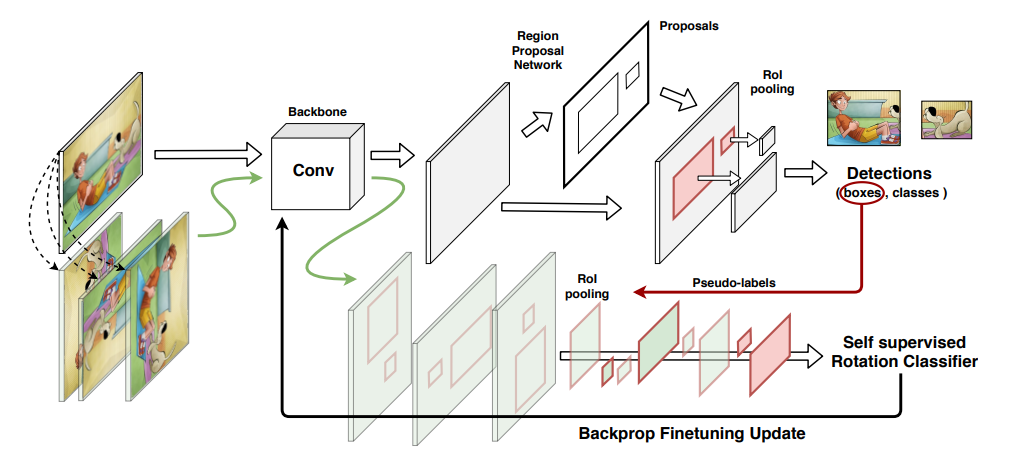

layout: true <div class="header-logo"><img src="images/logos/cvpr21.jpg" /></div> .center.footer[Spyros GIDARIS and Andrei BURSUC | Advances in Self-Supervised Learning: What is next?] --- class: center, middle, title-slide count: false ## CVPR 2020 Tutorial ## .bold[Leave Those Nets Alone: <br> Advances in Self-Supervised Learning] # What is next? <br> .grid[ .kol-3-12[ .bold[Spyros Gidaris] ] .kol-3-12[ .bold[Andrei Bursuc] ] .kol-3-12[ .bold[Jean-Baptiste Alayrac] ] .kol-3-12[ .bold[Adrià Recasens] ] ] .grid[ .kol-3-12[ .bold[Mathilde Caron] ] .kol-3-12[ .bold[Olivier Hénaff] ] .kol-3-12[ .bold[Aäron van den Oord] ] .kol-3-12[ .bold[Relja Arandjelović] ] ] .foot[https://gidariss.github.io/self-supervised-learning-cvpr2021/] --- class: middle .center.width-60[] The first generation of self-supervised approaches ($\texttt{RotNet}$, $\texttt{RelPatch}$, $\texttt{JigSaw}$, $\texttt{Colorization}$, $\texttt{Exemplar}$, $\texttt{DeepCluster}$,) explored a new paradigm and achieved interesting results. --- class: middle .center.width-60[] .caption[ImageNet classification with linear models] However performance is still far from the performance of their supervised counterparts. .citation[I. Misra and L. van der Maaten, Self-Supervised Learning of Pretext-Invariant Representations, CVPR 2020] --- class: middle .bigger[.citet[Asano et al. (2020)] show that **as little as a single image is sufficient**, when combined with self-supervision and data augmentation, to learn the first few layers of standard deep networks as well as using millions of images and full supervision.] .citation[Y.M. Asano, A critical analysis of self-supervision, or what we can learn from a single image, ICLR 2020] --- class: middle .grid[ .kol-6-12[ .center[1 - Take a high-resolution image] <br/> .center.width-80[] ] .hidden[ .kol-6-12[ .center[2 - Generate 1M images of crops and augmentations] <br/> .center.width-80[] ] ] ] .citation[Y.M. Asano, A critical analysis of self-supervision, or what we can learn from a single image, ICLR 2020] --- count: false class: middle .grid[ .kol-6-12[ .center[1 - Take a high-resolution image] <br/> .center.width-80[] ] .kol-6-12[ .center[2 - Generate 1M images of crops and augmentations] <br/> .center.width-80[] ] ] .citation[Y.M. Asano, A critical analysis of self-supervision, or what we can learn from a single image, ICLR 2020] --- class: middle .grid[ .kol-6-12[ .center.width-100[] .caption[Accuracies of linear classifiers trained on the representations from intermediate layers of supervised and self-supervised network ] ] .kol-6-12[ <br/><br/><br/> - With sufficient data augmentation, one image allows self-supervision to learn good and generalizable features - At deeper layers there is a gap with supervised methods, that is mitigated to some extent by large datasets for self-supervised methods ] ] .citation[Y.M. Asano, A critical analysis of self-supervision, or what we can learn from a single image, ICLR 2020] --- class: middle, center .center.width-80[] The recent line of approaches ($\texttt{contrastive}$, $\texttt{feature reconstruction}$, $\texttt{clustering}$, $\texttt{multi-modal supervision}$) achieved remarkable results outperforming supervised variants on several benchmarks. --- class: middle .grid[ .kol-6-12[ .center.width-90[] .caption[ImageNet Top-1 accuracy of linear classifiers trained on representations learned with different self-supervised methods (pretrained on ImageNet). ] ] .kol-6-12[ <br> - The performance on this benchmark has accelerated strongly in the past year closing the gap w.r.t. supervised methods - The main contributors: - *contrastive learning with more negatives* - *feature reconstruction* - *momentum update* - *output projection head* - *better designed and stronger data augmentation* - *longer training* ] ] .citation[J.B. Grill et al., Bootstrap your own latent: A new approach to self-supervised Learning, NeurIPS 2020] --- class: middle, center .center.width-80[] What should we expect from the next generation to solve? --- class: middle Although they achieve outstanding performance, contrastive methods require long training and complex setups, e.g., TPUs, large mini-batches. .hidden[Recent works have showed that reconstruction methods can achieve competitive performance by reconstructing features instead of inputs.] .hidden.center.width-40[] .hidden.caption[Time and memory consumption relative to supervised training.] .hidden.citation[S. Gidaris et al., Learning Representations by Predicting Bags of Visual Words, CVPR 2020 <br/> J. B. Grill et al., Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning, NeurIPS 2020 <br/> M. Caron et al., Unsupervised Learning of Visual Features by Contrasting Cluster Assignments, NeurIPS 2020 <br/> S. Gidaris et al., Online Bag-of-Visual-Words Generation for Unsupervised Representation Learning, CVPR 2021] --- count: false class: middle Although they achieve outstanding performance, contrastive methods require long training and complex setups, e.g., TPUs, large mini-batches. Recent works have showed that reconstruction methods can achieve competitive performance by reconstructing features instead of inputs. .center.width-40[] .caption[Time and memory consumption relative to supervised training.] .citation[S. Gidaris et al., Learning Representations by Predicting Bags of Visual Words, CVPR 2020 <br/> J. B. Grill et al., Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning, NeurIPS 2020 <br/> M. Caron et al., Unsupervised Learning of Visual Features by Contrasting Cluster Assignments, NeurIPS 2020 <br/> S. Gidaris et al., Online Bag-of-Visual-Words Generation for Unsupervised Representation Learning, CVPR 2021 ] --- class: middle, center .center.width-40[] .caption[Time and memory consumption relative to supervised training.] .center.Q[How to improve data and compute efficiency?] --- class: middle .grid[ .kol-7-12[ .center.width-100[] ] .kol-5-12[ <br/><br/> - With few exceptions most self-supervised methods deal with ImageNet-like data with one dominant object per image. - In the case of autonomous driving data with HD images and large complex scenes, these strategies might be cumbersome to apply. ] ] .hidden.center.Q[How to go beyond single object images?] .citation[H. Caesar et al., nuScenes: A multimodal dataset for autonomous driving, CVPR 2020 ] --- count: false class: middle .grid[ .kol-7-12[ .center.width-100[] ] .kol-5-12[ <br/><br/> - With few exceptions most self-supervised methods deal with ImageNet-like data with one dominant object per image. - In the case of autonomous driving data with HD images and large complex scenes, these strategies might be cumbersome to apply. ] ] .center.Q[How to go beyond single object images?] .citation[H. Caesar et al., nuScenes: A multimodal dataset for autonomous driving, CVPR 2020 ] --- class: middle ## .center[Targetting object detection in the self-supervised task] .grid[ .kol-6-12[ <br/> <br/> .center.width-70[] .caption[ PixPro is based on a pixel-to-propagation consistency pretext task for pixellevel visual representation learning.] ] .kol-6-12[ .hidden.center.width-70[] .hidden.caption[DetCon: The contrastive detection objective pulls together pooled feature vectors from the same mask (across views) and pushes apart features from different masks and different images.] ] ] .citation[Z. Xie et al.,Propagate Yourself: Exploring Pixel-Level Consistency for Unsupervised Visual Representation Learning, CVPR 2021 <br/> .hidden[O. Henaff et al., Efficient Visual Pretraining with Contrastive Detection, arXiv 2021]] --- count: false class: middle ## .center[Targetting object detection in the self-supervised task] .grid[ .kol-6-12[ <br/> <br/> .center.width-70[] .caption[ PixPro is based on a pixel-to-propagation consistency pretext task for pixellevel visual representation learning.] ] .kol-6-12[ .center.width-70[] .caption[ DetCon: The contrastive detection objective then pulls together pooled feature vectors from the same mask (across views) and pushes apart features from different masks and different images.] ] ] .citation[Z. Xie et al.,Propagate Yourself: Exploring Pixel-Level Consistency for Unsupervised Visual Representation Learning, CVPR 2021 <br/> O. Henaff et al., Efficient Visual Pretraining with Contrastive Detection, arXiv 2021] --- class: middle .bigger[Most self-supervised methods are pre-trained on ImageNet, which is a *curated* and *perfectly balanced* dataset.] .hidden.bigger[In self-supervised learning **the dataset itself is a form of supervision**.] .hidden[ <br><br> .center.Q[Shifting towards uncurated and "boring" data and better understand its impact.] ] --- count: false class: middle .bigger[Most self-supervised methods are pre-trained on ImageNet, which is a *curated* and *perfectly balanced* dataset.] .bigger[In self-supervised learning **the dataset itself is a form of supervision**.] .hidden[ <br><br> .center.Q[Shifting towards uncurated and "boring" data and better understand its impact.] ] --- count: false class: middle .bigger[Most self-supervised methods are pre-trained on ImageNet, which is a *curated* and *perfectly balanced* dataset.] .bigger[In self-supervised learning **the dataset itself is a form of supervision**.] <br><br> .center.Q[Shifting towards uncurated and "boring" data and better understand its impact.] --- class: middle ## .center[Self-supervision pre-training on Instagram images] .center.width-40[] .caption[SEER is pre-trained on uncurated random images from Instagram] .citation[P.Goyal et al., Self-supervised Pretraining of Visual Features in the Wild, arXiv 2021] --- class: middle .grid[ .kol-6-12[ .center.width-90[] ] .kol-6-12[ Most approaches compete on a few popular benchmarks (`ImageNet`, `Places205`, `VOC07+12`, `COCO14`): - `ImageNet` is also used for pre-training - finetuning on some downstream tasks can still be overly long, e.g., object detection - the amount of datasets to evaluate on is still limited $\rightarrow$ risk of optimizing for specific datasets ] ] .hidden.center.Q[Finding better and more compelling evaluation strategies.] .citation[J.B. Grill et al., Bootstrap your own latent: A new approach to self-supervised Learning, NeurIPS 2020] --- count: false class: middle .grid[ .kol-6-12[ .center.width-90[] ] .kol-6-12[ Most approaches compete on a few popular benchmarks (`ImageNet`, `Places205`, `VOC07+12`, `COCO14`): - `ImageNet` is also used for pre-training - finetuning on some downstream tasks can still be overly long, e.g., object detection - the amount of datasets to evaluate on is still limited $\rightarrow$ risk of optimizing for specific datasets ] ] .center.Q[Finding better and more compelling evaluation strategies.] .citation[J.B. Grill et al., Bootstrap your own latent: A new approach to self-supervised Learning, NeurIPS 2020] --- class: middle ## .center[Evaluating self-supervised methods with few-shot protocols] .grid[ .kol-8-12[ .center.width-100[] ] .kol-4-12[ <br> .center.width-100[] ] ] .reset-columns[] Assess the quality of self-supervised representations through few-shot object recognition: .smaller[ - large pool of train-test sets ($2k$) - low-cost evaluation - can be easily extended to other datasets ] .citation[S. Gidaris et al., Boosting Few-Shot Visual Learning With Self-Supervision, ICCV 2019] --- class: middle .bigger[A few other works have recently proposed a variety of datasets and tasks to evaluate on (see below).] .citation[ X. Zhai et al., A Large-scale Study of Representation Learning with the Visual Task Adaptation Benchmark, arXiv 2019 <br/> A. Newell and J. Deng, How Useful is Self-Supervised Pretraining for Visual Tasks?, CVPR 2020 <br/> B. Wallace and B. Hariharan, Extending and Analyzing Self-Supervised Learning Across Domains, ECCV 2020 <br/> L. Ericsson et al., How Well Do Self-Supervised Models Transfer?, CVPR 2021 <br/> G.V. Horn et al.,Benchmarking Representation Learning for Natural World Image Collections, CVPR 2021] --- class: middle .center.width-70[] .center[Traditionally, multi-modal self-supervised methods rely on mode supervision and process each modality individually] .citation[R. Arandjelovic and A. Zisserman, Look, listen and learn, ICCV 2017] --- class: middle .center.width-60[] .center[However, most robots rely on a range of complementary sensors to understand their enviroment] .hidden.center.Q[How to leverage better information from different sensors and their interplay, in particular for robotics?] --- class: middle .center.width-60[] .center[However, most robots rely on a range of complementary sensors to understand their enviroment] .center.Q[How to leverage better information from different sensors and their interplay, in particular for robotics?] --- class: middle ## .center[Self-supervision for other sensors and modalities] .grid[ .kol-7-12[ <br/> <br/> .center.width-100[] .caption[PointContrast: recognize a point across views] ] .kol-5-12[ .hidden.center.width-90[] .hidden.caption[FlowE: Encourage features to obey the same transformation as the input image pairs.] ] ] .citation[S. Xie et al.,PointContrast: Unsupervised Pre-training for 3D Point Cloud Understanding, ECCV 2020 <br/> .hidden[Y. Xiong et al., Self-Supervised Representation Learning from Flow Equivariance, arXiv 2021]] --- count: false class: middle ## .center[Self-supervision for other sensors and modalities] .grid[ .kol-7-12[ <br/> <br/> .center.width-100[] .caption[PointContrast: recognize a point across views] ] .kol-5-12[ .center.width-90[] .caption[FlowE: Encourage features to obey the same transformation as the input image pairs.] ] ] .citation[S. Xie et al.,PointContrast: Unsupervised Pre-training for 3D Point Cloud Understanding, ECCV 2020 <br/> Y. Xiong et al., Self-Supervised Representation Learning from Flow Equivariance, arXiv 2021] --- class: middle, center .bigger[In spite of the impressive progress in the past few years, the most common usage of self-supervised methods is still just for pre-training.] .hidden.center.Q[Finding new applications of self-supervised learning.] --- count: false class: middle, center .bigger[In spite of the impressive progress in the past few years, the most common usage of self-supervised methods is still just for pre-training.] .center.Q[Finding new applications of self-supervised learning.] --- class: middle ## .center[Cross-domain detection with test-time training] .center.width-55[] .caption[Using self-supervision and self-training for unsupervised domain adaptation over a single (test) image] .hidden[ .center.width-50[] .caption[The Social Bikes concept-dataset acquired from different social networks] ] .citation[A. d'Innocente et al., One-Shot Unsupervised Cross-Domain Detection, ECCV 2020 <br> Y. Sun et al., Test-Time Training with Self-Supervision for Generalization under Distribution Shifts, ICML 2020] --- count: false class: middle ## .center[Cross-domain detection with test-time training] .center.width-55[] .caption[Using self-supervision and self-training for unsupervised domain adaptation over a single (test) image] .center.width-50[] .caption[Using self-supervision and self-training for unsupervised domain adaptation over a single (test) image] .citation[A. d'Innocente et al., One-Shot Unsupervised Cross-Domain Detection, ECCV 2020 <br> Y. Sun et al., Test-Time Training with Self-Supervision for Generalization under Distribution Shifts, ICML 2020] --- class: middle # What is next? ## Improving data and compute efficiency ## Going beyond single object images ## Going beyond curated datasets ## Towards better evaluation practices ## Multi-modal reasoning with self-supervised learning ## New applications of self-supervised learning --- layout: false class: end-slide, center count: false The end.