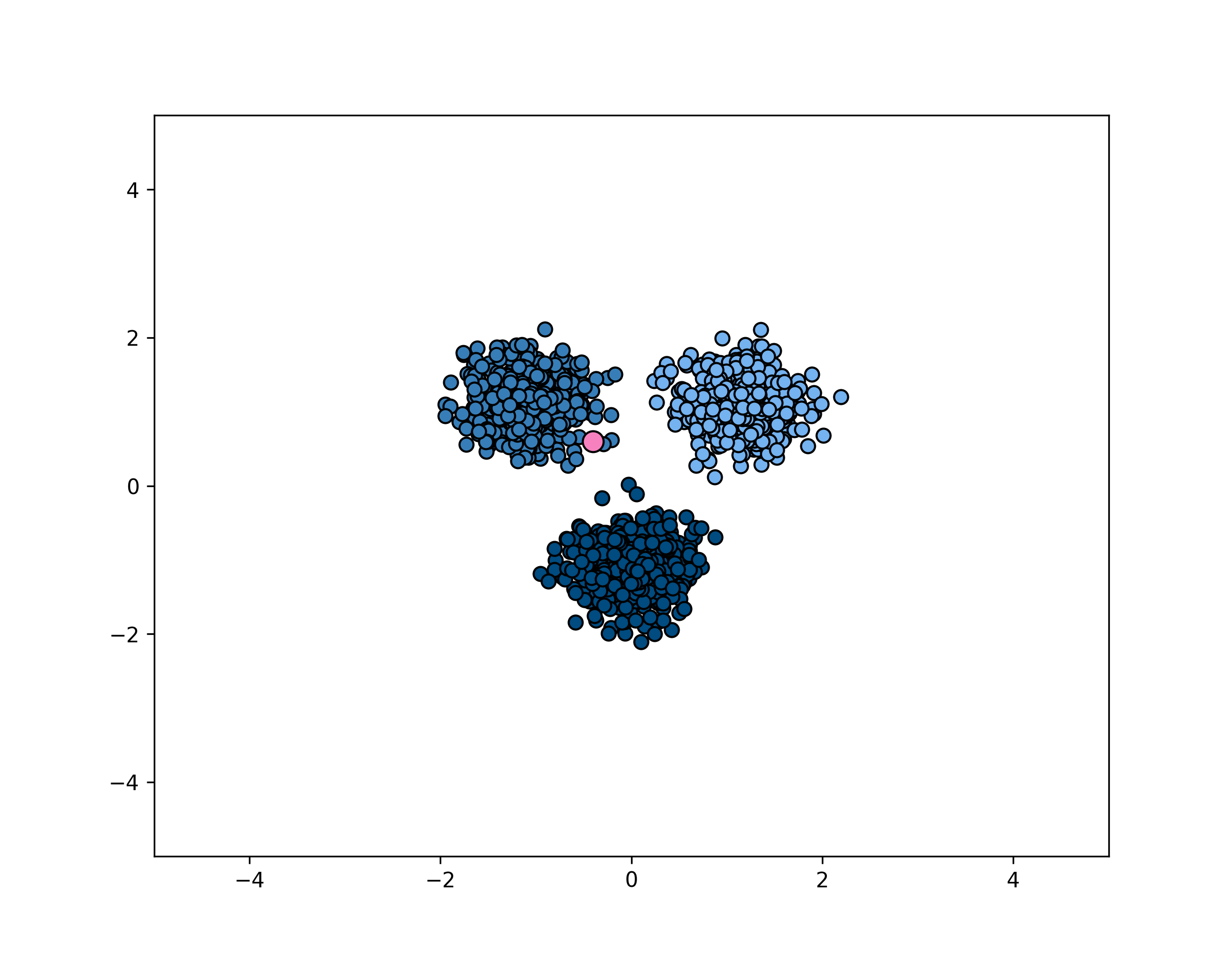

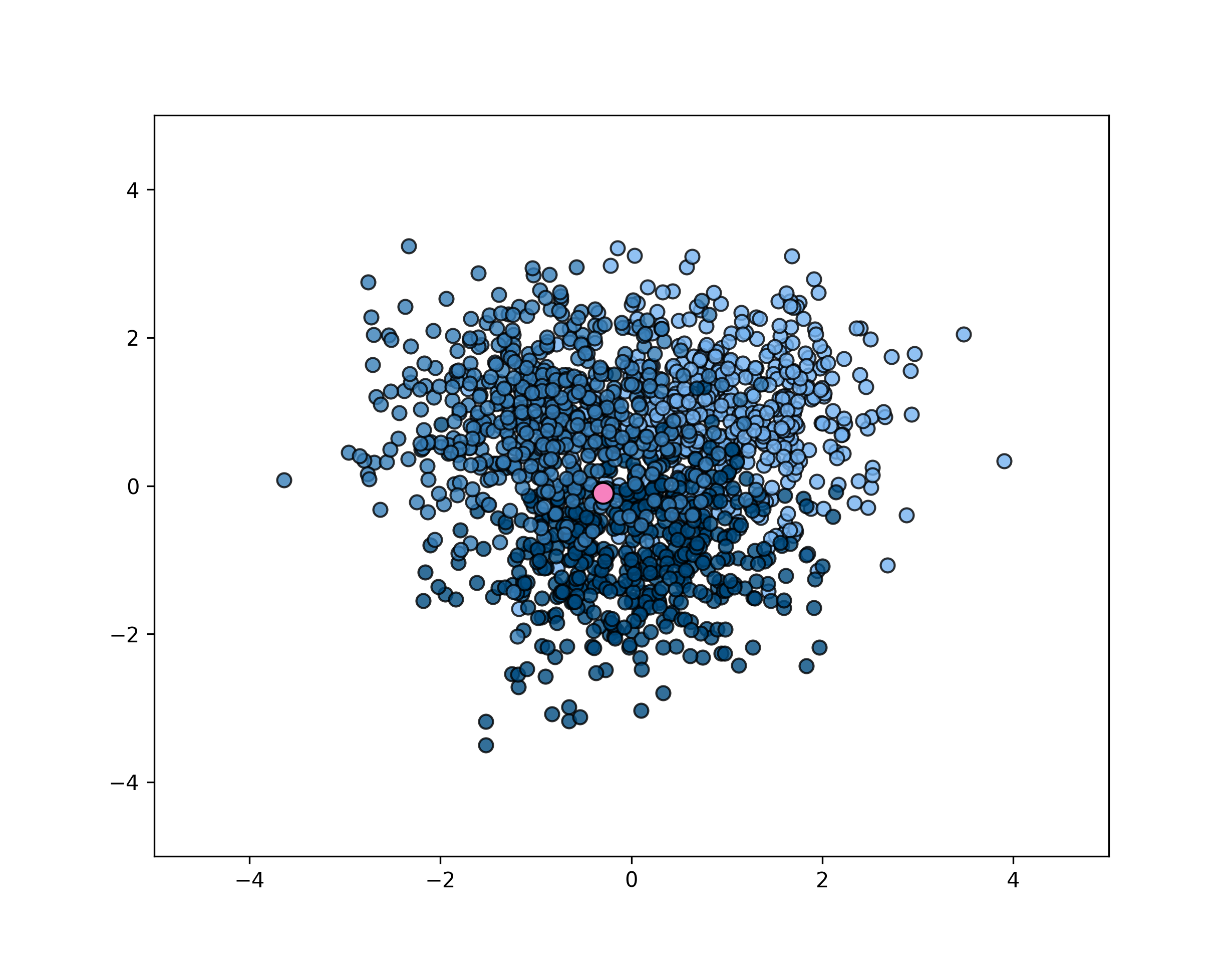

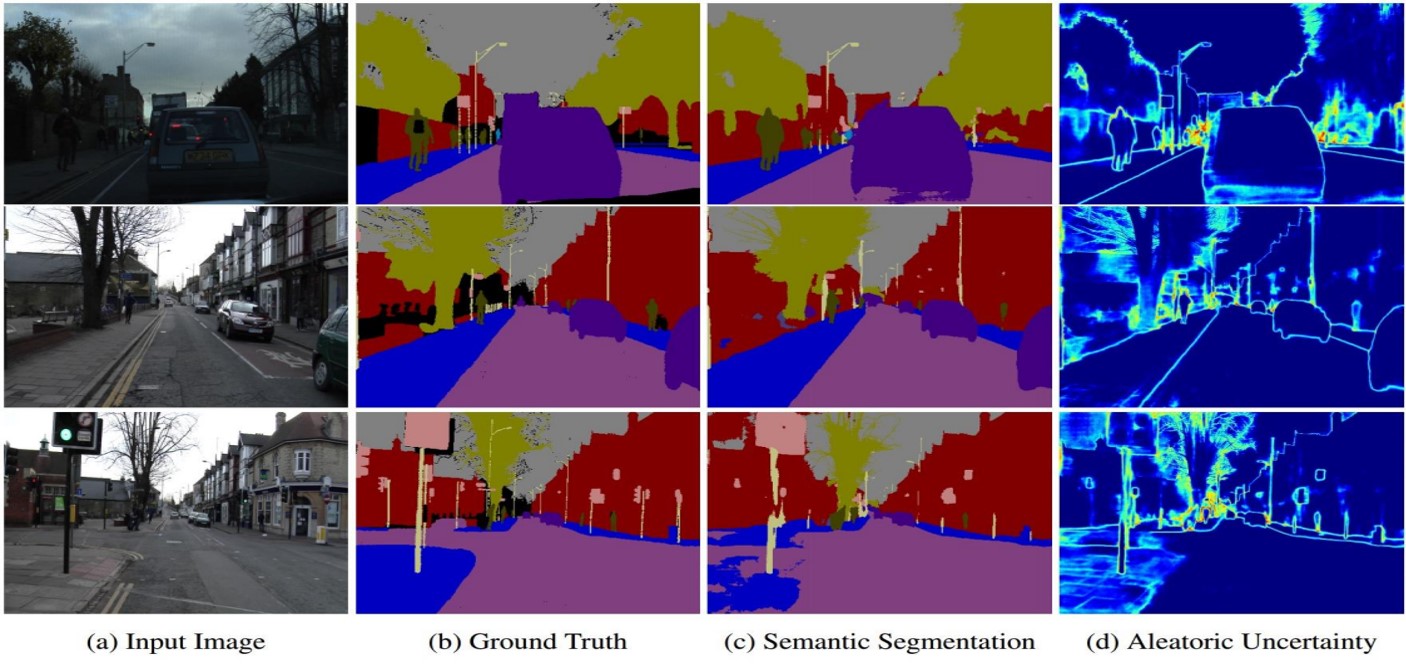

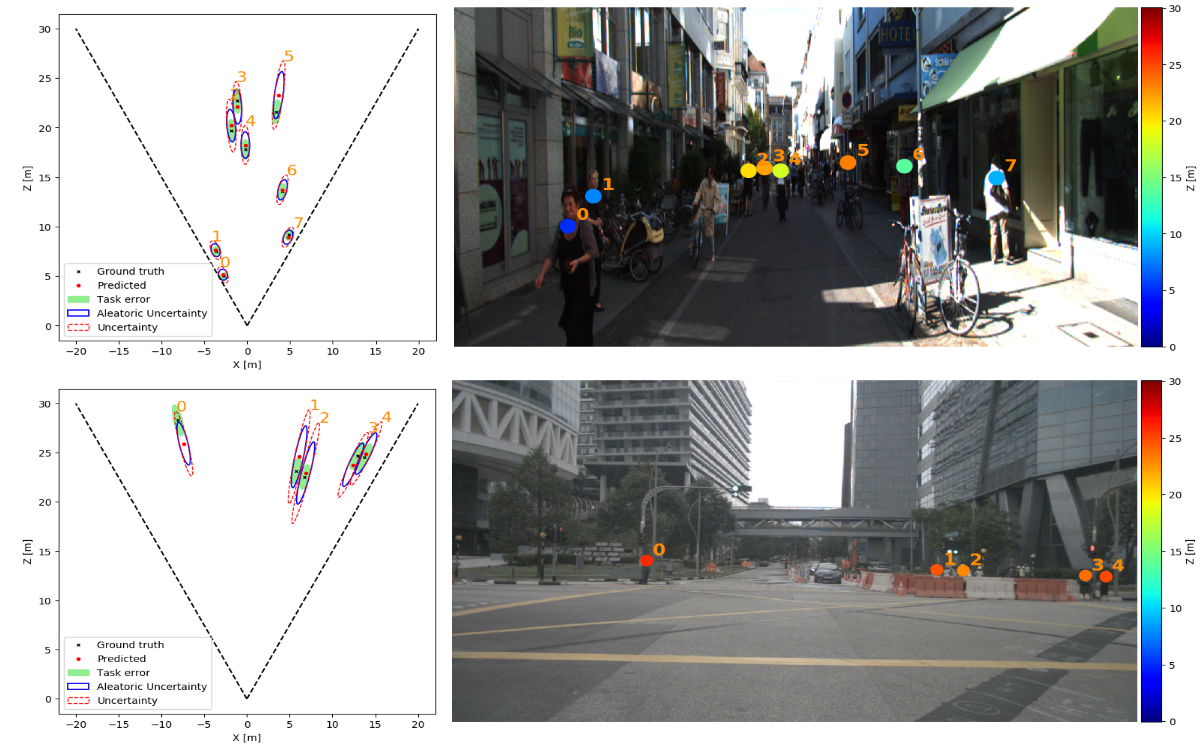

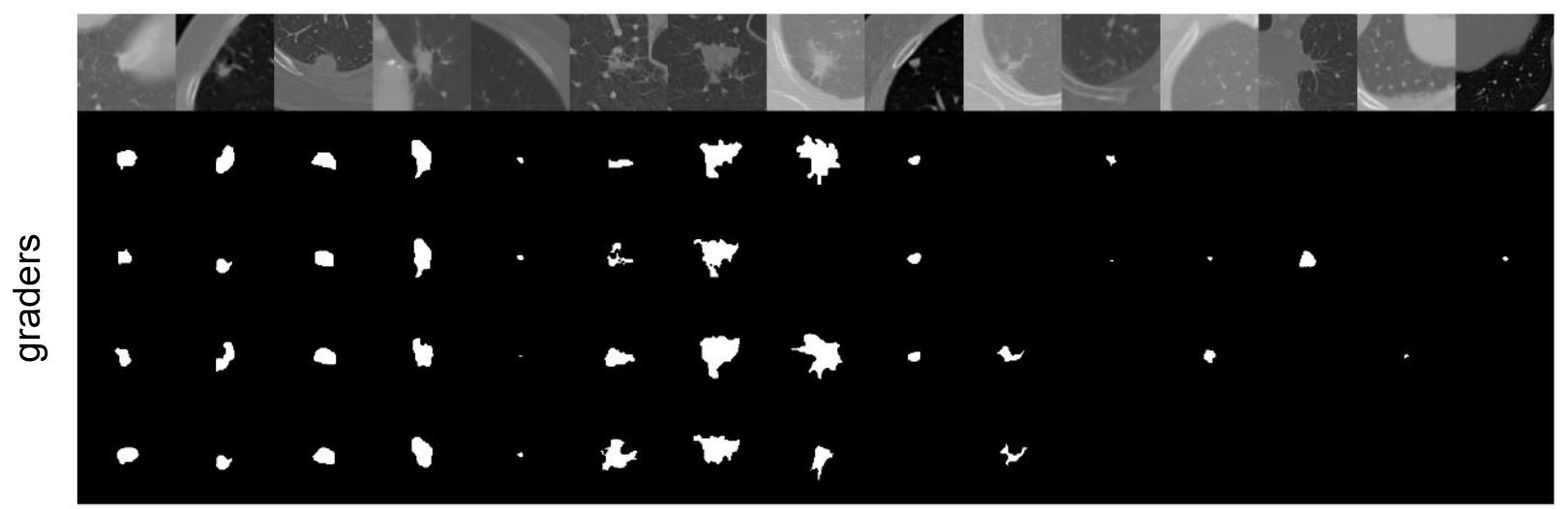

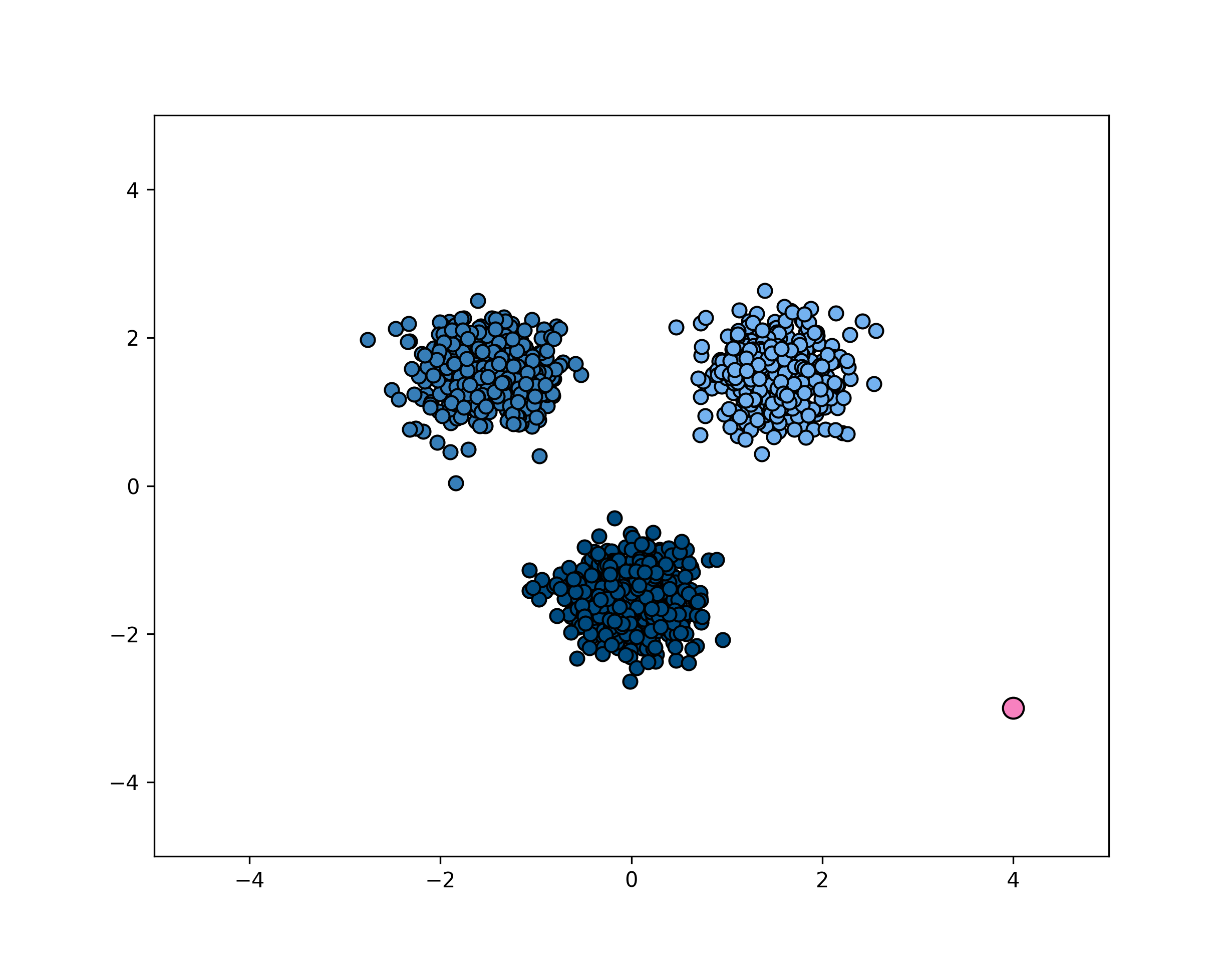

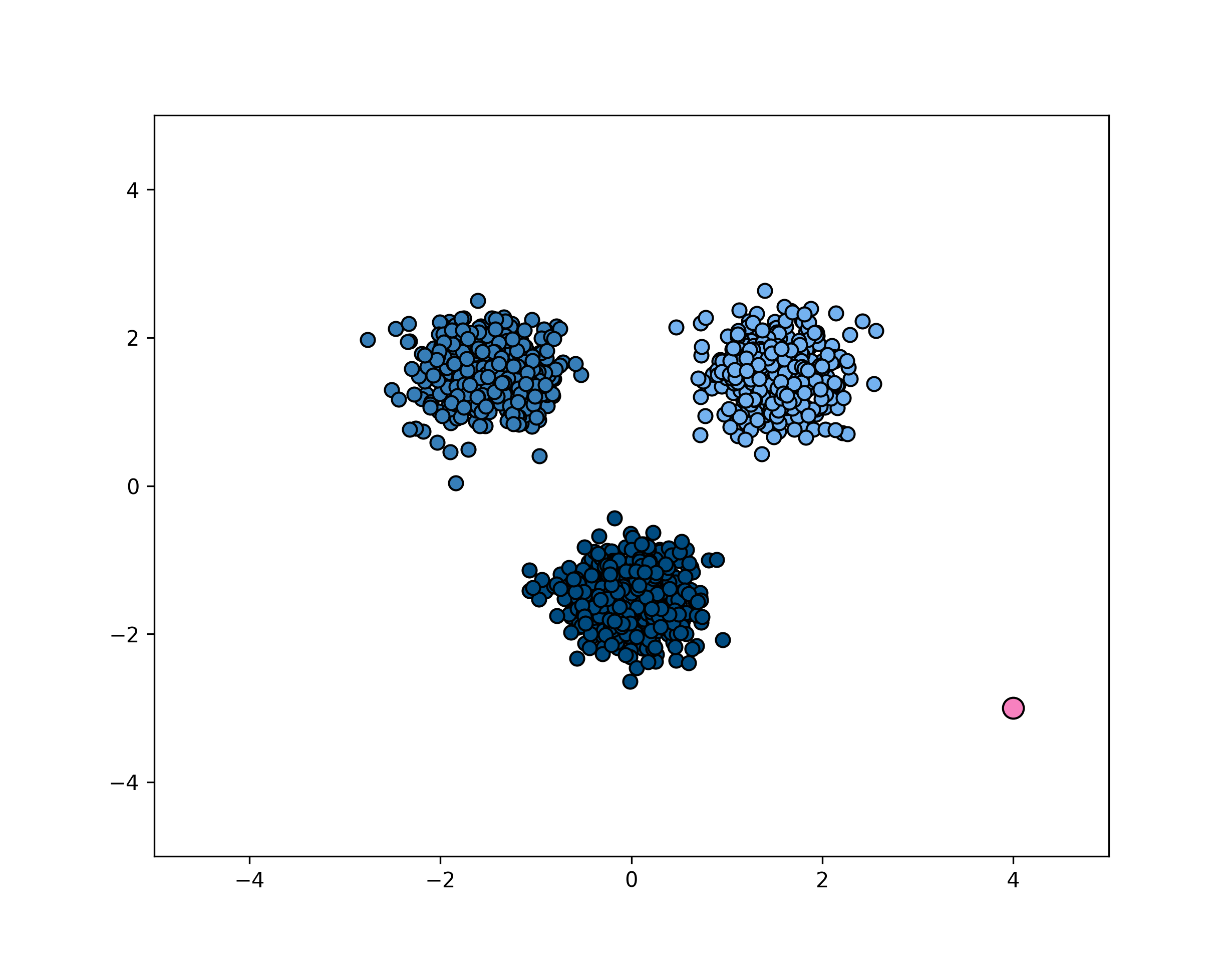

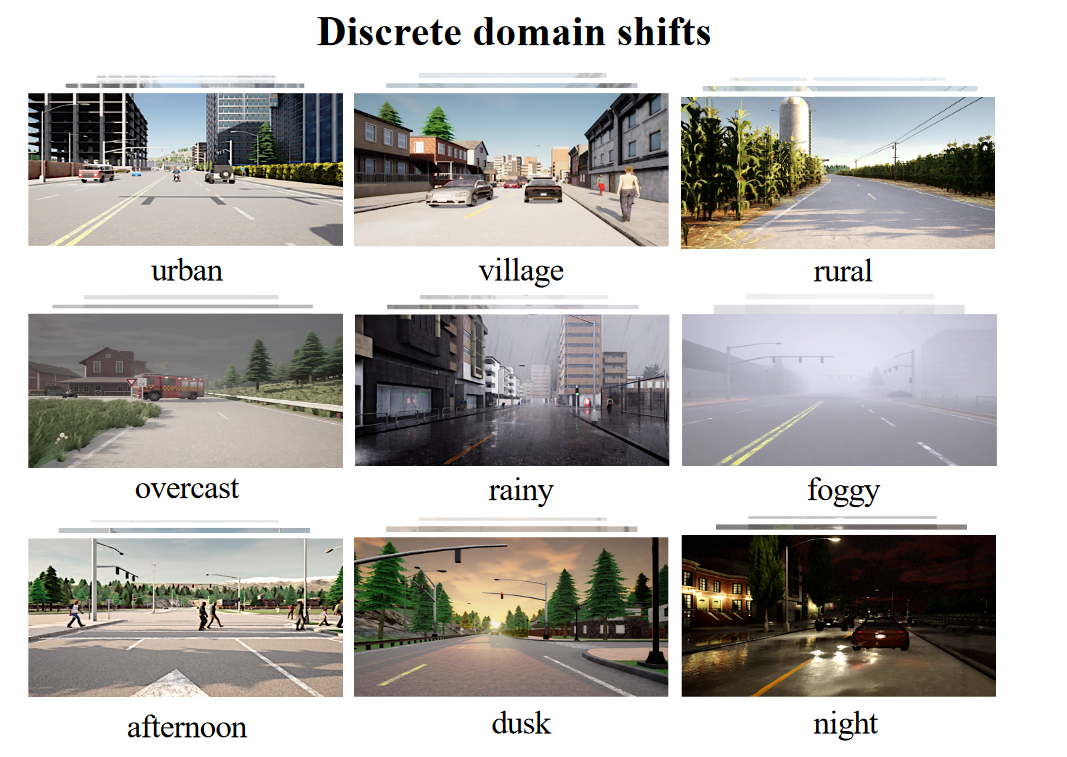

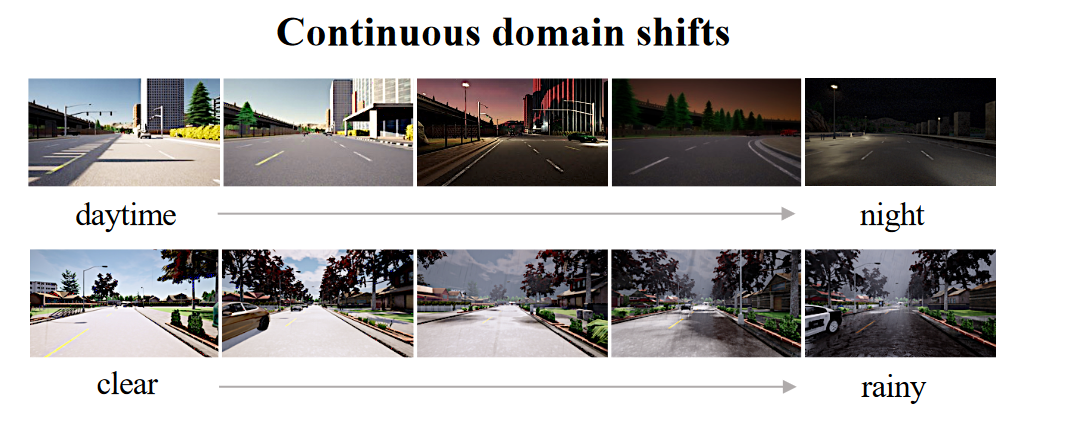

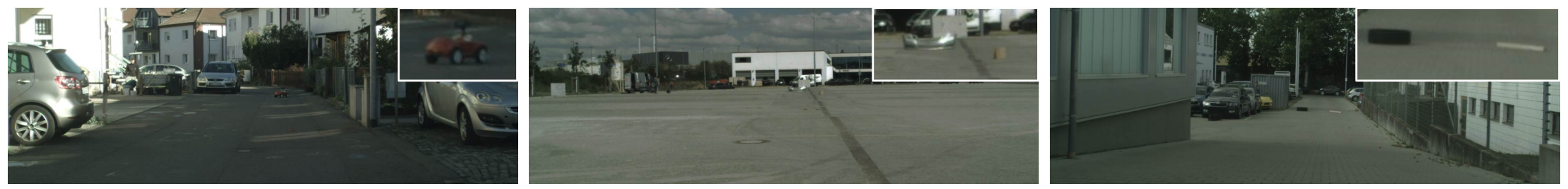

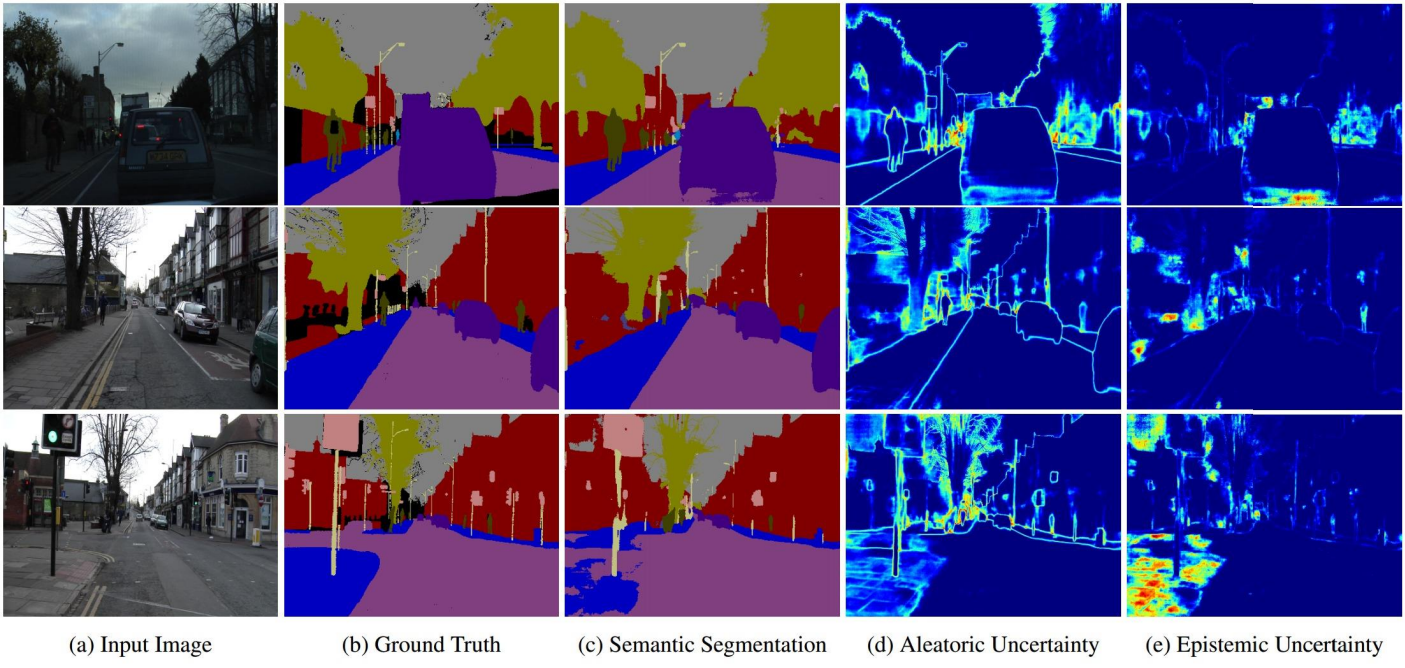

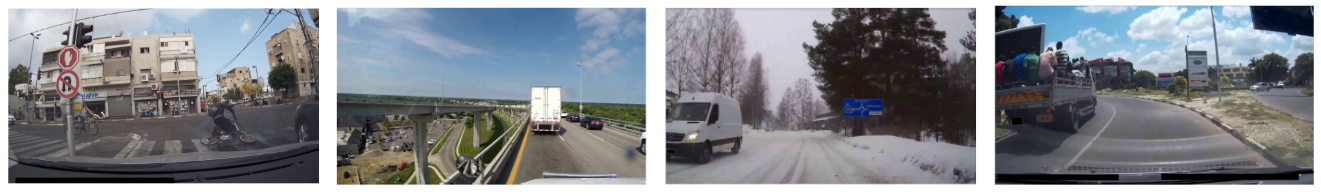

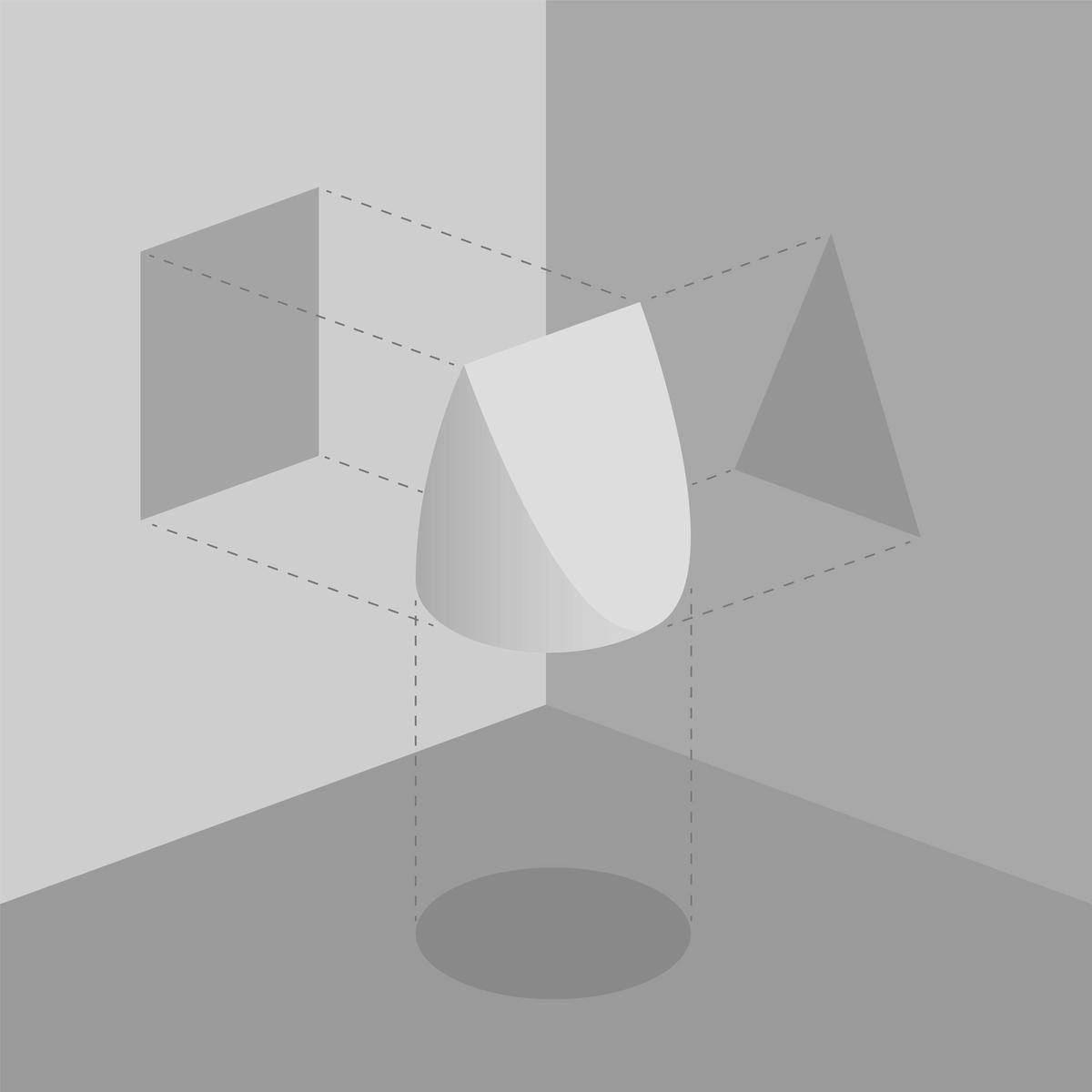

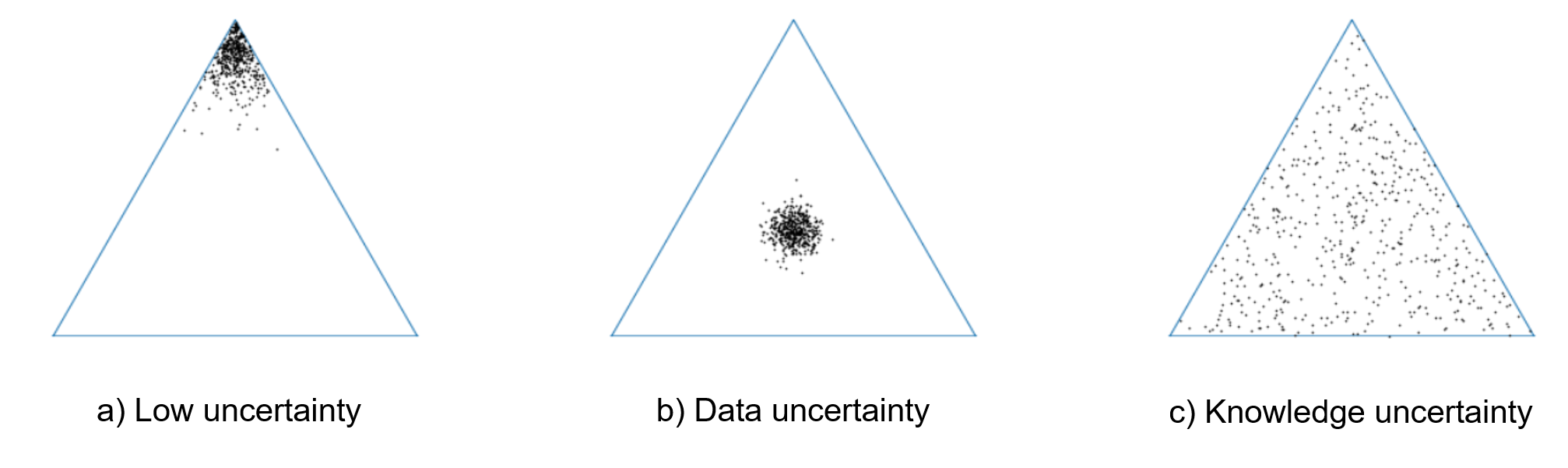

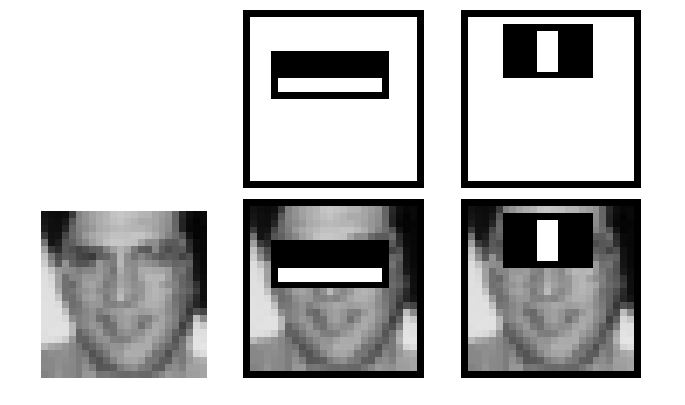

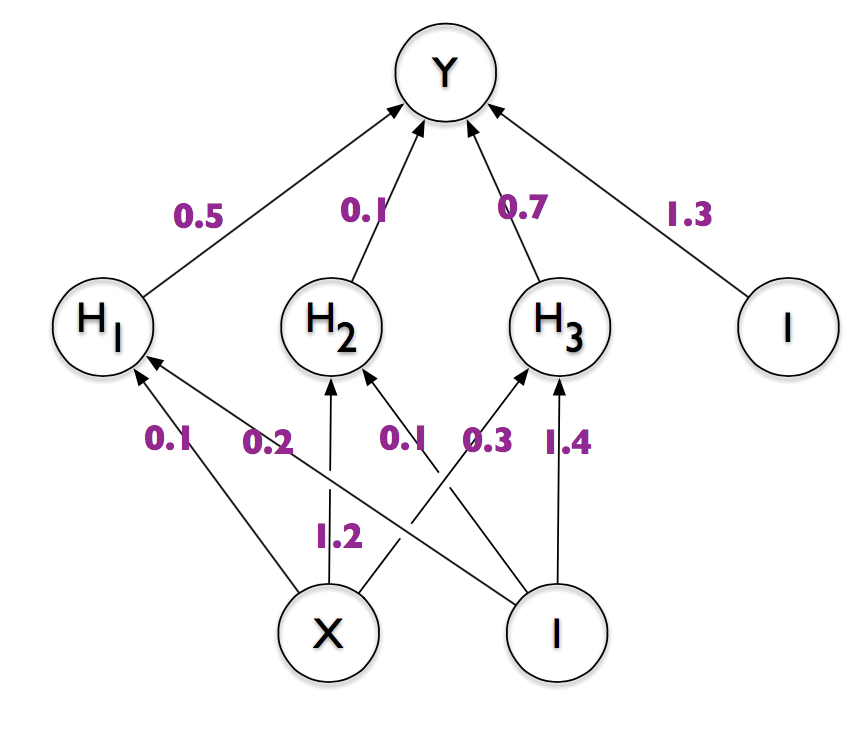

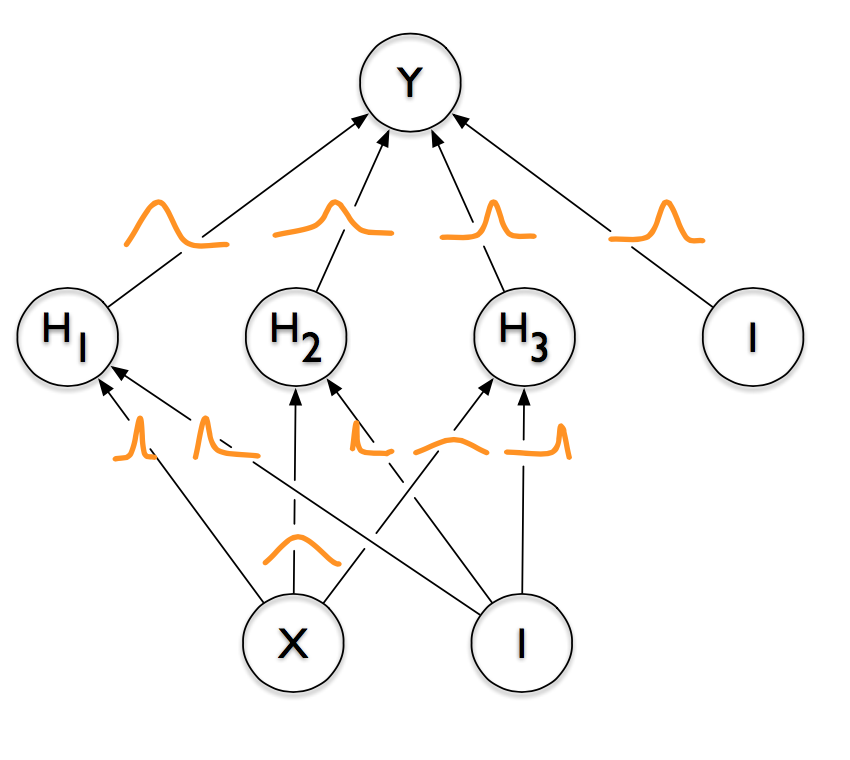

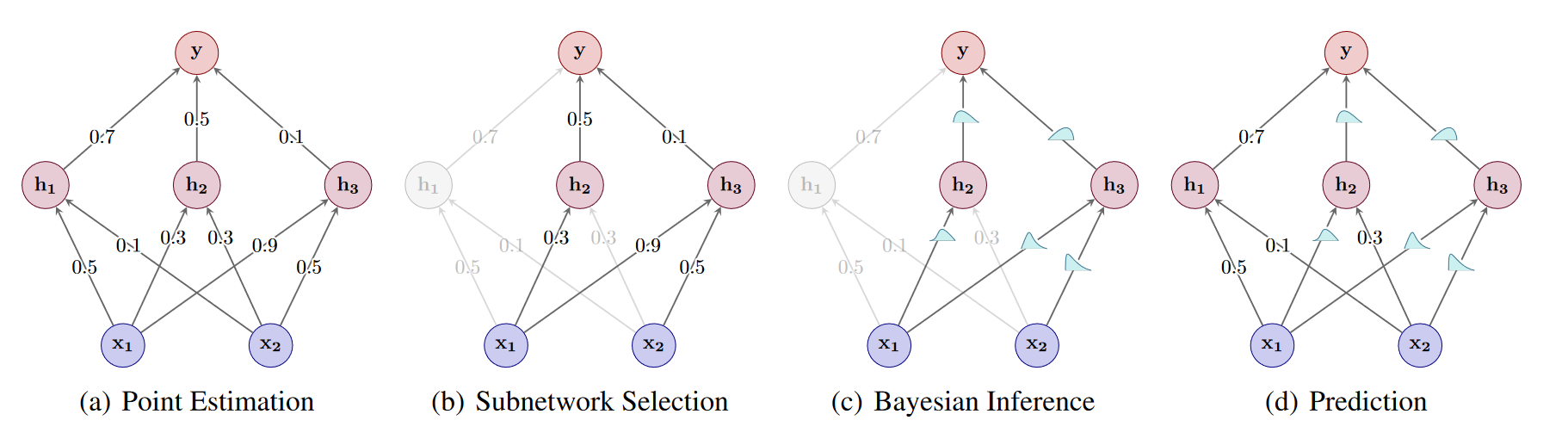

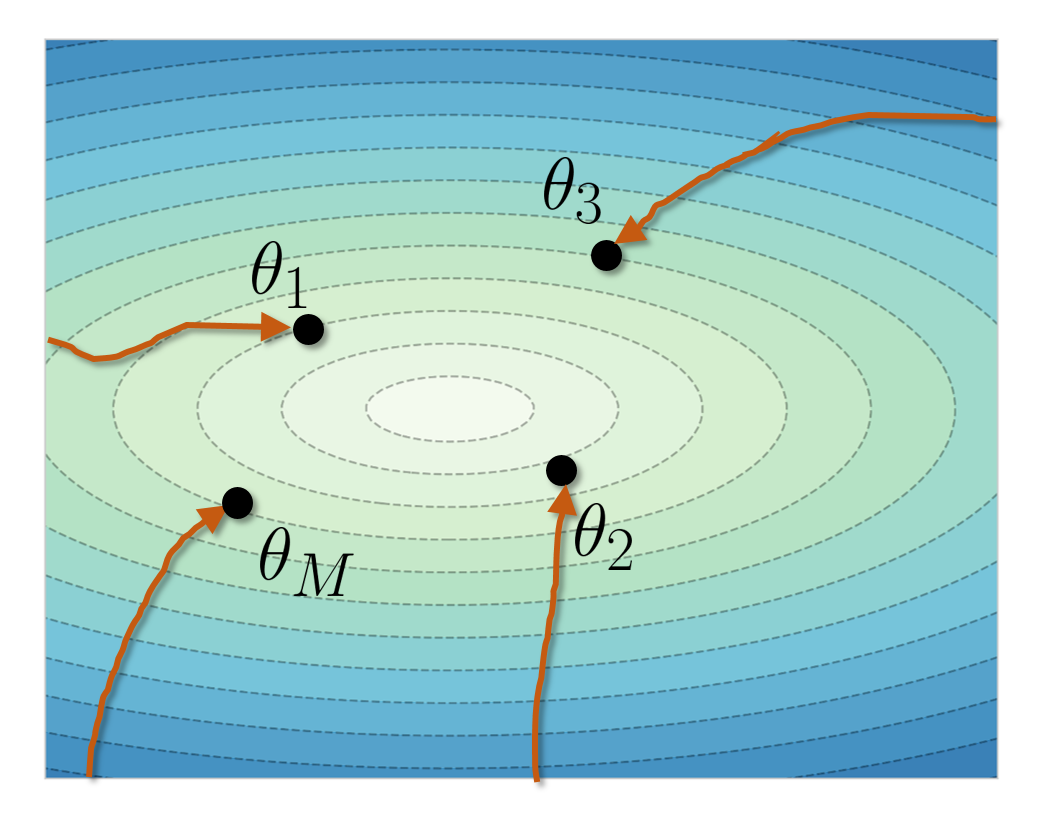

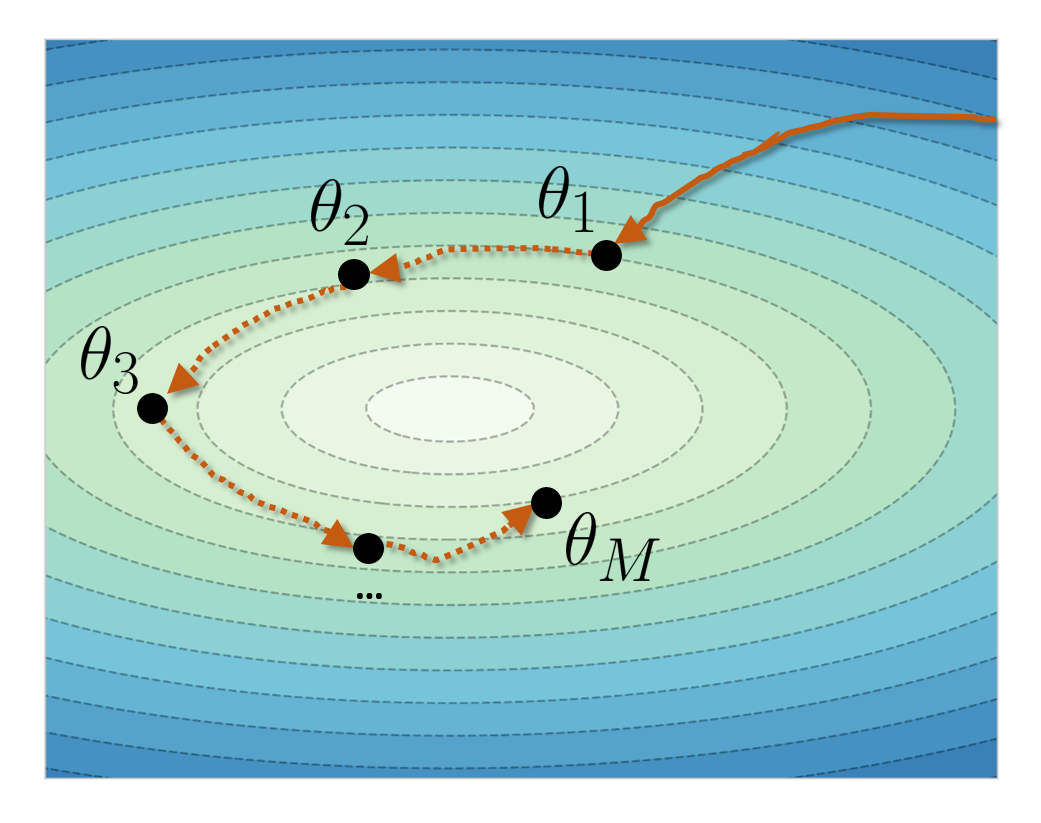

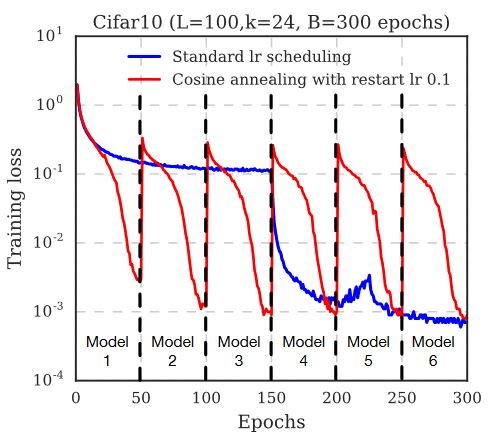

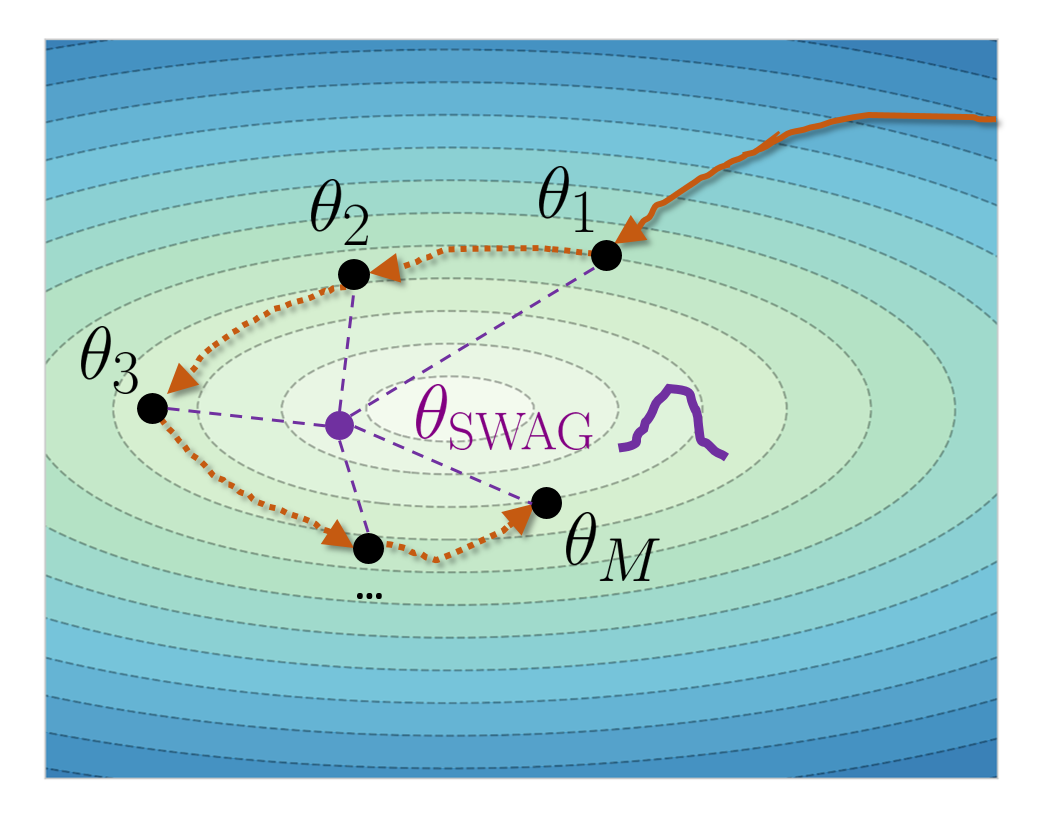

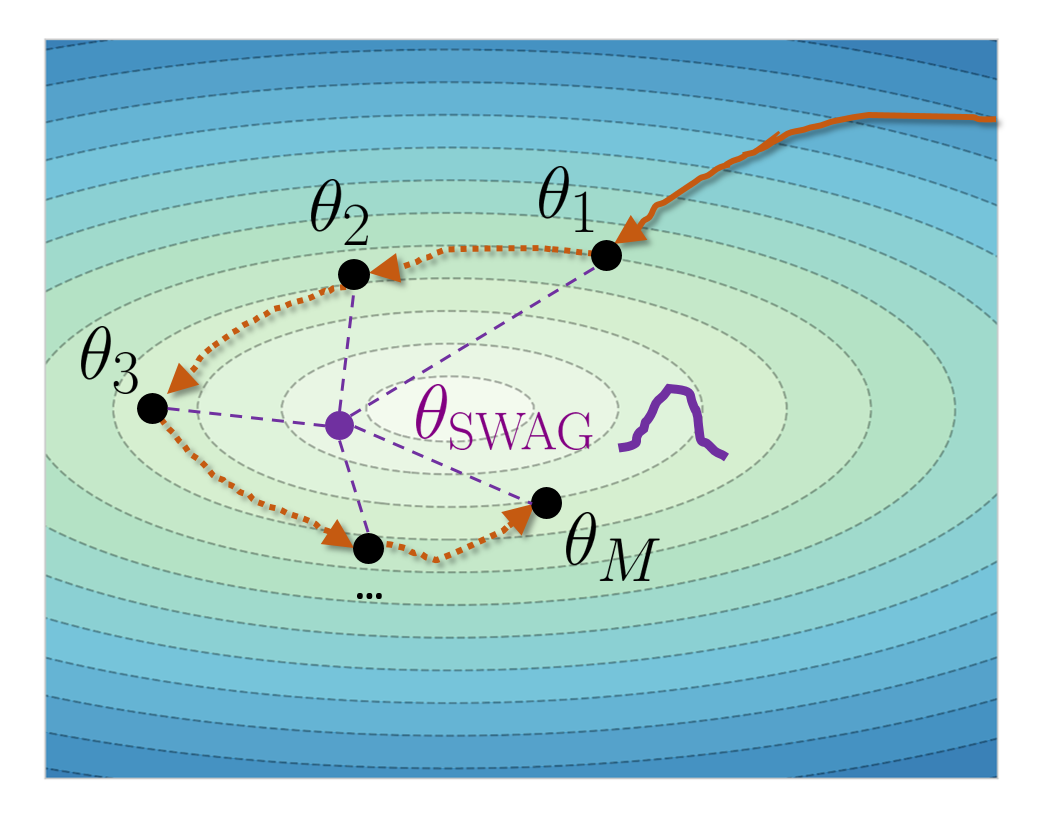

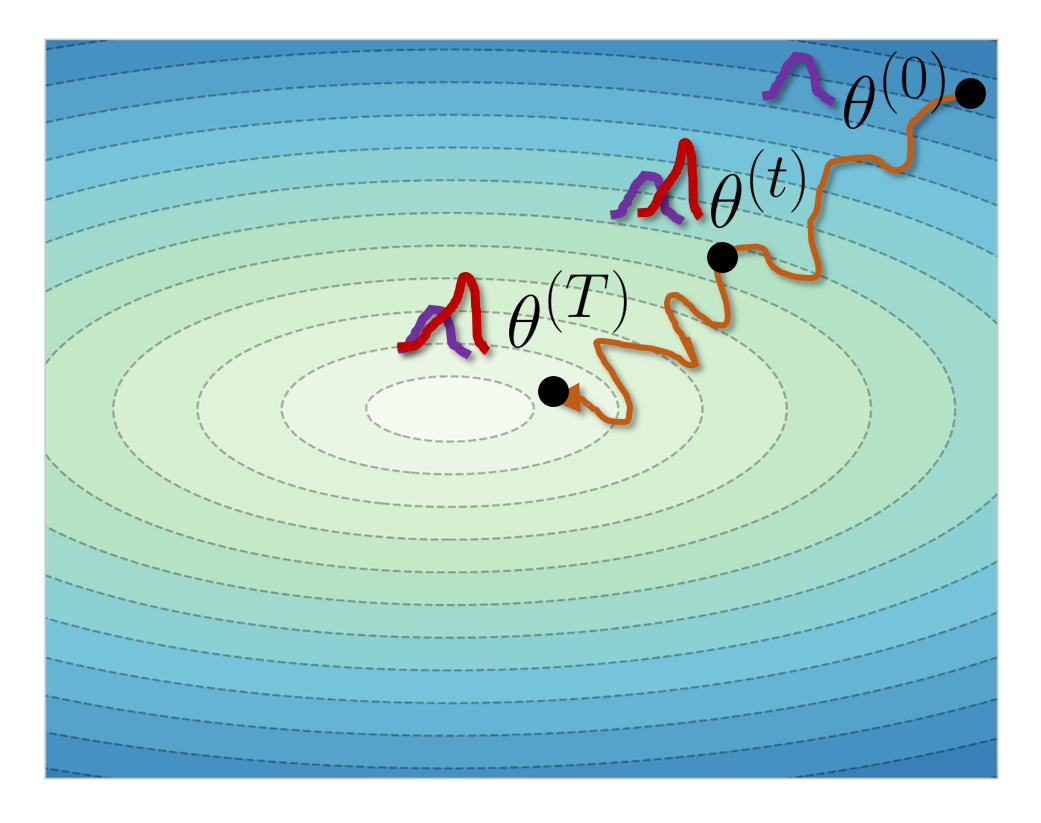

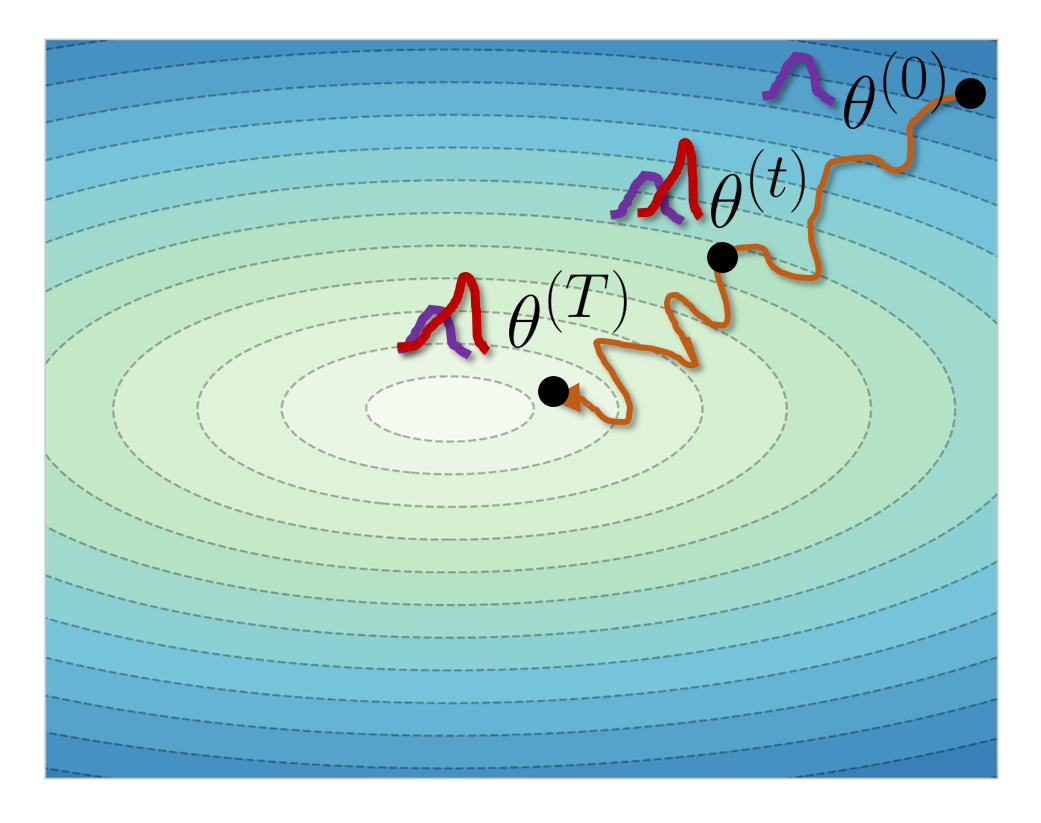

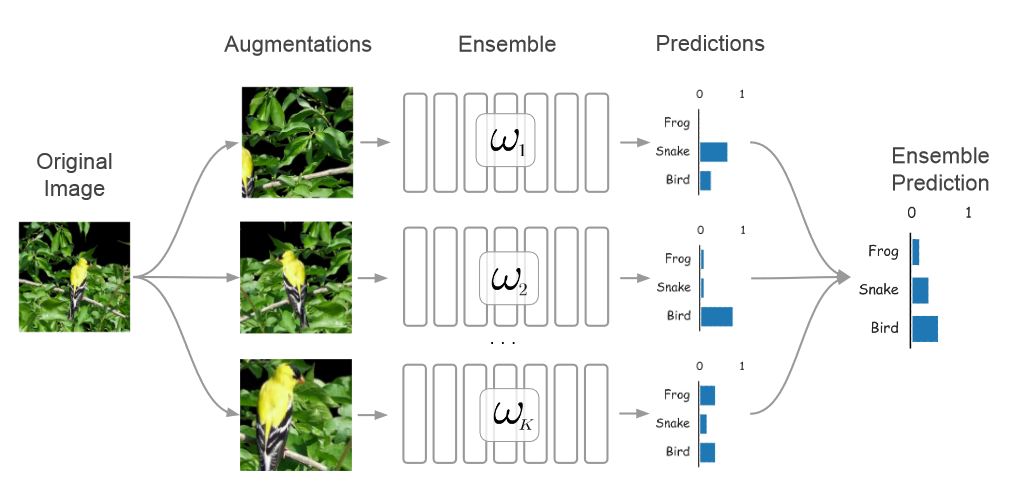

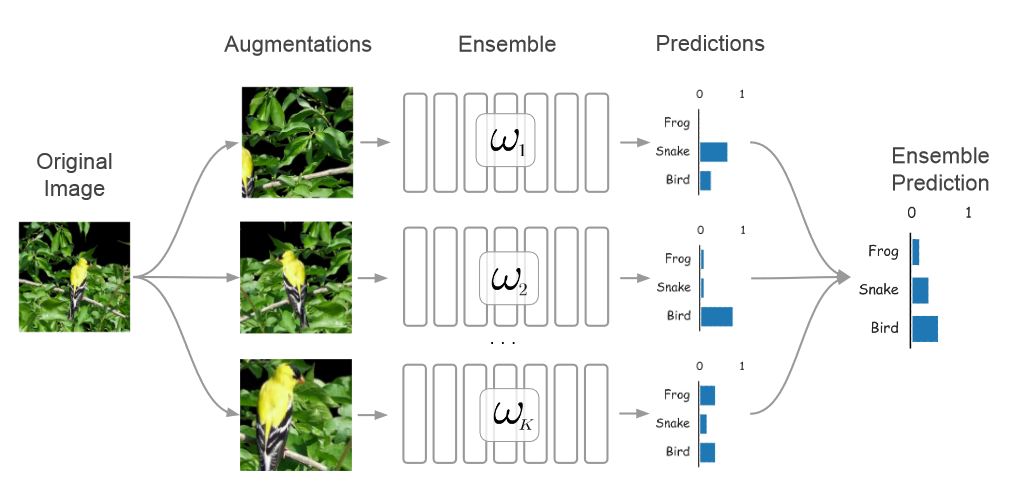

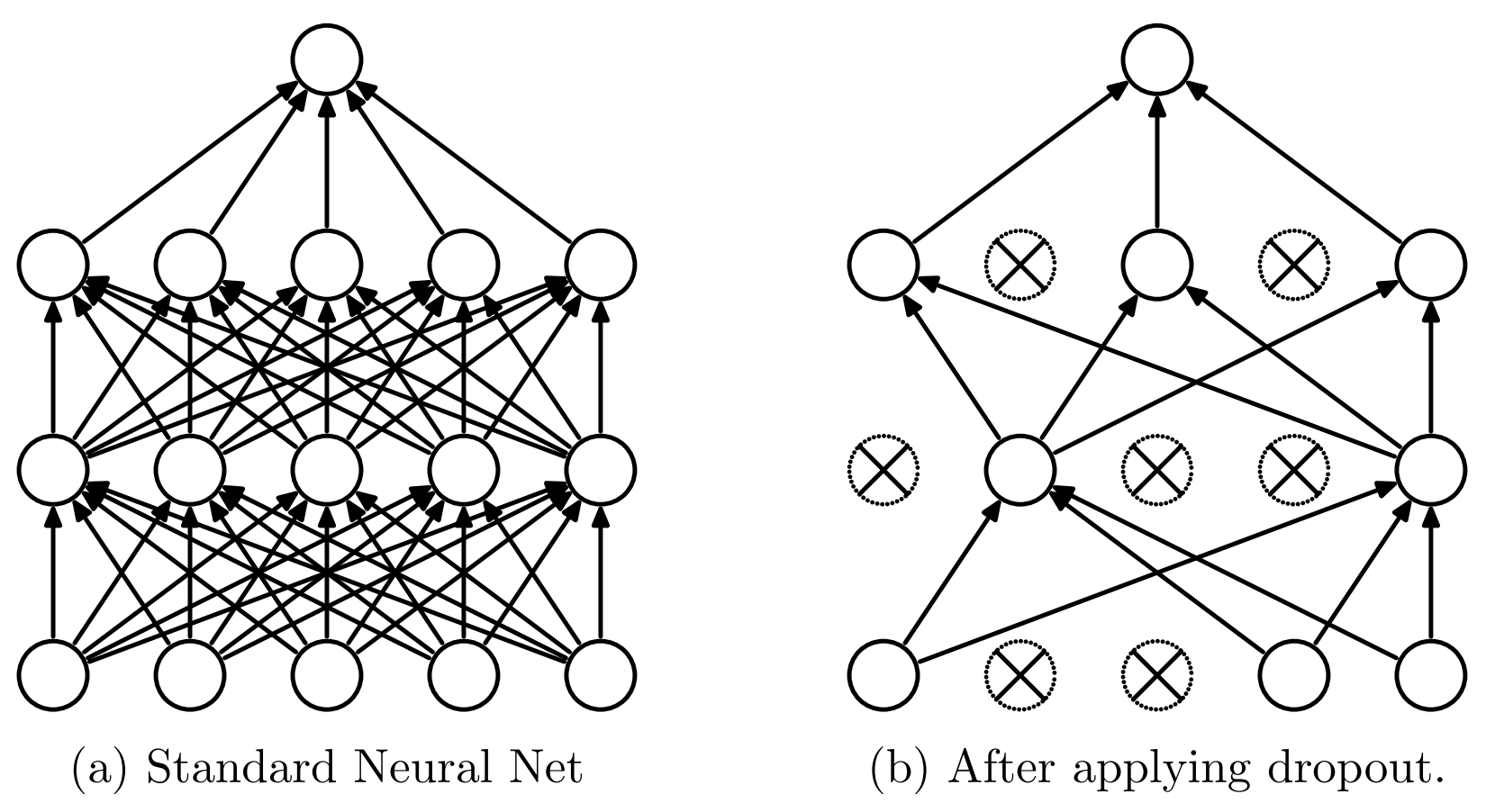

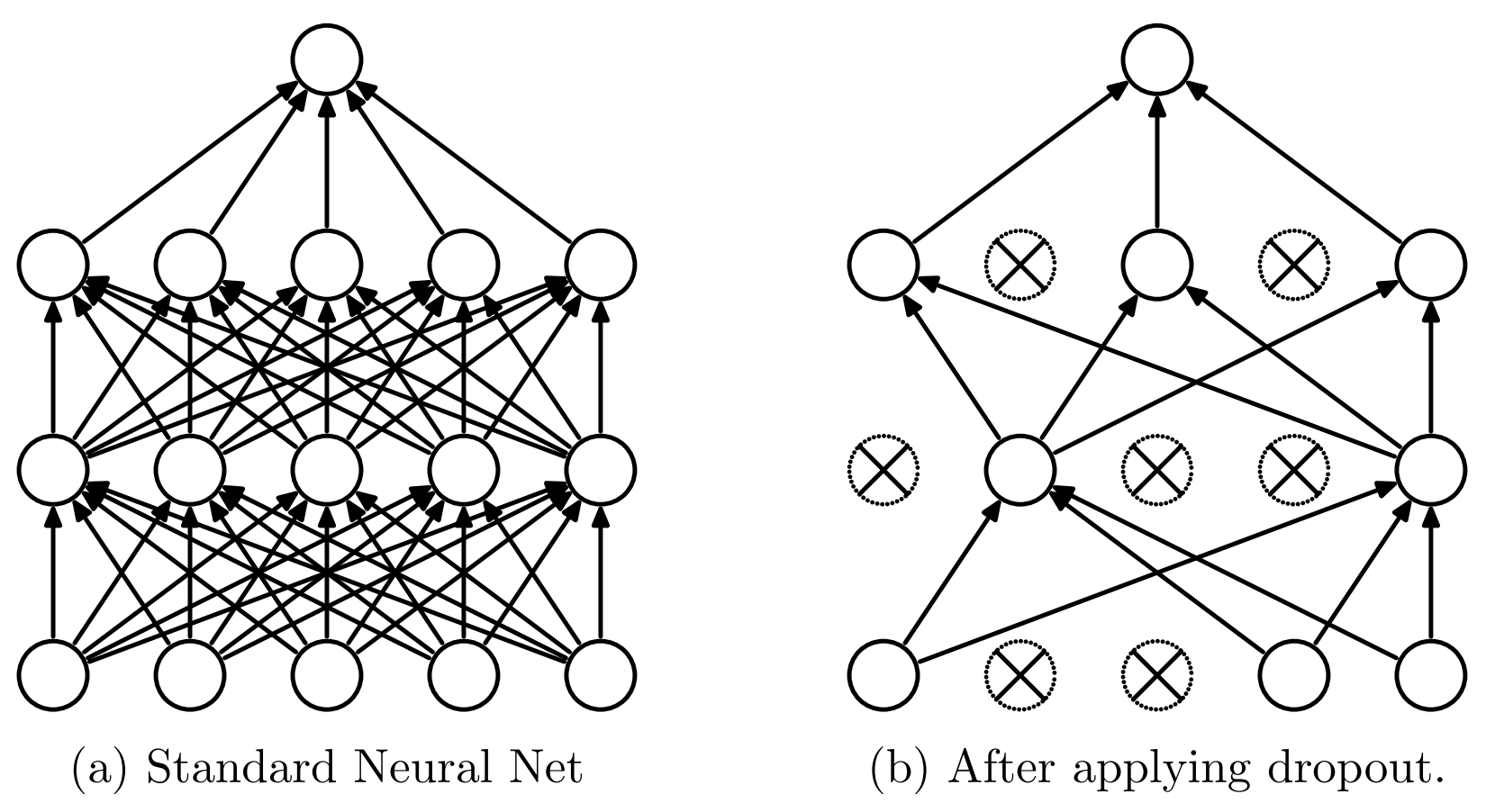

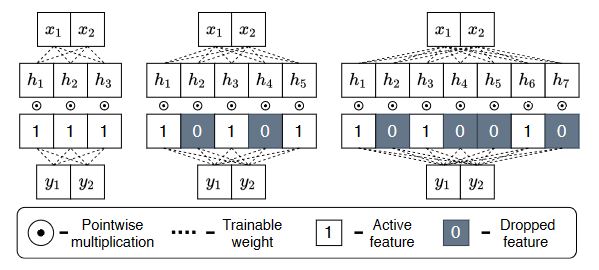

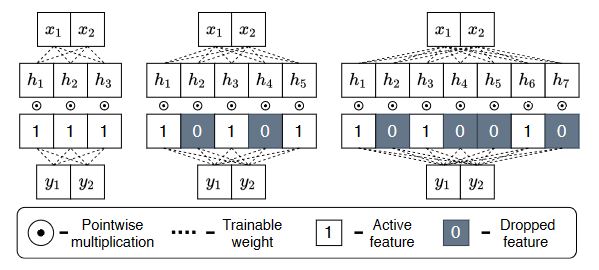

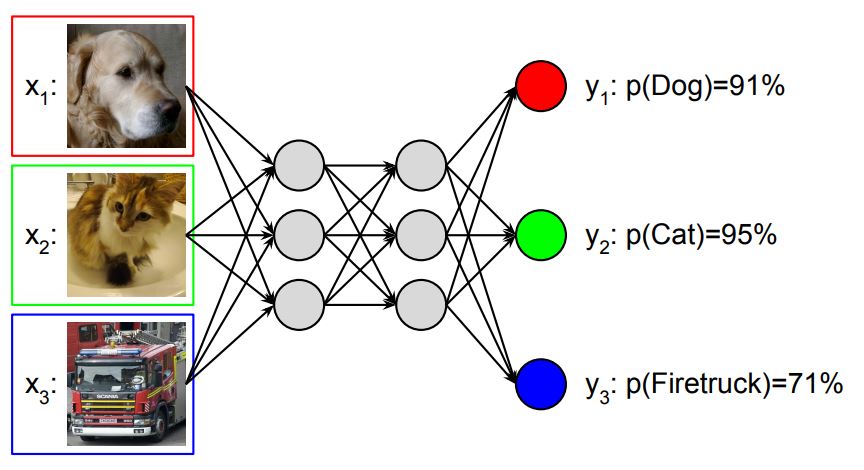

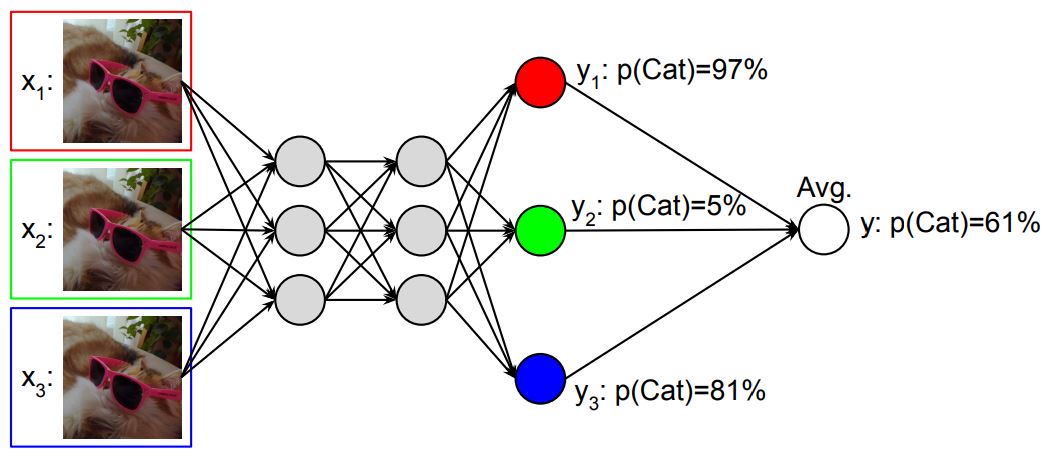

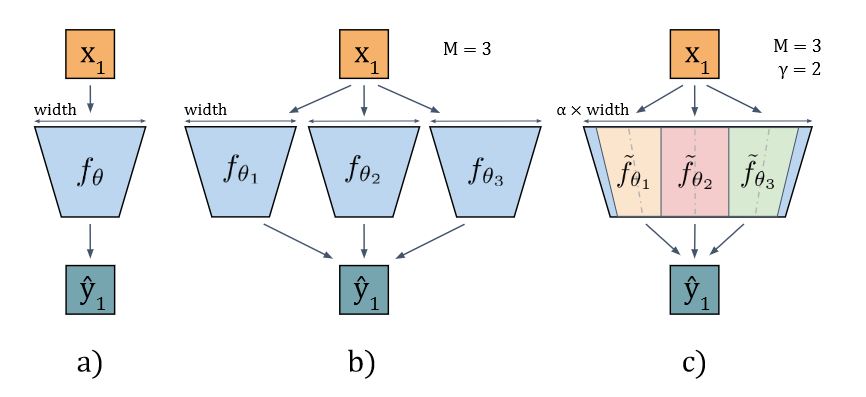

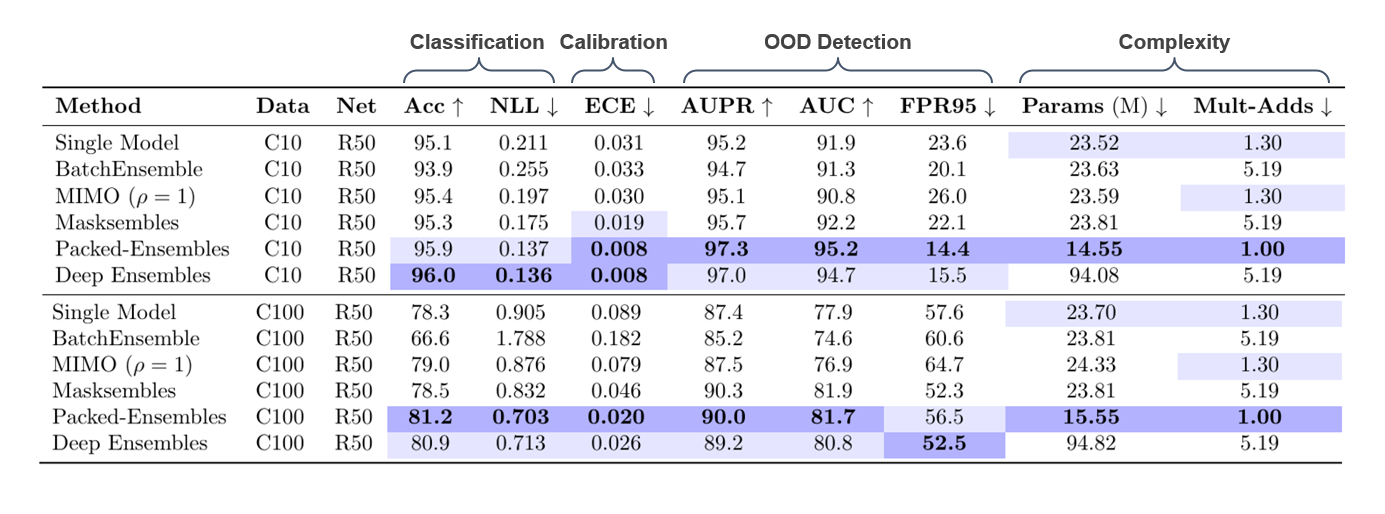

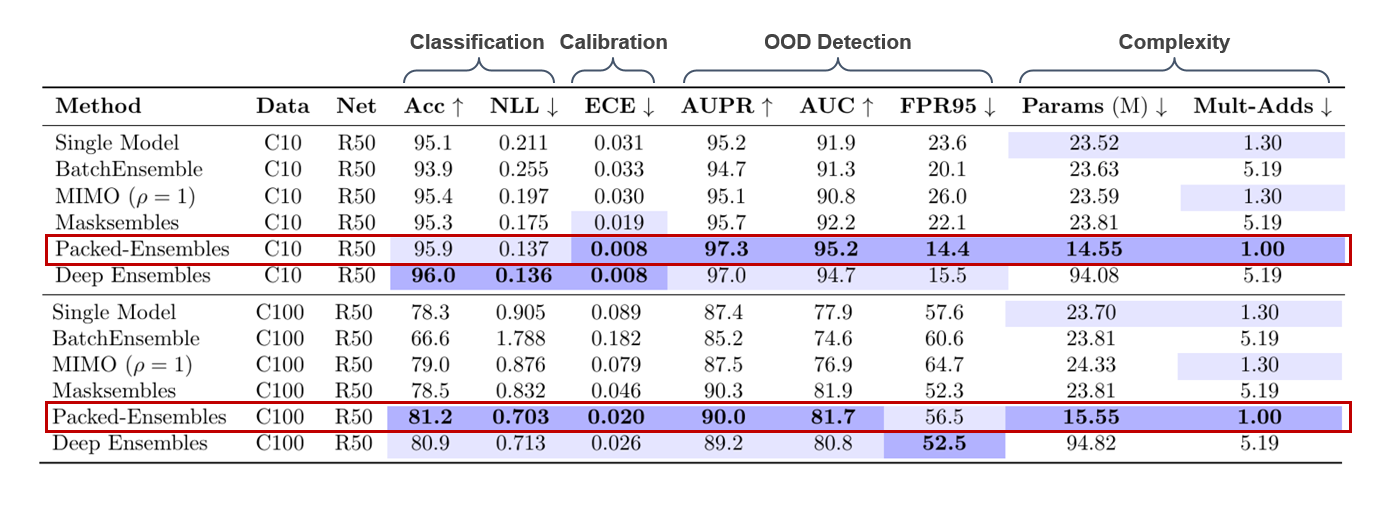

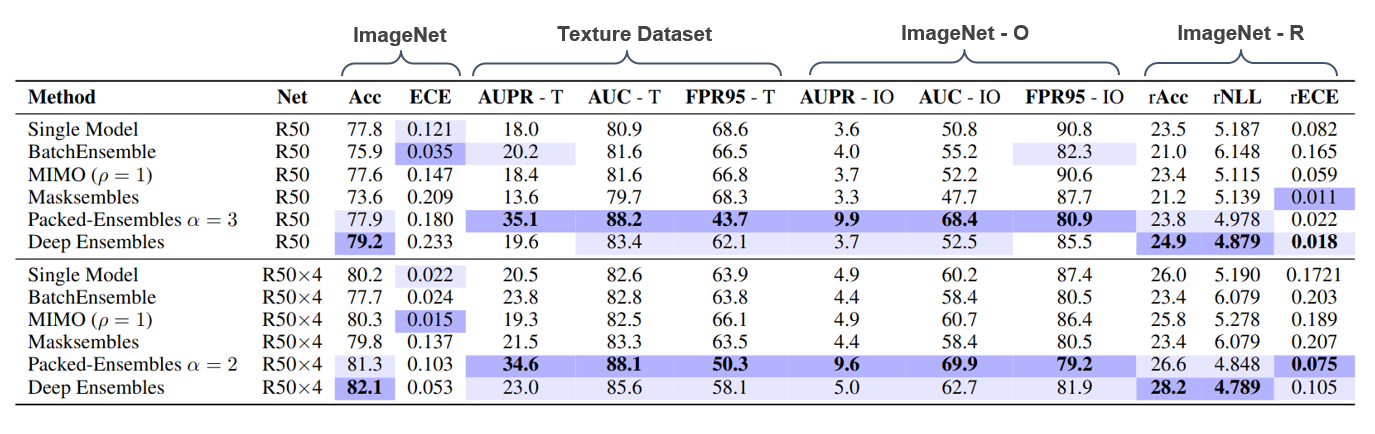

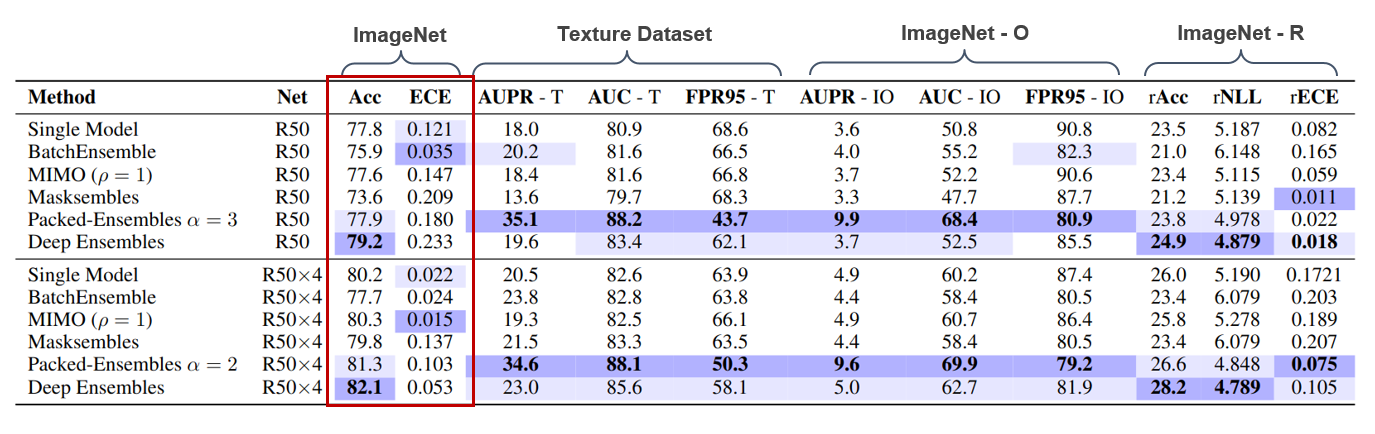

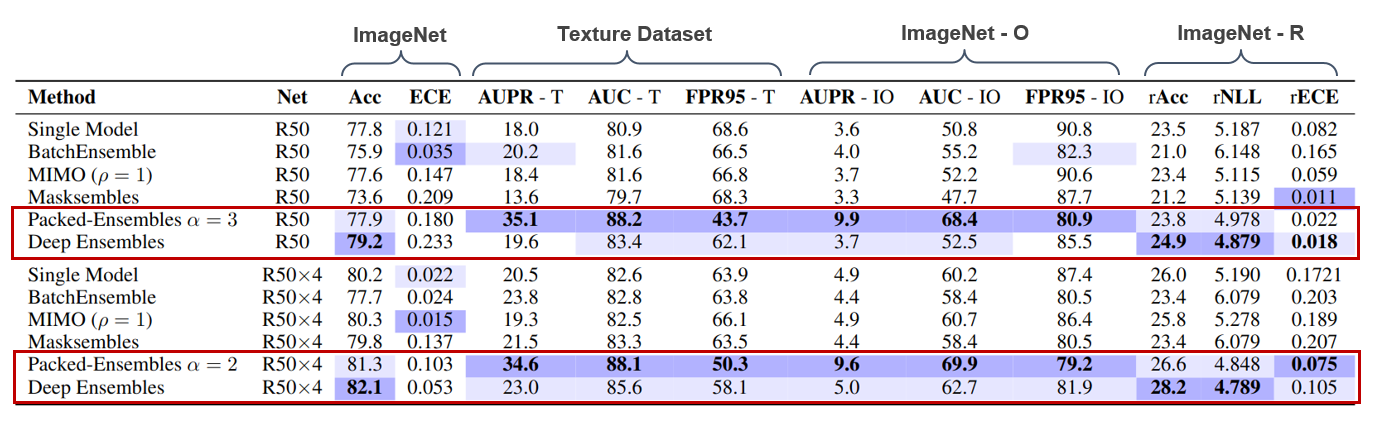

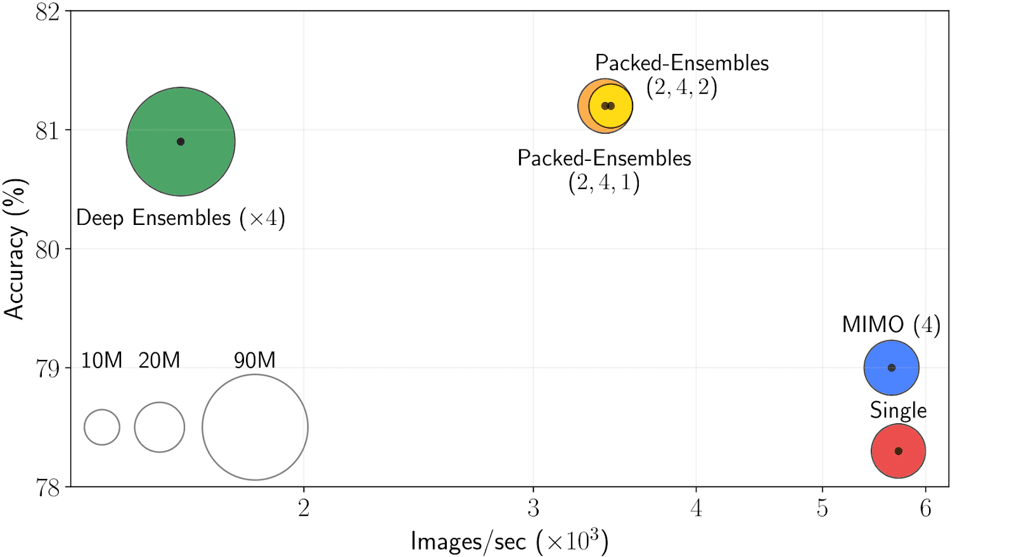

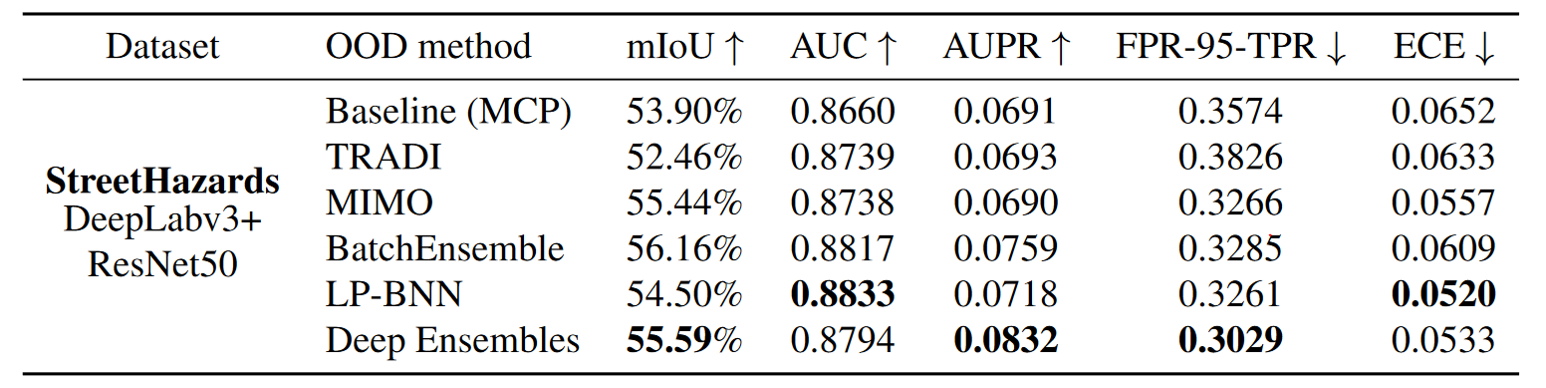

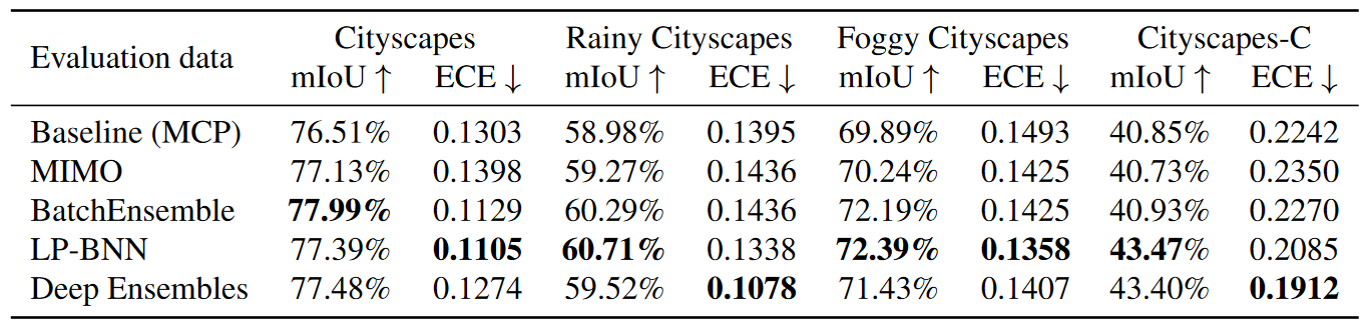

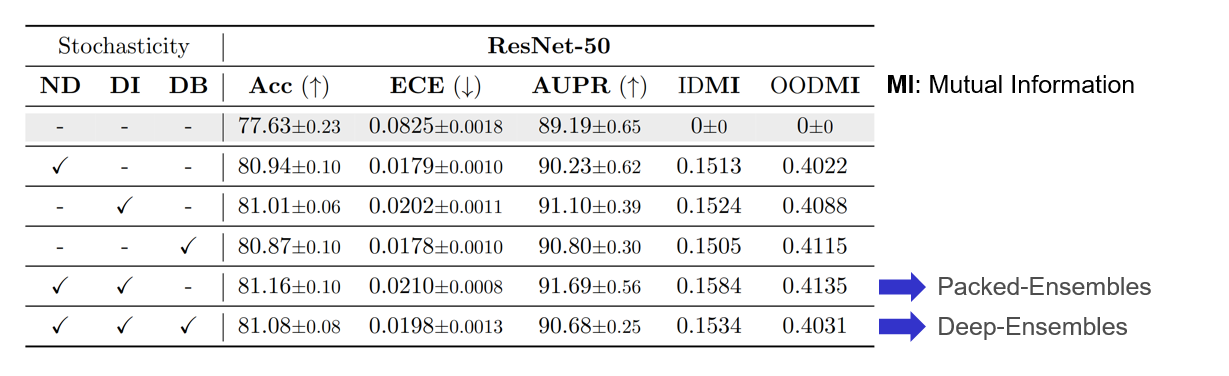

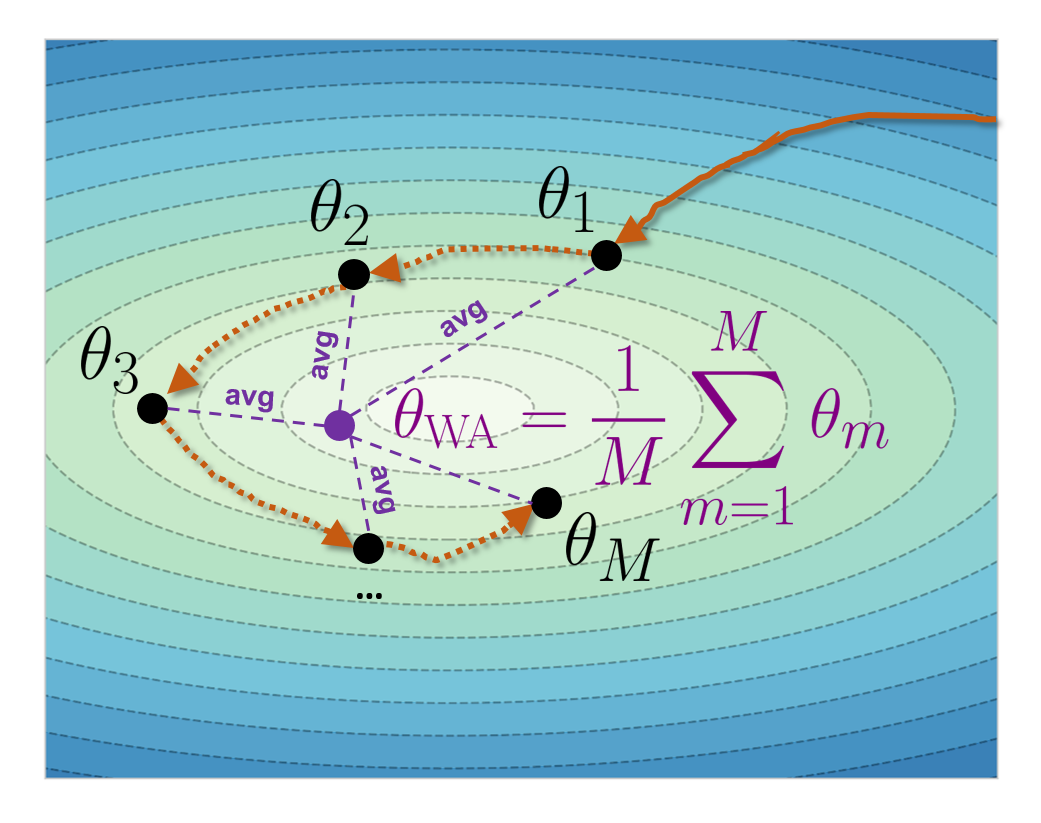

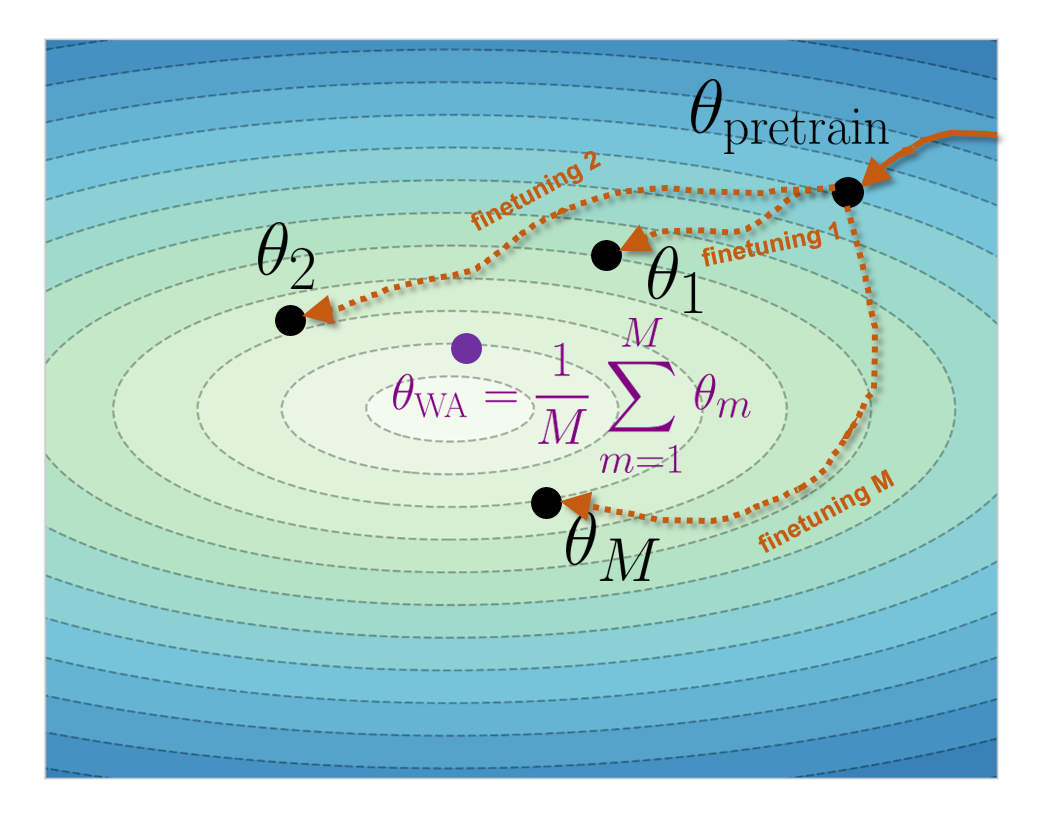

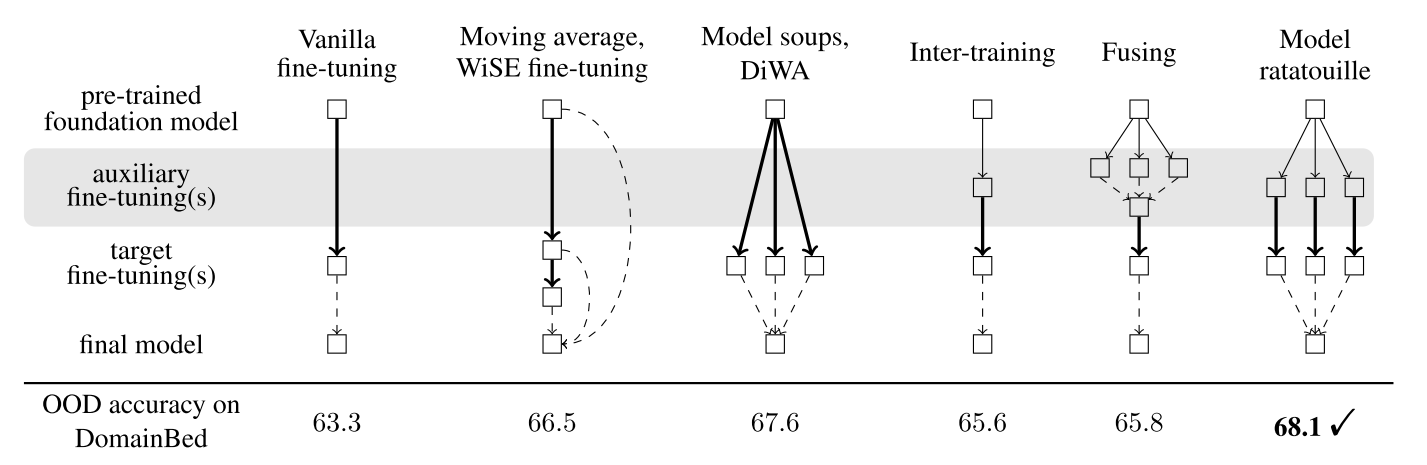

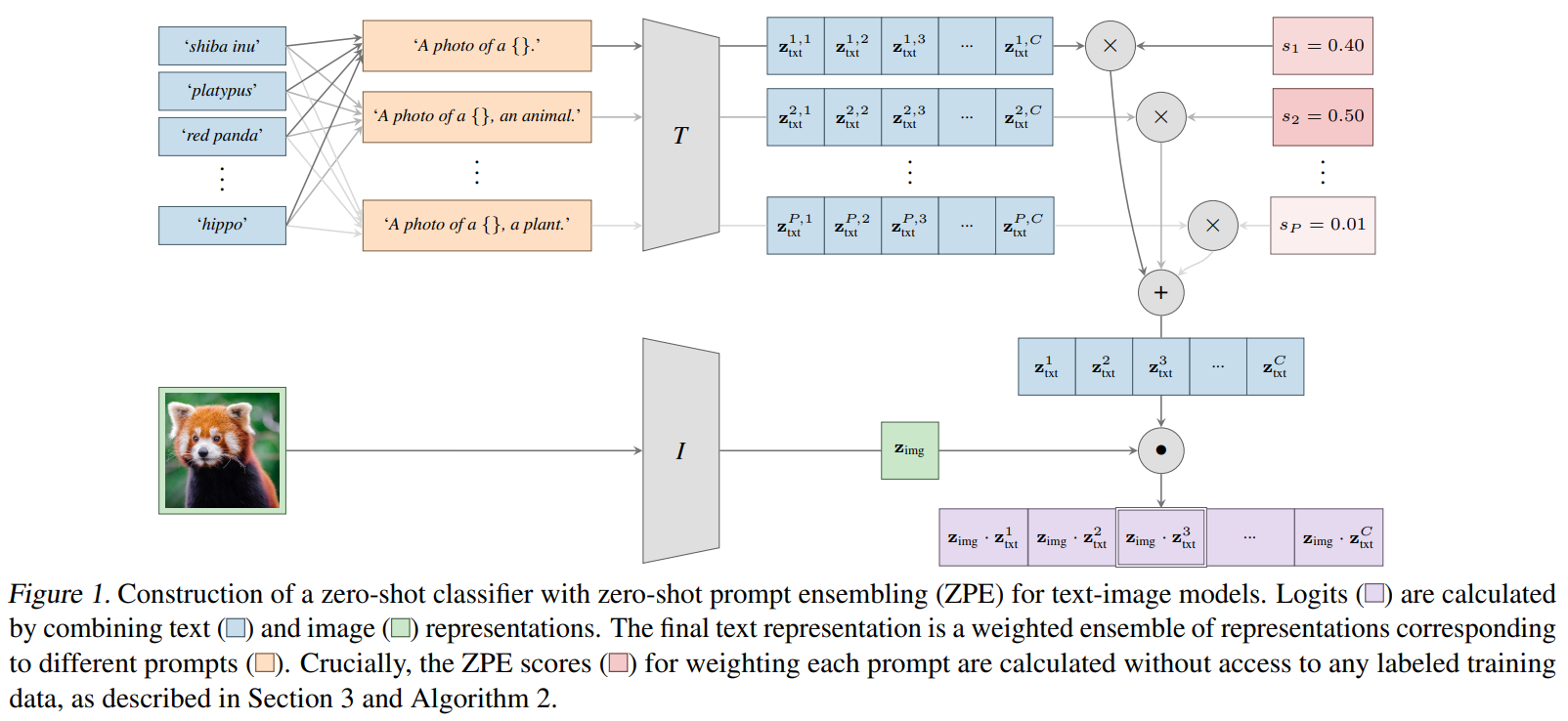

layout: true
<div class="header-logo"><img src="images/iccv-logo.svg" /></div>
.center.footer[Andrei BURSUC | The Many Faces of Reliability: Uncertainty Estimation and Ensemble Approaches ]

---
class: center, middle

## .bold[ICCV 2023 Tutorial]
# The Many Faces of Reliability of Deep Learning <br>for Real-World Deployment
<br>
.grid[
.kol-4-12[ .bold[Andrei Bursuc] ]
.kol-4-12[ .bold[Tuan-Hung Vu] ]
.kol-4-12[ .bold[Sharon Yixuan Li] ]
]
.grid[
.kol-4-12[ .bold[Dengxin Dai] ]
.kol-4-12[ .bold[Puneet Dokania] ]
.kol-4-12[ .bold[Patrick Pérez] ]
]

.foot[https://abursuc.github.io/many-faces-reliability/]

---
class: center, middle

## .bold[ICCV 2023 Tutorial]
## .bold[The Many Faces of Reliability of Deep Learning <br>for Real-World Deployment]
# Uncertainty Estimation and Ensemble Approaches
<br/>
.center.big.bold[Andrei Bursuc]

.center[<img src="images/logo_valeoai.png" style="width: 200px;" />]

.foot[https://abursuc.github.io/many-faces-reliability/]

---
class: middle

.bigger[Good uncertainty estimates quantify _when we can trust the model's predictions_ $\rightarrow$ they help __avoid mistakes__.]

.bigger[Uncertainty estimation is an essential function for improving the reliability and safety of systems running on ML models.]

<!-- .big[Uncertainty is often formalized as a probability distribution on the predictions rather than a single point estimate.] -->

---
class: middle, center

# Sources of uncertainty

---
class: middle, center

.big[There are two main types of uncertainty, each with its own peculiarities*]

.left.bottom[*for simplicity, we analyze here the classification case]

---
class: middle, center

## Case 1

---
count: false
class: middle

<!-- .center.width-70[] -->
.center.width-65[]

.hidden.center.bigger[__Aleatoric / Data uncertainty__]

.citation[A.
Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
class: middle

<!-- ## .center[Case 1] -->
<!-- .center.width-70[] -->
.center.width-65[]

.hidden.center.bigger[__Aleatoric / Data uncertainty__]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
count: false
class: middle

<!-- ## .center[Case 1] -->
.center.width-65[]

.center.bigger[__Aleatoric / Data uncertainty__]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
# Data/Aleatoric uncertainty

.grid[
.kol-6-12[
.center.width-80[]
]
.kol-6-12[
.center.width-80[]
]
]

.center[.bigger[Similar-looking objects also fall into this category.]]

---
class: middle

.center.width-40[]

---
class: middle

.center.width-70[]

.bigger[In urban scenes, this type of uncertainty is frequently caused by similar-looking classes:
- .italic[pedestrian - cyclist - person on a kick scooter]
- .italic[road - sidewalk]
- also at object boundaries
]

.citation[A. Kendall and Y. Gal, What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, NeurIPS 2017.]

---
class: middle

.center.width-70[]

.center.bigger[Also caused by sensor limitations: localization and recognition of far-away objects are less precise.
<br> Datasets with low-resolution images, e.g., CIFAR, also expose this ambiguity.
]

.citation[L. Bertoni et al., MonoLoco: Monocular 3D Pedestrian Localization and Uncertainty Estimation, ICCV 2019]

---
class: middle

.center.width-100[]
.caption[Samples and annotations from different graders on the LIDC-IDRI dataset.]

.center.bigger[Difficult or ambiguous samples with annotation disagreement]

.citation[S.G. Armato et al., The lung image database consortium (LIDC) and image database resource initiative (IDRI): a completed reference database of lung nodules on CT scans, Medical Physics 2011 <br> S.
Kohl et al., A Probabilistic U-Net for Segmentation of Ambiguous Images, NeurIPS 2018]

---
class: middle

## Data uncertainty

.center.width-100[]

- **Data uncertainty** is often encountered in practice due to sensor quality or natural randomness that cannot be explained by our data.
- It is uncertainty due to the properties of the data.
- It cannot be reduced with more training data (_irreducible uncertainty_), but it can be learned. It could only be reduced with better measurements.
- In layman's terms, data uncertainty is the __known unknown__.

---
class: middle, center

## Case 2

---
count: false
class: middle

<!-- ## .center[Case 2] -->
<!-- .center.width-70[] -->
.center.width-65[]

.hidden.center.bigger[__Epistemic / Knowledge uncertainty__]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
count: false
class: middle

<!-- ## .center[Case 2] -->
.center.width-65[]

.center.bigger[__Epistemic / Knowledge uncertainty__]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
class: middle

.grid[
.kol-6-12[
.big[I.I.D.: $p\_{\text{train}} (x,y) = p\_{\text{test}} (x,y)$ ]
]
.kol-6-12[
.big[O.O.D.: $p\_{\text{train}} (x,y) \neq p\_{\text{test}} (x,y)$ ]
]
]

.big[There are different forms of out-of-distribution data / distribution shift:]

.bigger[
- _covariate shift_: the input distribution $p(x)$ changes while $p(y \mid x)$ remains constant
- _label shift_: the label distribution $p(y)$ changes while $p(x \mid y)$ remains constant
- _OOD_ or _anomaly_: new object classes appear at test time
]

---
class: middle

## Domain shift

.grid[
.kol-6-12[
.center.width-100[]
]
.kol-6-12[
.center.width-100[]
.center.width-100[]
]
]

.center.bigger[Distribution shift of varying degrees is often encountered in real-world conditions]

.citation[T. Sun et al., SHIFT: A Synthetic Driving Dataset for Continuous Multi-Task Domain Adaptation, CVPR 2022 <br> P.
de Jorge et al., Reliability in Semantic Segmentation: Are We on the Right Track?, CVPR 2023]

---
class: middle

## Object-level shift

.center.width-100[]
.center.width-100[]
.center.width-100[]
.caption[Row 1: Lost&Found; Row 2: SegmentMeIfYouCan; Row 3: BRAVO synthetic objects]

.citation[P. Pinggera et al., Lost and Found: Detecting Small Road Hazards for Self-Driving Vehicles, IROS 2016 <br> R. Chan et al., SegmentMeIfYouCan: A Benchmark for Anomaly Segmentation, NeurIPS Datasets and Benchmarks 2021 <br> BRAVO Challenge 2023, https://valeoai.github.io/bravo/#challenge]

---
class: middle

## .center[Case 2 - Data scarcity]

<!-- .center.width-70[] -->
.center.width-60[]

.center.bigger[Data scarcity also causes __epistemic / knowledge uncertainty__]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
class: middle

## .center[Case 2 - Data scarcity]

.grid[
.kol-6-12[
<br><br>
.center.width-100[]
.caption[Train samples]
]
.kol-6-12[
.center.width-70[]
.caption[Test samples: unseen variations of seen classes]
]
]

.citation[S. Kreiss et al., PifPaf: Composite Fields for Human Pose Estimation, CVPR 2019]

---
exclude: true
class: middle

.center.width-90[]

.bigger[In urban scenes, knowledge uncertainty accounts for semantically challenging pixels.]

.citation[A. Kendall and Y. Gal, What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, NeurIPS 2017.]

---
class: middle

## Knowledge uncertainty

.center.width-100[]

- **Knowledge uncertainty** is caused by the lack of knowledge about the process that generated the data.
- It can be reduced with sufficient additional training data (_reducible uncertainty_).
- In layman's terms, knowledge uncertainty is the __unknown unknown__.

---
exclude: true
class: middle

.center.width-50[]

.credit[Image credit: Marcin Mozejko]

---
class: middle

.bigger[Knowing which *source* of uncertainty predominates can be useful for:
- active learning and reinforcement learning (_knowledge uncertainty_)
- new data acquisition (_knowledge uncertainty_)
- deciding to fall back to a complementary sensor, e.g., lidar or radar, or to turn on or increase the beam intensity of the headlamps, etc. (_data uncertainty_)
- switching the model to output multiple predictions for the same sample, or zooming in on tricky image areas (_data uncertainty_)
- failure detection (_total uncertainty_)
]

---
class: middle

.bigger[
The described data and knowledge uncertainty sources are **idealized**:
- In practice, real data have **both uncertainties intermingled**, accumulating into the total uncertainty.
- Similarly, models do not always satisfy the conditions for data uncertainty estimation, e.g., they can be over-confident.
]

.bigger[
Separating sources of uncertainty typically requires *Bayesian approaches*.
]

---
exclude: true
class: middle

.bigger[Measuring the quality of the uncertainty can be challenging due to the **lack of ground truth**, i.e., there is no “right answer” in some cases
]

---
class: middle

.bigger[To see how we can estimate the different types of uncertainty, it helps to reason in terms of ensembles and put on a Bayesian hat.
]

---
## Preliminaries

.bigger[
<!-- - We consider a training dataset $\mathcal{D} = \{ (x\_i, y\_i) \}_{i=1}^{n}$ with $n$ samples and labels -->
- We consider a training dataset $\mathcal{D} = \{ (x, y) \}$ with $N$ samples and labels
- Most models find a single set of parameters that maximizes the posterior probability given the data: $$\begin{aligned} \theta^{*} &= \arg \max\_{\theta} p (\theta \mid x, y) \\\\ &= \arg \max\_{\theta} \sum\_{x, y \in \mathcal{D}} \log p (y \mid x, \theta) + \log p (\theta)\\\\ &= \arg \min\_{\theta} - \sum\_{x, y \in \mathcal{D}} \log p (y \mid x, \theta) - \log p (\theta) \end{aligned}$$
- _The probabilistic approach_: estimate a full distribution for $p(\theta \mid x, y)$
- _The ensembling approach_: find multiple good sets of parameters $\theta^{*}$
]

---
exclude: true
## Ensembles preliminaries

.bigger[
- We consider a training dataset $\mathcal{D} = \{ (x\_i, y\_i) \}\_{i=1}^{n}$ with $n$ samples and labels
- $f\_{\theta}(\cdot)$ is a neural network with parameters $\theta$
- We view $f$ as a probabilistic model with $f\_{\theta}(x\_i)=P(y\_i \mid x\_i,\theta)$.
- The model posterior $p(\theta \mid \mathcal{D})$ captures the uncertainty in $\theta$: $$p(\theta \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \theta) p(\theta)}{p(\mathcal{D})} $$
- From $p(\theta \mid \mathcal{D})$ we can sample an ensemble of models: $$ \\{P(y \mid x, \theta\_m)\\}\_{m=1}^{M}, \theta\_m \sim p(\theta \mid \mathcal{D})$$
- For prediction, we use Bayesian inference: $$P(y \mid x, \mathcal{D}) = \mathbb{E}\_{p(\theta \mid \mathcal{D})} [P(y \mid x, \theta)] \approx \frac{1}{M} \sum\_{m=1}^M P( y \mid x, \theta\_m), \theta\_m \sim p(\theta \mid \mathcal{D})$$
]

---
## Ensembles preliminaries

.bigger[
- We view our network as a probabilistic model with $P(y=c \mid x\_{test},\theta)$
- The model posterior $p(\theta \mid \mathcal{D})$ captures the uncertainty in $\theta$, and we estimate it during _training_: $$p(\theta \mid \mathcal{D}) = \frac{p(\mathcal{D} \mid \theta) p(\theta)}{p(\mathcal{D})} $$
- From $p(\theta \mid \mathcal{D})$ we can sample an ensemble of models: $$ \\{P(y \mid x\_{test}, \theta\_m)\\}\_{m=1}^{M}, \theta\_m \sim p(\theta \mid \mathcal{D})$$
- For _prediction_, we use Bayesian inference: $$P(y \mid x\_{test}, \mathcal{D}) = \mathbb{E}\_{p(\theta \mid \mathcal{D})} [P(y \mid x\_{test}, \theta)] \approx \frac{1}{M} \sum\_{m=1}^M P( y \mid x\_{test}, \theta\_m), \theta\_m \sim p(\theta \mid \mathcal{D})$$
]

---
class: middle

## Total uncertainty

.bigger[
- The _total uncertainty_ is the combination of __data uncertainty__ and __knowledge uncertainty__
- We can compute it as the entropy of the _predictive posterior_: $$\begin{aligned} \mathcal{H}[P(y \mid x\_{test}, \mathcal{D})] & = \mathcal{H}[\mathbb{E}\_{p(\theta \mid \mathcal{D})}[P( y \mid x\_{test}, \theta)]] \\\\ & \approx \mathcal{H}[\frac{1}{M} \sum\_{m=1}^M P( y \mid x\_{test}, \theta\_m)], \theta\_m \sim p(\theta \mid \mathcal{D}) \\\\ \end{aligned}$$
<!-- % $$\mathcal{H}[P(y \mid x, \mathcal{D})] \approx \mathcal{H}[\frac{1}{M} \sum_{m=1}^M P( y \mid x, \theta\_m)], \theta\_m \sim p(\theta \mid \mathcal{D})$$ -->
]
.citation[A. Mobiny et al., DropConnect Is Effective in Modeling Uncertainty of Bayesian Deep Networks, Nature Scientific Reports 2021 <br> A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019 <br> S. Depeweg et al., Decomposition of Uncertainty in Bayesian Deep Learning for Efficient and Risk-sensitive Learning, ICML 2018]

---
class: middle

## Data uncertainty

.bigger[
- Under certain conditions and assumptions (_sufficient capacity, training iterations and training data_), models with probabilistic outputs capture estimates of data uncertainty
- The _expected data uncertainty_ is: $$\mathbb{E}\_{p(\theta \mid \mathcal{D})} [\mathcal{H}[P(y \mid x\_{test}, \theta)]] \approx \frac{1}{M} \sum\_{m=1}^{M} \mathcal{H}[P( y \mid x\_{test}, \theta\_m)], \theta\_m \sim p(\theta \mid \mathcal{D})$$
- Each model $P(y \mid x\_{test}, \theta\_m)$ captures an estimate of the data uncertainty
]

.citation[A. Mobiny et al., DropConnect Is Effective in Modeling Uncertainty of Bayesian Deep Networks, Nature Scientific Reports 2021 <br> A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019 <br> S.
Depeweg et al., Decomposition of Uncertainty in Bayesian Deep Learning for Efficient and Risk-sensitive Learning, ICML 2018]

---
class: middle

## Knowledge uncertainty

.bigger[
- We can obtain a measure of _knowledge uncertainty_ as the difference between the _total uncertainty_ and the _data uncertainty_, i.e., the **mutual information**: $$\underbrace{\mathcal{MI}[y, \theta \mid x\_{test}, \mathcal{D}]}\_{\text{knowledge uncertainty}} = \underbrace{\mathcal{H}[P(y \mid x\_{test}, \mathcal{D})]}\_{\text{total uncertainty}} - \underbrace{\mathbb{E}\_{p(\theta \mid \mathcal{D})} [\mathcal{H}[P(y \mid x\_{test}, \theta)]]}\_{\text{data uncertainty}} $$
- Intuitively, knowledge uncertainty captures the amount of information about the model parameters $\theta$ that would be gained by observing the true outcome $y$
- Mutual information is essentially a measure of the diversity (disagreement) of the ensemble members
]

.citation[A. Mobiny et al., DropConnect Is Effective in Modeling Uncertainty of Bayesian Deep Networks, Nature Scientific Reports 2021 <br> A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019 <br> S. Depeweg et al., Decomposition of Uncertainty in Bayesian Deep Learning for Efficient and Risk-sensitive Learning, ICML 2018]

---
class: middle

.center.width-45[]

.center.bigger[Diversity is an essential property for knowledge uncertainty]

---
class: middle

## Ensemble predictions on a simplex

.center.width-100[]

.bigger[
- 3-way classification task
- each point represents the prediction of one ensemble member
]

.citation[A. Malinin, Uncertainty Estimation in Deep Learning with application to Spoken Language Assessment, PhD Thesis 2019]

---
class: middle

.bigger[Thanks to their appealing properties for _uncertainty estimation and separation_, ensembles are one of the key approaches for this task.]
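---
class: middle

The decomposition into total, data and knowledge uncertainty takes only a few lines once the member predictions are available. Below is a minimal sketch in plain Python (an illustration, not code from the tutorial), assuming each of the $M$ ensemble members has already produced a categorical distribution over the classes for one test input; `entropy` and `decompose_uncertainty` are hypothetical names, and entropies are in nats.

```python
import math
from typing import List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy (nats) of a categorical distribution; 0 * log 0 is taken as 0."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0.0)


def decompose_uncertainty(member_probs: List[List[float]]) -> Tuple[float, float, float]:
    """Split the uncertainty of an ensemble on a single test input.

    member_probs[m][c] approximates P(y = c | x_test, theta_m) for member m.
    Returns (total, data, knowledge):
      total     = H[(1/M) sum_m P(y | x, theta_m)]   entropy of the predictive posterior
      data      = (1/M) sum_m H[P(y | x, theta_m)]   expected data uncertainty
      knowledge = total - data                       mutual information
    """
    num_members = len(member_probs)
    num_classes = len(member_probs[0])
    # Predictive posterior: average of the member distributions
    mean_probs = [sum(p[c] for p in member_probs) / num_members for c in range(num_classes)]
    total = entropy(mean_probs)
    data = sum(entropy(p) for p in member_probs) / num_members
    knowledge = total - data
    return total, data, knowledge


if __name__ == "__main__":
    # Members are confident but disagree: knowledge uncertainty dominates
    print(decompose_uncertainty([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]))
    # Members agree on an ambiguous prediction: data uncertainty dominates
    print(decompose_uncertainty([[1 / 3, 1 / 3, 1 / 3]] * 4))
```

Members that are confident but disagree yield zero data uncertainty and a knowledge uncertainty of $\log 3$, while members that agree on a uniform prediction yield maximal data uncertainty and zero knowledge uncertainty. Because entropy is concave, the mutual information is never negative, up to floating-point error.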
---
class: middle, center

# Ensemble approaches

---
class: middle

.grid[
.kol-6-12[
.center.width-90[]
.caption[Face detection with AdaBoost]
]
.kol-6-12[
<br>
<br>
<br>
.center.width-80[]
.caption[Kaggle competitions]
]
]

.center.bigger[Ensemble methods have been achieving state-of-the-art results for decades.]

.citation[P. Viola and M. Jones, Rapid object detection using a boosted cascade of simple features, CVPR 2001]

---
class: middle

.grid[
.kol-6-12[
<br>
<br>
.center.width-80[]
.caption[Reverend Bayes]
]
.kol-6-12[
.center.width-60[]
.caption[The Stig]
]
]

.center.bigger[Inspiring theoreticians and high-performance seekers alike.*]

.bottom.left.smaller[*Depending on the community, one method is frequently regarded as a special case of the other under different assumptions. Mind that Bayesian approaches and ensembles have different mindsets; however, the two often inspire each other.
]

---
class: middle, center

## .bigger[Bayesian Neural Networks (BNNs)]

---
class: middle

.grid[
.kol-6-12[
.center.bold[Standard Neural Network]
.center.width-80[]
]
.kol-6-12[
.center.bold[Bayesian Neural Network]
.center.width-80[]
]
]

.grid[
.kol-6-12[
- Parameters represented by _single, fixed values (point estimates)_
- Conventional approaches to training NNs can be interpreted as _approximations_ to the full Bayesian method (equivalent to MLE or MAP estimation)
]
.kol-6-12[
- Parameters represented by _distributions_
- With Gaussian distributions: each parameter is described by a pair $(\mu, \sigma)$ (.red[2x more parameters])
]
]

.citation[C. Blundell et al., Weight Uncertainty in Neural Networks, ICML 2015]

---
class: middle

.bigger[Bayesian Neural Networks (BNNs) are *easy to formulate*, but notoriously **difficult** to perform inference in.]

.bigger[Modern BNNs are typically trained with variational inference (_reparameterization trick_), assuming independence between parameters.]
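---
class: middle

The reparameterization trick used in variational BNN training can be illustrated on a single layer. The sketch below is a hypothetical, framework-free illustration in the spirit of Bayes by Backprop (Blundell et al., ICML 2015): it assumes a mean-field Gaussian posterior per weight, parameterized by a mean $\mu$ and a value $\rho$ with $\sigma = \log(1 + e^{\rho})$, and a zero-mean Gaussian prior. `BayesianLinear`, `prior_sigma` and the initial values are assumptions; the gradient step is omitted, since in practice an autograd framework would minimize the expected negative log-likelihood plus the KL term returned by `kl_to_prior`.

```python
import math
import random
from typing import List


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x)); maps rho to a positive sigma."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


class BayesianLinear:
    """Hypothetical mean-field Gaussian linear layer (no bias, no autograd).

    Every weight has a variational mean mu and a parameter rho with
    sigma = softplus(rho), i.e. two parameters per weight.
    """

    def __init__(self, n_in: int, n_out: int, prior_sigma: float = 1.0, seed: int = 0):
        self.rng = random.Random(seed)
        self.prior_sigma = prior_sigma
        self.mu = [[self.rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.rho = [[-3.0] * n_in for _ in range(n_out)]  # small initial sigma

    def sample_weights(self) -> List[List[float]]:
        # Reparameterization trick: w = mu + sigma * eps with eps ~ N(0, 1),
        # so the randomness sits in eps and w is a differentiable function of (mu, rho).
        return [
            [m + softplus(r) * self.rng.gauss(0.0, 1.0) for m, r in zip(mu_row, rho_row)]
            for mu_row, rho_row in zip(self.mu, self.rho)
        ]

    def forward(self, x: List[float]) -> List[float]:
        """One stochastic forward pass with freshly sampled weights."""
        w = self.sample_weights()
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

    def kl_to_prior(self) -> float:
        """Closed-form KL(q(w) || N(0, prior_sigma^2)), summed over all weights."""
        s = self.prior_sigma
        kl = 0.0
        for mu_row, rho_row in zip(self.mu, self.rho):
            for m, r in zip(mu_row, rho_row):
                sigma = softplus(r)
                kl += math.log(s / sigma) + (sigma ** 2 + m ** 2) / (2 * s ** 2) - 0.5
        return kl
```

Calling `forward` several times on the same input samples different weights each time, so the outputs form an ensemble drawn from the approximate posterior; storing both `mu` and `rho` for every weight is where the 2x parameter count comes from.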
---
class: middle

.center.width-85[]
.caption[Subnetwork inference]

.bigger[Recent methods treat only the _last layer as Bayesian_ (Laplace Redux) or _a subset of the parameters across layers as Bayesian_, with some inter-dependence between parameters (LP-BNN, Subnetwork Inference).]

.citation[E. Daxberger et al., Laplace Redux -- Effortless Bayesian Deep Learning, NeurIPS 2021 <br> G. Franchi et al., Encoding the latent posterior of Bayesian Neural Networks for uncertainty quantification, arXiv 2020 <br> E. Daxberger et al., Bayesian Deep Learning via Subnetwork Inference, ICML 2021]

---
# Deep Ensembles

.grid[
.kol-6-12[
.bigger[
.bold[Idea:] Run regular SGD training with different random seeds. At test time, average the predictions.

.bigger.green[Pros]
- extremely simple
- top performance across benchmarks
- agnostic to the underlying architecture

.bigger.red[Cons]
- computational cost grows linearly with the number of members, both for training and testing
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[B. Lakshminarayanan et al., Simple and Scalable Predictive Uncertainty Estimation using Deep Ensembles, NeurIPS 2017]

---
class: middle

.bigger[_Deep Ensembles_ have long been SOTA across tasks and benchmarks in terms of **predictive performance and uncertainty estimation (diversity)**.]

.bigger[While they are used heavily for offline computation (e.g., pseudo-labeling, active learning, etc.), their computational cost is **prohibitive for real-time decision systems**.]

.bigger[They have inspired numerous subsequent works that aim to reduce the _computational cost (memory, runtime) during training and/or testing_, the _size and number of networks needed_, etc., while preserving as many of the desirable properties of ensembles as possible.]
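---
class: middle

The Deep Ensembles recipe (train the same model several times with different random seeds, then average the predicted probabilities) can be sketched on a toy problem. This is a hypothetical illustration, not the original authors' code: a 1-D logistic regression stands in for the deep network, the six-point dataset is made up, and `train_member` / `ensemble_predict` are assumed names. The seed controls both the initialization and the order in which training samples are visited.

```python
import math
import random
from typing import List, Tuple

Member = Tuple[float, float]  # (weight, bias) of a 1-D logistic regression


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_member(data: List[Tuple[float, int]], seed: int,
                 epochs: int = 200, lr: float = 0.1) -> Member:
    """Train one member with plain SGD; the seed sets both the init and the data order."""
    rng = random.Random(seed)
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            p = sigmoid(w * x + b)
            # Gradients of the binary cross-entropy w.r.t. w and b: (p - y) * x and (p - y)
            w -= lr * (p - y) * x
            b -= lr * (p - y)
    return w, b


def ensemble_predict(members: List[Member], x: float) -> float:
    """Deep Ensemble prediction: average of the members' P(y = 1 | x)."""
    return sum(sigmoid(w * x + b) for w, b in members) / len(members)


if __name__ == "__main__":
    data = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (1.0, 1), (1.5, 1), (2.0, 1)]
    members = [train_member(data, seed=s) for s in range(5)]  # M = 5 independent runs
    for x in (-3.0, 0.0, 3.0, 10.0):
        probs = [sigmoid(w * x + b) for w, b in members]
        print(x, round(ensemble_predict(members, x), 3), [round(p, 3) for p in probs])
```

On this linearly separable toy data all members extrapolate confidently and agree far from the data, so the sketch only shows the averaging mechanics; the diversity benefits reported for Deep Ensembles come from deep, non-convex models. Training cost still grows linearly with the number of members.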
---
class: middle, center

# A quick tour of deep ensemble variants

---
exclude: true
class: middle, center

## .bigger[Ensembles from $1$ single training run and <br> $1$ network budget]

---
class: middle, center

## .bigger[Ensembles from $1$ single training run]

---
# Snapshot Ensembles

.grid[
.kol-6-12[
.bigger[
.bold[Idea:] Collect checkpoints at the end of each cyclic learning rate cycle and use them as an ensemble

.bigger.green[Pros]
- relatively simple to set up
- good predictive performance
- low computational cost at training

.bigger.red[Cons]
- still an ensemble at test time: multiple networks, multiple forwards
- limited diversity in the predictions
- some instability if checkpoints are sampled from early training steps
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[G. Huang et al., Snapshot Ensembles: Train 1, get M for free, ICLR 2017]

---
count: false
# Snapshot Ensembles

.grid[
.kol-6-12[
.bigger[
.bold[Idea:] Collect checkpoints at the end of each cyclic learning rate cycle and use them as an ensemble

.bigger.green[Pros]
- relatively simple to set up
- good predictive performance
- low computational cost at training

.bigger.red[Cons]
- still an ensemble at test time: multiple networks, multiple forwards
- limited diversity in the predictions
- some instability if checkpoints are sampled from early training steps
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[G. Huang et al., Snapshot Ensembles: Train 1, get M for free, ICLR 2017]

---
class: middle, center

## .bigger[BNNs from $1$ single training run]

---
# SWAG

.grid[
.kol-6-12[
.bigger[
<br>
.bold[Idea:] Use checkpoints to estimate a distribution over the network parameters.
- _training_: collect checkpoints at the end of cyclic LR cycles.
- _test time_: sample an ensemble from the final distribution and run multiple forwards.
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[W.
Maddox et al., A Simple Baseline for Bayesian Uncertainty in Deep Learning, NeurIPS 2019]

---
count: false
# SWAG

.grid[
.kol-6-12[
.bigger[
<br>
.bigger.green[Pros]
- relatively simple to set up
- good predictive performance and faster training than Deep Ensembles (single run)

.bigger.red[Cons]
- needs many epochs to collect checkpoints
- still an ensemble at test time: multiple networks, multiple forwards
- limited diversity in the predictions
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[W. Maddox et al., A Simple Baseline for Bayesian Uncertainty in Deep Learning, NeurIPS 2019]

---
# TRADI

.grid[
.kol-6-12[
<br>
.bigger[
.bold[Idea:] Track the parameters along their optimization trajectory from initialization towards a minimum.
- _training_: use a Kalman filter to compute a per-parameter distribution.
- _test time_: sample an ensemble from the final distribution and run multiple forwards.
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[G. Franchi et al., TRADI: Tracking deep neural network weight distributions for uncertainty estimation, ECCV 2020]

---
count: false
# TRADI

.grid[
.kol-6-12[
<br>
.bigger[
.bigger.green[Pros]
- good predictive performance
- very low computational overhead and impact on training

.bigger.red[Cons]
- still an ensemble at test time: multiple networks, multiple forwards
- limited diversity in the predictions
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[G. Franchi et al., TRADI: Tracking deep neural network weight distributions for uncertainty estimation, ECCV 2020]

---
class: middle, center

## .bigger[Multiple forwards over $1$ network]

---
# Test-time augmentation

.grid[
.kol-6-12[
<br>
.bigger[
.bold[Idea:] Leverage data augmentations at test time to mimic an ensemble
- _training_: no change
- _test_: apply different data augmentations to the input and run multiple forwards
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[A.
Ashukha et al., Pitfalls of In-Domain Uncertainty Estimation and Ensembling in Deep Learning, ICLR 2020]

---
count: false
# Test-time augmentation

.grid[
.kol-6-12[
.bigger[
.bigger.green[Pros]
- very simple
- boosts predictive performance
- 1 network at test time

.bigger.red[Cons]
- multiple forwards at test time
- limited diversity in the predictions
- works better if the network is calibrated
- tricky under distribution shifts
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[A. Ashukha et al., Pitfalls of In-Domain Uncertainty Estimation and Ensembling in Deep Learning, ICLR 2020]

---
# MC-Dropout

.grid[
.kol-6-12[
<br>
.bigger[
.bold[Idea:] Use Dropout during training, which implicitly trains multiple subnetwork configurations.
- _training_: Dropout layers active
- _test_: keep Dropout active and forward with multiple Dropout masks
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[Y. Gal & Z. Ghahramani, Dropout as a Bayesian approximation: Representing model uncertainty in deep learning, ICML 2016]

---
count: false
# MC-Dropout

.grid[
.kol-6-12[
.bigger[
.bigger.green[Pros]
- simple to train
- good predictive performance
- 1 network at test time

.bigger.red[Cons]
- multiple forwards at test time
- limited diversity in the predictions
- potentially cumbersome on conv layers
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[Y. Gal & Z. Ghahramani, Dropout as a Bayesian approximation: Representing model uncertainty in deep learning, ICML 2016]

---
# Masksembles

.grid[
.kol-6-12[
.bigger[
.bold[Idea:] Use a fixed set of masks that select promising subnetworks of a network.
- _training_: like Dropout, but with fixed masks.
- _test_: one forward per mask.
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[N.
Durasov et al., Masksembles for Uncertainty Estimation, CVPR 2021]

---
count: false
# Masksembles

.grid[
.kol-6-12[
.bigger[
.bigger.green[Pros]
- simple to train
- good predictive performance
- 1 network at test time

.bigger.red[Cons]
- multiple forwards at test time
- limited diversity in the predictions
- potentially cumbersome on conv layers
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[N. Durasov et al., Masksembles for Uncertainty Estimation, CVPR 2021]

---
class: middle, center

## .bigger[Ensemble-like architectures with $1$ forward]

---
# MIMO (Multi-Input Multi-Output)

.grid[
.kol-6-12[
.bigger[
<br><br>
.bold[Idea:] Harness multiple subnetwork paths learned implicitly by large networks
- _training_: different images forwarded together, one per input head
- _test_: forward copies of the same image through all input heads
]
]
.kol-6-12[
.center.width-80[]
.center.width-80[]
]
]

.citation[M. Havasi et al., Training independent subnetworks for robust prediction, ICLR 2021 <br> S. Lee et al., Why M Heads are Better than One: Training a Diverse Ensemble of Deep Networks, arXiv 2015]

---
count: false
# MIMO (Multi-Input Multi-Output)

.grid[
.kol-6-12[
.bigger[
.bigger.green[Pros]
- simple to train
- good predictive performance
- minor computational overhead

.bigger.red[Cons]
- limited diversity in the predictions
- subnetworks not independent
- tends to saturate with an increasing number of subnetworks
]
]
.kol-6-12[
.center.width-80[]
.center.width-80[]
]
]

.citation[M. Havasi et al., Training independent subnetworks for robust prediction, ICLR 2021 <br> S. Lee et al., Why M Heads are Better than One: Training a Diverse Ensemble of Deep Networks, arXiv 2015]

---
# BatchEnsemble

.grid[
.kol-6-12[
.bigger[
.bold[Idea:] Parameterize the weight matrix of each ensemble member $i$ as $\overline{W}\_i = W \circ (\mathbf{r}\_i \mathbf{s}\_i^{\top})$, i.e., a shared weight matrix $W$ multiplied element-wise by the outer product of two vectors $\mathbf{r}\_i$ and $\mathbf{s}\_i$. Each ensemble member has its own pair of $\mathbf{r}$ and $\mathbf{s}$ vectors.
- _training_: single forward
- _test_: single forward
]
]
.kol-6-12[
.center.width-80[]
]
]

.citation[Y. Wen et al., BatchEnsemble: an alternative approach to efficient ensemble and lifelong learning, ICLR 2020]

---
count: false
# BatchEnsemble

.grid[
.kol-6-12[
.bigger[
.bigger.green[Pros]
- excellent computational trade-off
- good predictive performance

.bigger.red[Cons]
- limited diversity in the predictions
- subnetworks not independent
]
]
.kol-6-12[
.center.width-80[]
]
]

.citation[Y. Wen et al., BatchEnsemble: an alternative approach to efficient ensemble and lifelong learning, ICLR 2020]

---
# Packed-Ensembles

.center.width-70[]
.caption[a) Single network; b) Deep Ensembles; c) Packed-Ensembles]

.bigger[
.bold[Idea:] Leverage grouped convolutions to separate independent subnetworks trained together within the envelope of a single larger model.
]

.citation[O. Laurent & A. Lafage et al., Packed-Ensembles for Efficient Uncertainty Estimation, ICLR 2023]

---
# Packed-Ensembles

.center[
<video width="854" height="480" autoplay no-controls muted>
<source src="images/packed-ensembles.mp4" type="video/mp4">
</video>
]

<!-- .bigger[ .bold[Idea:] Leverage grouped convolutions to separate independent subnetworks trained together in the envelope of a single larger model. ] -->

.citation[O. Laurent & A. Lafage et al., Packed-Ensembles for Efficient Uncertainty Estimation, ICLR 2023]

---
class: middle, center

# Performance comparison

---
# Image classification

.center.width-70[]
.caption[Performance comparison (averaged over five runs) on CIFAR-10/100 using the ResNet-50 (R50) architecture. <br> All ensembles have M = 4 subnetworks; the best performances are highlighted in bold.
]

---
count: false
# Image classification

.center.width-70[]
.caption[Performance comparison (averaged over five runs) on CIFAR-10/100 using the ResNet-50 (R50) architecture. <br> All ensembles have M = 4 subnetworks; the best performances are highlighted in bold.
]

.bigger[
- Independent ensembles achieve better performance
- Packed-Ensembles does so with a lower computational budget
]

---
# Image classification

.center.width-80[]
.caption[Performance comparison on ImageNet using ResNet-50 (R50) and ResNet-50x4 (R50x4). <br> All ensembles have M = 4 subnetworks. The best performances are highlighted in bold.]

---
count: false
# Image classification

.center.width-80[]
.caption[Performance comparison on ImageNet using ResNet-50 (R50) and ResNet-50x4 (R50x4). <br> All ensembles have M = 4 subnetworks. The best performances are highlighted in bold.]

.bigger[
- Single networks and ensembles of partially inter-dependent subnetworks do better in in-domain evaluation
]

---
count: false
# Image classification

.center.width-80[]
.caption[Performance comparison on ImageNet using ResNet-50 (R50) and ResNet-50x4 (R50x4). <br> All ensembles have M = 4 subnetworks. The best performances are highlighted in bold.]

.bigger[
- Independent ensembles generalize better to distribution shifts, making better use of the knowledge learned from the ImageNet training data
]

---
class: middle

.center.width-60[]
.caption[Evaluation of computational cost vs. performance trade-offs for multiple uncertainty quantification techniques on CIFAR-100.
]

.citation[O. Laurent & A. Lafage et al., Packed-Ensembles for Efficient Uncertainty Estimation, ICLR 2023]

---
# Semantic Segmentation

.center.width-60[]
.caption[Performance comparison on OOD detection for semantic segmentation on the StreetHazards dataset]

.center.width-60[]
.caption[Performance comparison under distribution shift (rain, fog, corruptions) for semantic segmentation. Segmentation model: DeepLabv3+ with a ResNet50 encoder]

.bigger[
- Behaviors on semantic segmentation do not always mirror those on image classification
- Deep Ensembles perform best on OOD detection across metrics
- Under distribution shift, other methods are close behind
]

---
class: middle

## .center[Where does diversity come from?]

.center.width-70[]
.caption[Performance w.r.t.
different stochasticity sources on CIFAR-100. SVHN is used as OOD. <br> .bold[ND] corresponds to the use of non-deterministic backpropagation algorithms, <br>.bold[DI] to different initializations, and <br> .bold[DB] to different compositions of the batches.
]

.bigger[
Non-deterministic algorithms alone introduce enough diversity between the subnetworks of the ensemble.
]

.citation[O. Laurent & A. Lafage et al., Packed-Ensembles for Efficient Uncertainty Estimation, ICLR 2023]

---
class: middle, center

# Recent trends

---
# Weight averaging

.grid[
.kol-6-12[
<br>
.bigger[
.bold[Idea:] Average multiple checkpoints along the SGD trajectory with a cyclic learning rate. This leads to better generalization.
- _training_: collect checkpoints at the end of cyclic LR cycles and then average them
- _test time_: single forward.
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[P. Izmailov et al., Averaging Weights Leads to Wider Optima and Better Generalization, UAI 2018]

---
# Model soups

.grid[
.kol-6-12[
<br>
.bigger[
- The idea has been revisited in the context of foundation models, which offer a good starting point
- Checkpoints come from different finetunings: different hyperparameters, data orders, augmentations
- .bold[Requirement]: the weights should remain linearly connected
- Boosts performance under distribution shift, but loses in calibration
]
]
.kol-6-12[
.center.width-100[]
]
]

.citation[M. Wortsman et al., Model soups: averaging weights of multiple fine-tuned models improves accuracy without increasing inference time, ICML 2022 <br> J. Frankle et al., Linear mode connectivity and the lottery ticket hypothesis, ICML 2020 <br> T. Garipov et al., Loss Surfaces, Mode Connectivity, and Fast Ensembling of DNNs, NeurIPS 2018]

---
# Diverse model soups

.center.width-75[]
.caption[Different finetuning strategies for model soups.
.bold[Thin solid arrows:] auxiliary finetuning; .bold[thick solid arrow:] finetuning on the target task; .bold[dashed arrows:] weight averaging.
]

.bigger[
- The utility of diversity emerges again: diversity in weight averaging (WA) reduces variance, which dominates under distribution shift
- Model ratatouille:
  + recycle multiple finetunings on auxiliary tasks
  + launch multiple finetunings on the target task with different inits
  + average all finetuned weights
]

.citation[A. Ramé et al., Diverse Weight Averaging for Out-of-Distribution Generalization, NeurIPS 2022 <br> A. Ramé et al., Model Ratatouille: Recycling Diverse Models for Out-of-Distribution Generalization, ICML 2023]

---
# Ensembles in vision-language models

.center.width-80[]

.bigger[
- Emergence of different ensembling strategies on the text-encoder side
]

.citation[J. U. Allingham et al., A Simple Zero-shot Prompt Weighting Technique to Improve Prompt Ensembling in Text-Image Models, ICML 2023]

---
# Wrap-up

.bigger[
- Understanding the different sources of uncertainty can be useful for different actions and applications
- Ensembles are better equipped to separate sources of uncertainty
- Diversity is a key property for ensemble models
- Many emerging computationally efficient alternatives to ensembles mimic ensemble properties. These contenders often underperform Deep Ensembles or are specific to a type of architecture
- Model soups: a new approach to harness the power of foundation models in $1$ forward pass
- Vision-language models: first text-based ensembles
]

---
layout: false
class: end-slide, center
count: false

The end.

---
exclude: true

## Preliminaries

- We consider a training dataset $\mathcal{D} = \{ (x\_i, y\_i) \}\_{i=1}^{n}$ with $n$ samples and labels
- $f\_{\theta}(\cdot)$ is a neural network with parameters $\theta$ that outputs a classification or regression prediction
- We view the neural network as a probabilistic model with $f\_{\theta}(x\_i)=P(Y=y\_i \mid X=x\_i,\theta)$.
- In supervised learning, we optimize $\theta$ by minimizing the cross-entropy loss, which is equivalent to maximum likelihood estimation (MLE): $$\mathcal{L}\_{\scriptscriptstyle \text{MLE}}(\theta) = - \sum\_{(x\_i,y\_i) \in \mathcal{D}} \log P(y\_i \mid x\_i,\theta).$$
- The Bayesian approach enables adding prior information on the parameters $\theta$ by placing a prior distribution $P(\theta)$ over them; we can then find the maximum a posteriori (MAP) weights by minimizing: $$\mathcal{L}\_{\scriptscriptstyle \text{MAP}}(\theta) = - \sum\_{(x\_i,y\_i) \in \mathcal{D}} \log P(y\_i \mid x\_i,\theta) - \log P(\theta)$$