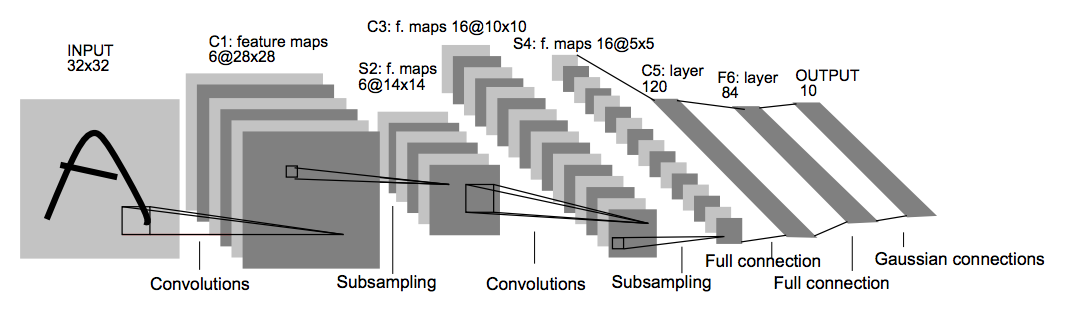

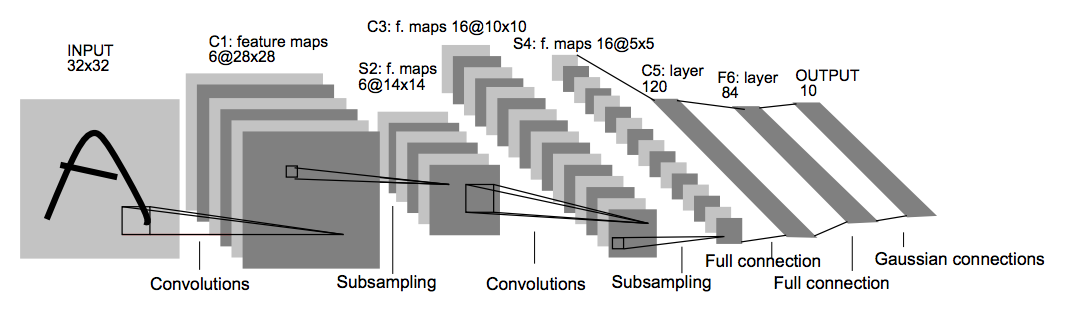

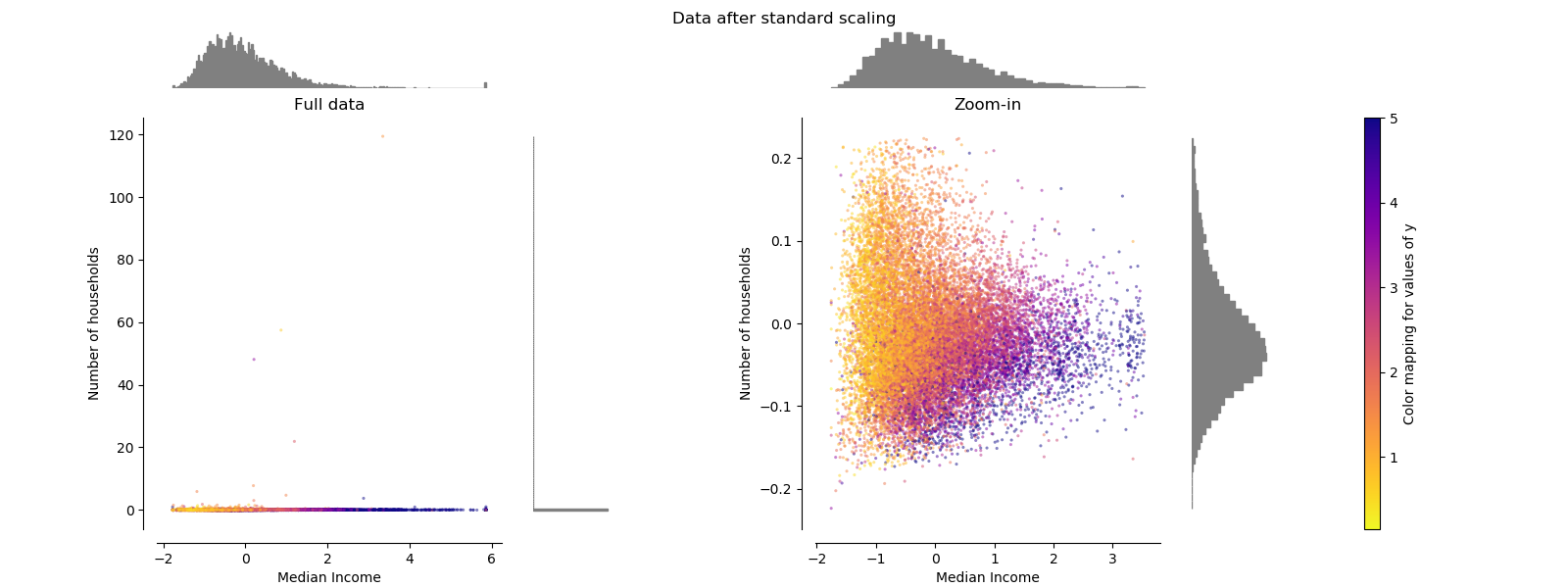

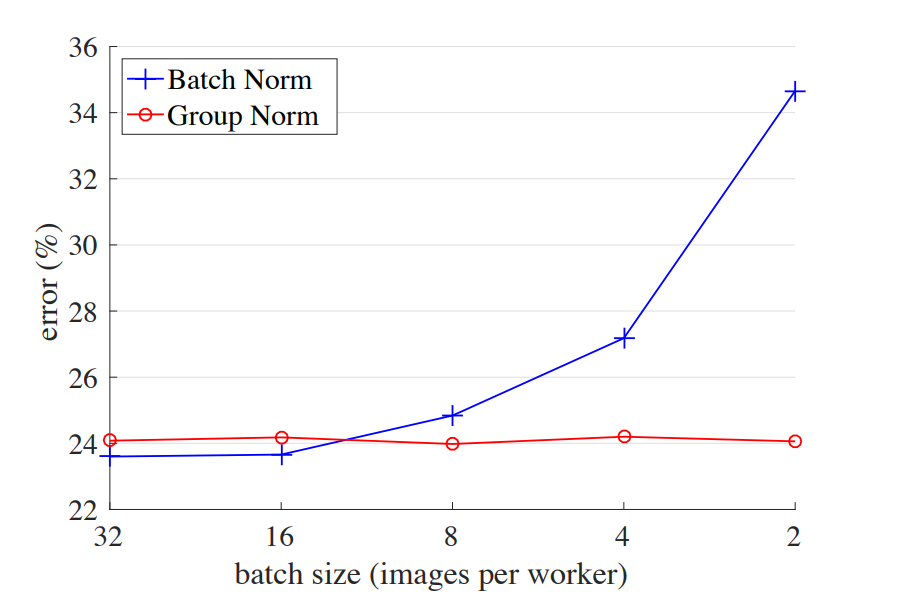

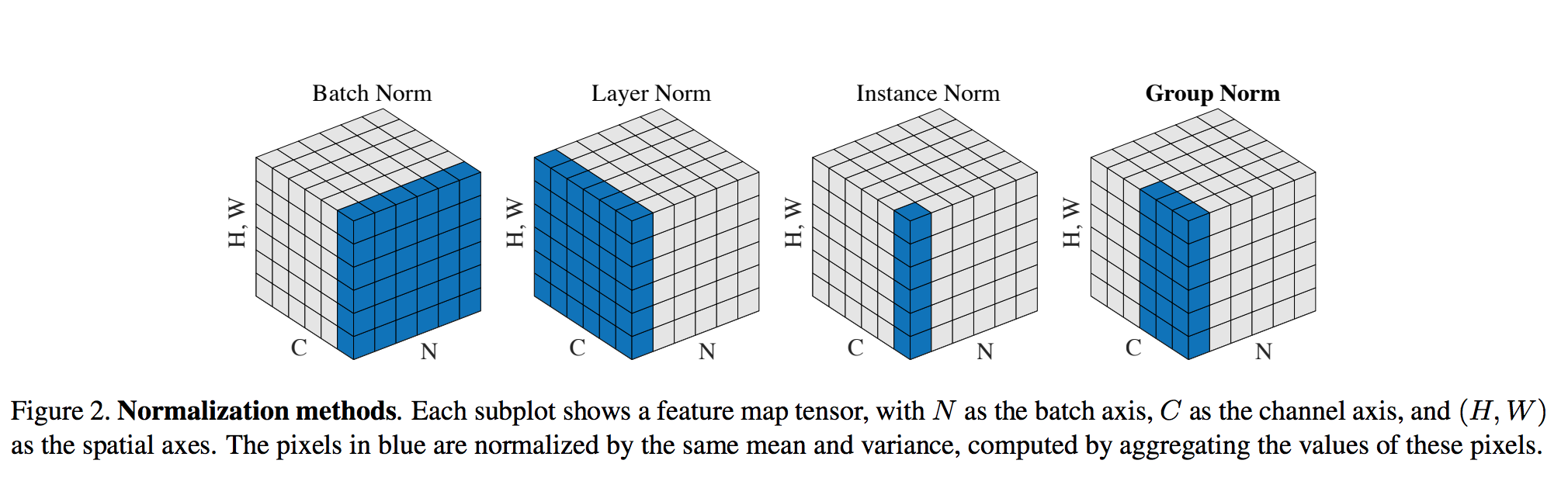

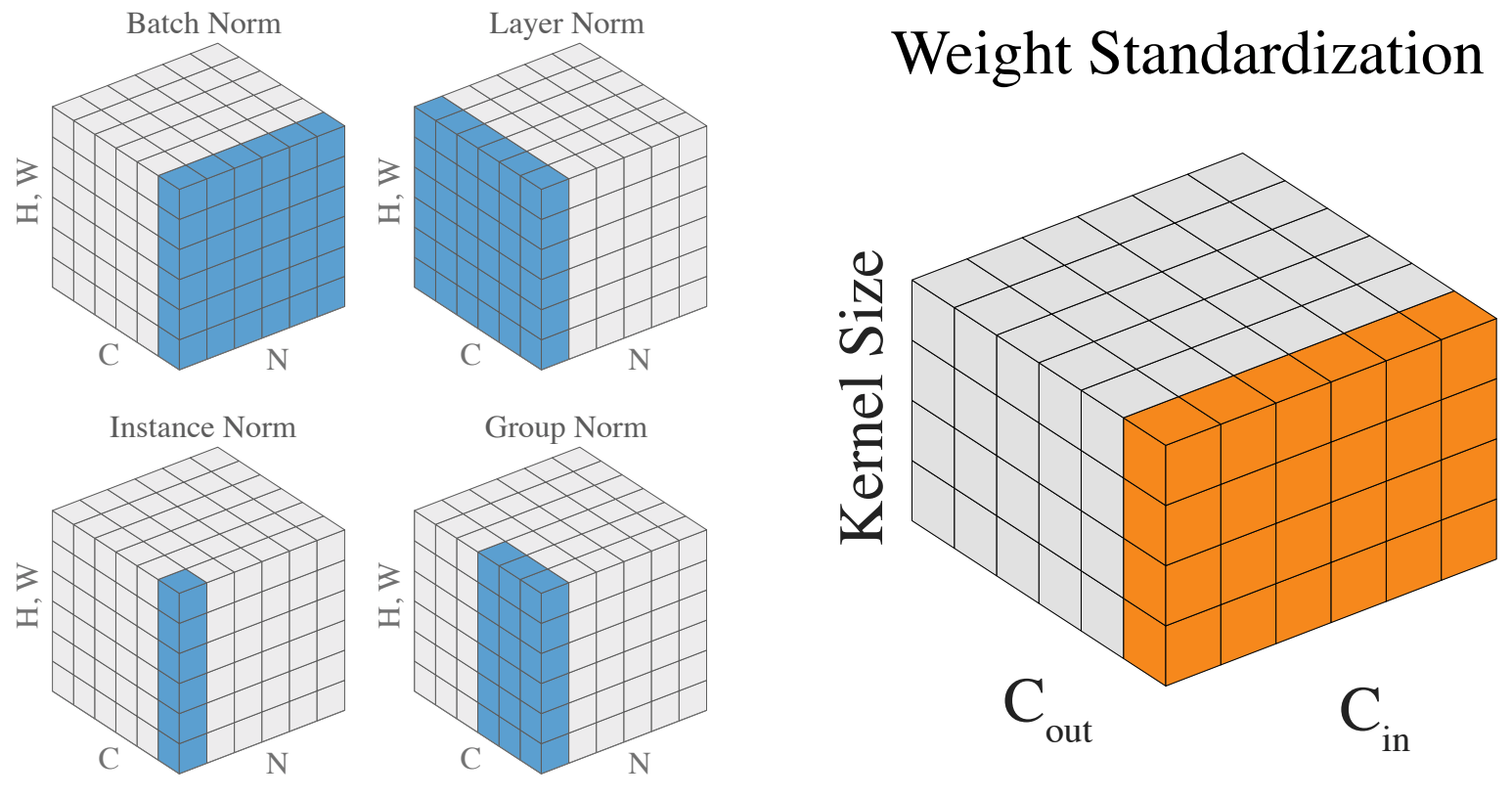

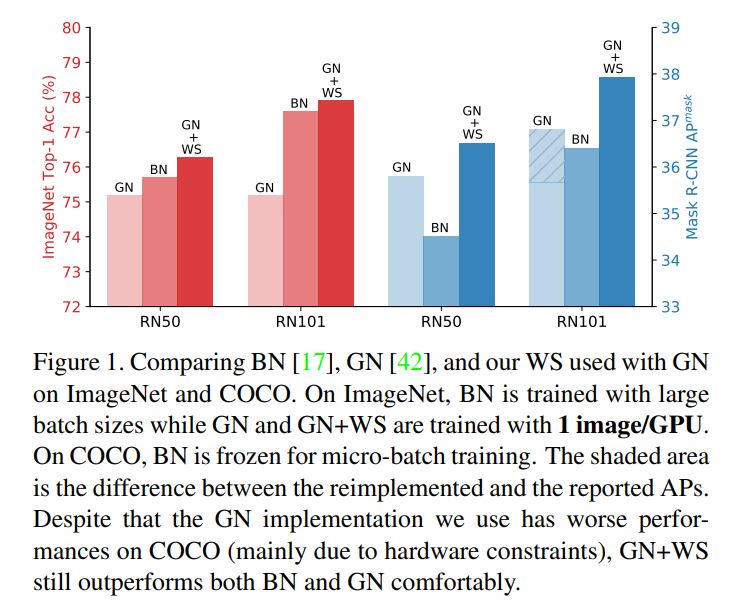

layout: true .center.footer[Marc LELARGE and Andrei BURSUC | Deep Learning Do It Yourself | 14.4 BatchNorm] --- class: center, middle, title-slide count: false ## Going Deeper # 14.4 BatchNorm <br/> <br/> .bold[Andrei Bursuc ] <br/> url: https://dataflowr.github.io/website/ .citation[ With slides from A. Karpathy, F. Fleuret, G. Louppe, C. Ollion, O. Grisel, Y. Avrithis ...] --- class: middle .bigger[Previously we saw that maintaining proper statistics of the activations and derivatives was a critical issue to allow the training of deep architectures.] .hidden.bigger[We can addres this by: ($1$) crafting smarter _weight initialization_ techniques ] .hidden.bigger[ ($2$) explicitly _forcing the activation statistics_ during the forward pass by re-normalizing them. ] .hidden.center.bigger[__Batch normalization__ .cites[(Ioffe and Szegedy, 2015)] was the first method introducing this idea.] --- count: false class: middle .bigger[Previously we saw that maintaining proper statistics of the activations and derivatives was a critical issue to allow the training of deep architectures.] .bigger[We can addres this by: ($1$) crafting smarter _weight initialization_ techniques ] .hidden.bigger[ ($2$)explicitly _forcing the activation statistics_ during the forward pass by re-normalizing them. ] .hidden.center.bigger[__Batch normalization__ .cites[(Ioffe and Szegedy, 2015)] was the first method introducing this idea.] --- count: false class: middle .bigger[Previously we saw that maintaining proper statistics of the activations and derivatives was a critical issue to allow the training of deep architectures.] .bigger[We can addres this by: ($1$) crafting smarter _weight initialization_ techniques ] .bigger[ ($2$) explicitly _forcing the activation statistics_ during the forward pass by re-normalizing them. ] .hidden.center.bigger[__Batch normalization__ .cites[(Ioffe and Szegedy, 2015)] was the first method introducing this idea.] --- count: false class: middle .bigger[Previously we saw that maintaining proper statistics of the activations and derivatives was a critical issue to allow the training of deep architectures.] .bigger[We can addres this by: ($1$) crafting smarter _weight initialization_ techniques ] .bigger[ ($2$) explicitly _forcing the activation statistics_ during the forward pass by re-normalizing them. ] .center.bigger[__Batch normalization__ .cites[(Ioffe and Szegedy, 2015)] was the first method introducing this idea.] --- class: middle .bigger.italic["Training Deep Neural Networks is complicated by the fact that the _distribution of each layer's inputs changes during training, as the parameters of the previous layers change_. This slows down the training by requiring lower learning rates and careful parameter initialization ..."] .pull-right[(Ioffe and Szegedy, 2015)] .reset-column[ ] .hidden.center.width-60[] .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; ICML 2015] --- count: false class: middle .bigger.italic["Training Deep Neural Networks is complicated by the fact that the _distribution of each layer's inputs changes during training, as the parameters of the previous layers change_. This slows down the training by requiring lower learning rates and careful parameter initialization ..."] .pull-right[(Ioffe and Szegedy, 2015)] .reset-column[ ] .center.width-60[] .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by R --- class: middle, center .bigger[Before we learn about BatchNorm, let's remember of another common strategy for controlling input data statistics.] --- # Data normalization Weight initialization strategies aim to preserve the activation variance constant across layers, under the initial assumption that the **input feature variances are the same**. That is, $$\text{Var}(x\_i) = \text{Var}(x\_j) \triangleq \text{Var}(x) \approx 1$$ for all pairs of features $i,j$. .credit[Credits: F. Fleuret] --- This constraint is usually not satisfied. However it can be enforced by **standardazing** the input data feature wise $$\mathbf{x}' = (\mathbf{x} - \hat{\mu}) \odot \frac{1}{\sqrt{\hat{\sigma}^2}},$$ where $$ \begin{aligned} \hat{\mu} = \frac{1}{N} \sum\_{\mathbf{x} \in \mathbf{d}} \mathbf{x} \quad\quad\quad \hat{\sigma}^2 = \frac{1}{N} \sum\_{\mathbf{x} \in \mathbf{d}} (\mathbf{x} - \hat{\mu})^2. \end{aligned} $$ .center.width-70[] .credit[Credits: G. Louppe] --- class: middle .bigger[In PyTorch:] ```python mu, std = train_input.mean(), train_input.std() train_input.sub_(mu).div_(std) test_input.sub_(mu).div_(std) ``` --- class: middle, center, black-slide .center.width-100[] --- class: middle ## Batch normalization Normalize activations in each **mini-batch** before activation function: *speeds up* and *stabilizes* training. --- class: middle Let us consider a minibatch of samples at training, for which $\mathbf{u}\_b \in \mathbb{R}^D$, $b=1, ..., B$, are intermediate values computed at some location in the computational graph. In batch normalization the per-component mean and variance are first computed on the batch $$ \hat{\mu}\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B \mathbf{u}\_b \quad\quad\quad \hat{\sigma}^2\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B (\mathbf{u}\_b - \hat{\mu}\_\text{batch})^2, $$ then used for component-wise normalization $$ \mathbf{\hat{u}}\_b = (\mathbf{u}\_b - \hat{\mu}\_\text{batch})\odot\frac{1}{\sqrt{\hat{\sigma}^2\_\text{batch} + \epsilon}} $$ from which the standardized $\mathbf{y}\_b \in \mathbb{R}^D$ are computed such that $$ \mathbf{y}\_b = \gamma \odot \mathbf{\hat{u}}\_b + \beta $$ where $\gamma, \beta \in \mathbb{R}^D$ are parameters to optimize and $\mathbf{y}\_b$ is the output of the BatchNorm layer. .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; ICML 2015] --- class: middle ## Recap (without the $b$ subscript) .grid[ .kol-6-12[ $\mathbf{u}$ $\hat{\mu}\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B \mathbf{u}\_b$ $\hat{\sigma}^2\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B (\mathbf{u}\_b - \hat{\mu}\_\text{batch})^2$ $\mathbf{\hat{u}} = (\mathbf{u} - \hat{\mu}\_\text{batch})\odot\frac{1}{\sqrt{\hat{\sigma}^2\_\text{batch} + \epsilon}}$ $\mathbf{y} = \gamma \odot \mathbf{\hat{u}} + \beta$ ] .kol-6-12[ input, shape is $B\times D$ per channel mean, shape is $D$ per channel variance, shape is $D$ normalized input, shape is $B\times D$ BN output, shape is $B\times D$ ] ] --- class: middle, center .Q[Why introducing the learnable parameters $\gamma,\beta$?] $$ \mathbf{y}\_b = \gamma \odot \mathbf{\hat{u}}\_b + \beta $$ .hidden.bigger[ Learning $\gamma = \sigma$ and $\beta = \mu$ will recover the identity function if needed. ] --- count: false class: middle, center .Q[Why introducing the learnable parameters $\gamma,\beta$?] $$ \mathbf{y}\_b = \gamma \odot \mathbf{\hat{u}}\_b + \beta $$ .bigger[ Learning $\gamma = \sigma$ and $\beta = \mu$ will recover the identity function if needed. ] --- class: middle, center .bigger[During training we compute minibatch statistics $\hat{\mu}\_\text{batch}$ and $\hat{\sigma}^2\_\text{batch}$.] .Q[What challenges does this bring at test time?] .hidden.bigger[As for dropout, the model behaves differently during *train* and *test*.] --- count: false class: middle, center .bigger[During training we compute minibatch statistics $\hat{\mu}\_\text{batch}$ and $\hat{\sigma}^2\_\text{batch}$.] .Q[What challenges does this bring at test time?] .bigger[As for dropout, the model behaves differently during *train* and *test*.] --- # Batc Normalization - training - as the name suggests, BN learns using the *mini-batch statistics* - during forward for each mini-batch $\mathbf{u}$ the mean and variance: $$ \hat{\mu}\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B \mathbf{u}\_b \quad\quad\quad \hat{\sigma}^2\_\text{batch} = \frac{1}{B} \sum\_{b=1}^B (\mathbf{u}\_b - \hat{\mu}\_\text{batch})^2, $$ - on the side we store moving averages of the *training set mean and variance* updated at every mini-batch during training: $$ \begin{aligned} \mu\_\text{stat}^{(\tau+1)} & = \alpha \mu\_\text{stat}^{(\tau)} + (1 - \alpha) \hat{\mu}\_\text{batch} \\\\ \sigma\_\text{stat}^{(\tau+1)} & = \alpha \sigma\_\text{stat}^{(\tau)} + (1 - \alpha) \hat{\sigma}\_\text{batch} \end{aligned} $$ .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; ICML 2015] --- class: middle, center .Q[How do we perform backpropagation in BatchNorm layers?] --- # Batch Normalization - inference/runtime - During inference, BatchNorm shifts and rescales each component according to the empirical moments estimated during training: $$\mathbf{y} = \gamma \odot (\mathbf{u} - \mu\_\text{stat})\odot\frac{1}{\sqrt{\sigma^2\_\text{stat} + \epsilon}} + \beta$$ - During inference, BatchNorm performs a *component-wise affine transformation* and it can process samples individually - BatchNorm can be converted into computationally efficient layers at runtime .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; ICML 2015] --- # Batch normalization As dropout, batch normalization is implemented as a separate module .highlight[`torch.BatchNorm1d`] / .highlight[`torch.BatchNorm2d`] / .highlight[`torch.BatchNorm3d`] that processes the input components separately. -- count: false ```py >>> x = torch.randn(16, 100, 7, 7) >>> bn2d = nn.BatchNorm2d(100) >>> y = bn2d(x) >>> x.size() torch.Size([16, 100, 7, 7]) >>> y.size() torch.Size([16, 100, 7, 7]) >>> bn2d.weight.data.size() torch.Size([100]) >>> bn2d.bias.data.size() torch.Size([100]) >>> bn2d.running_mean.size() torch.Size([100]) >>> bn2d.running_var.size() torch.Size([100]) ``` --- count: false # Batch normalization As dropout, batch normalization is implemented as a separate module .highlight[`torch.BatchNorm1d`] / .highlight[`torch.BatchNorm2d`] / .highlight[`torch.BatchNorm3d`] that processes the input components separately. ```py *>>> x = torch.randn(16, 100, 7, 7) >>> bn2d = nn.BatchNorm2d(100) >>> y = bn2d(x) *>>> x.size() *torch.Size([16, 100, 7, 7]) *>>> y.size() *torch.Size([16, 100, 7, 7]) >>> bn2d.weight.data.size() torch.Size([100]) >>> bn2d.bias.data.size() torch.Size([100]) >>> bn2d.running_mean.size() torch.Size([100]) >>> bn2d.running_var.size() torch.Size([100]) ``` --- count: false # Batch normalization As dropout, batch normalization is implemented as a separate module .highlight[`torch.BatchNorm1d`] / .highlight[`torch.BatchNorm2d`] / .highlight[`torch.BatchNorm3d`] that processes the input components separately. ```py >>> x = torch.randn(16, 100, 7, 7) *>>> bn2d = nn.BatchNorm2d(100) >>> y = bn2d(x) >>> x.size() torch.Size([16, 100, 7, 7]) >>> y.size() torch.Size([16, 100, 7, 7]) *>>> bn2d.weight.data.size() *torch.Size([100]) *>>> bn2d.bias.data.size() *torch.Size([100]) >>> bn2d.running_mean.size() torch.Size([100]) >>> bn2d.running_var.size() torch.Size([100]) ``` --- count: false # Batch normalization As dropout, batch normalization is implemented as a separate module .highlight[`torch.BatchNorm1d`] / .highlight[`torch.BatchNorm2d`] / .highlight[`torch.BatchNorm3d`] that processes the input components separately. ```py >>> x = torch.randn(16, 100, 7, 7) *>>> bn2d = nn.BatchNorm2d(100) >>> y = bn2d(x) >>> x.size() torch.Size([16, 100, 7, 7]) >>> y.size() torch.Size([16, 100, 7, 7]) >>> bn2d.weight.data.size() torch.Size([100]) >>> bn2d.bias.data.size() torch.Size([100]) *>>> bn2d.running_mean.size() *torch.Size([100]) *>>> bn2d.running_var.size() *torch.Size([100]) ``` --- # Batch normalization Results on ImageNet LSVRC 2012: .center.width-70[] .citation[S. Ioffe and C. Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift; ICML 2015] -- count: false - learning rate can be greater - dropout and local normalization are not necessary - $L\_2$ regularization influence should be reduced --- class: middle ## Drawbacks of Batch Normalization - requires larger mini-batches to work well .hidden[ - potential discrepancy between training and testing distributions (addressed in Batch Re-Normalization) - head-aches for multi-GPU training: statistics must be synchronised often - we still don't have a precise understanding on why it works this well (many papers address this recently). Pay attention to it in novel settings, e.g., self-supervised learning. ] --- count: false class: middle .center.bigger[BatchNorm doesn't work well with small mini-batches] .center.width-60[] .caption[ImageNet classification error vs. batch sizes] .citation[Y. Wu and K. He, Group Normalization, ECCV 2018] --- count: false class: middle ## Drawbacks of Batch Normalization - requires larger mini-batches to work well - potential discrepancy between training and testing distributions (addressed in Batch Re-Normalization) .hidden[ - head-aches for multi-GPU training: statistics must be synchronised often - we still don't have a precise understanding on why it works this well (many papers address this recently). Pay attention to it in novel settings, e.g., self-supervised learning. ] --- count: false class: middle ## Drawbacks of Batch Normalization - requires larger mini-batches to work well - potential discrepancy between training and testing distributions (addressed in Batch Re-Normalization) - head-aches for multi-GPU training: statistics must be synchronised often .hidden[ - we still don't have a precise understanding on why it works this well (many papers address this recently). Pay attention to it in novel settings, e.g., self-supervised learning. ] --- count: false class: middle ## Drawbacks of Batch Normalization - requires larger mini-batches to work well - potential discrepancy between training and testing distributions (addressed in Batch Re-Normalization) - head-aches for multi-GPU training: statistics must be synchronised often - we still don't have a precise understanding on why it works this well (many papers address this recently). Pay attention to it in novel settings, e.g., self-supervised learning. --- class: middle ## Practicalities - BatchNorm layers can be placed across the network after each convolutional block - unclear whether to place BatchNorm layers before or after activation layers (ReLU) --- # Multiple variants .center.width-90[] .citation[Y. Wu and K. He, Group Normalization, ECCV 2018] --- # Weight Standardization - recent approach for *micro-batch training* (i.e. 1-2 images per GPU): object detection, semantic segmentation - .italic[standardizes]/normalizes weights in convolutional layers .center.width-60[] .citation[S. Qiao et al., Weight Standardization, arXiv 2019] --- # Weight Standardization .center.width-50[] .citation[S. Qiao et al., Weight Standardization, arXiv 2019] --- class: end-slide, center count: false The end.