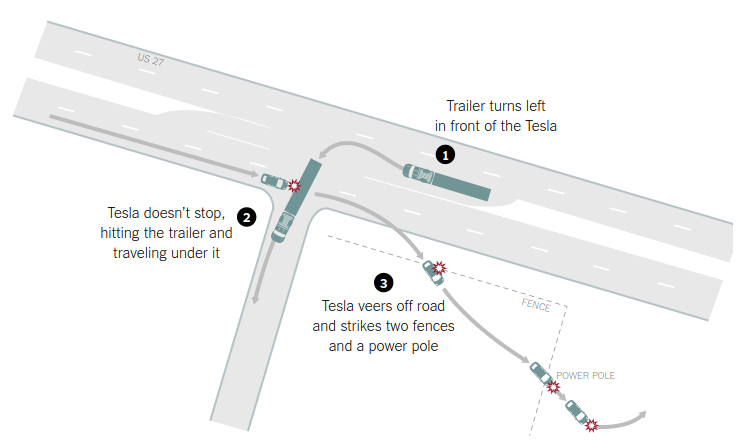

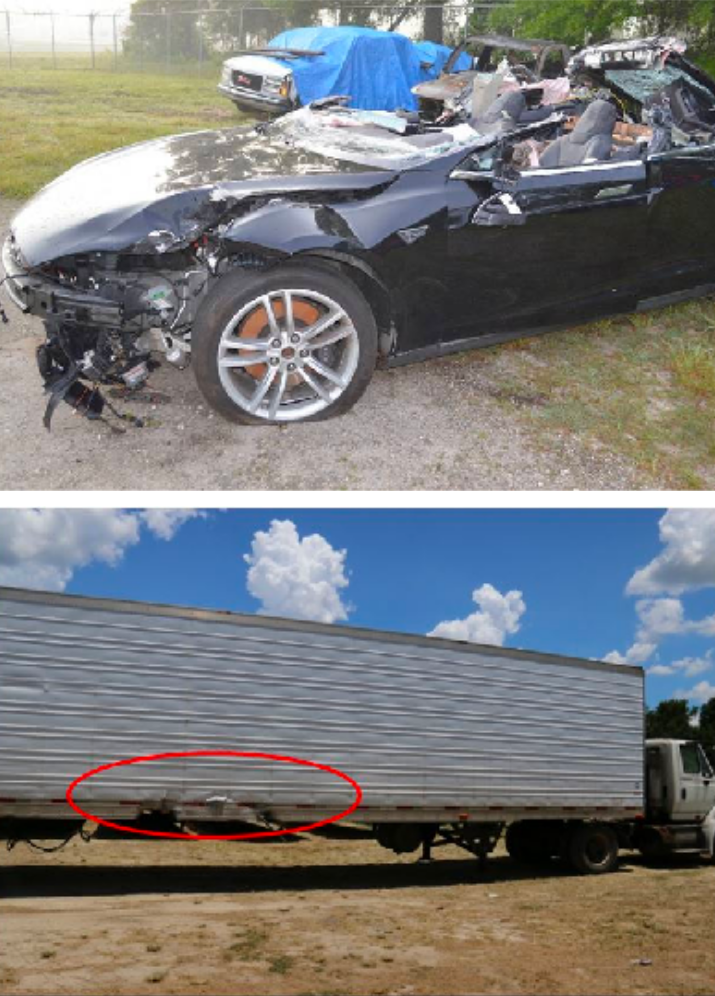

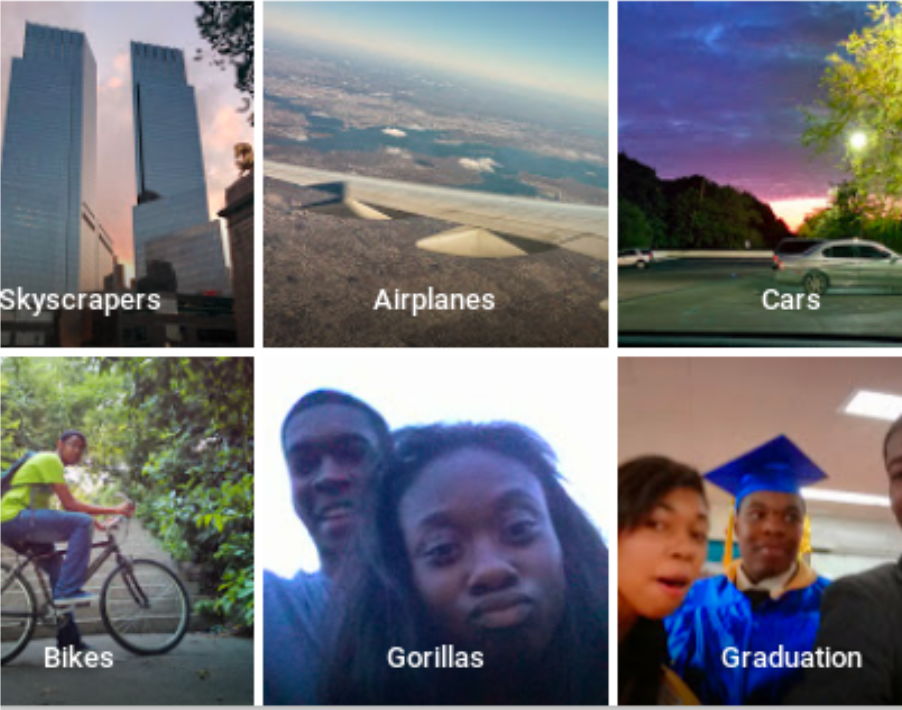

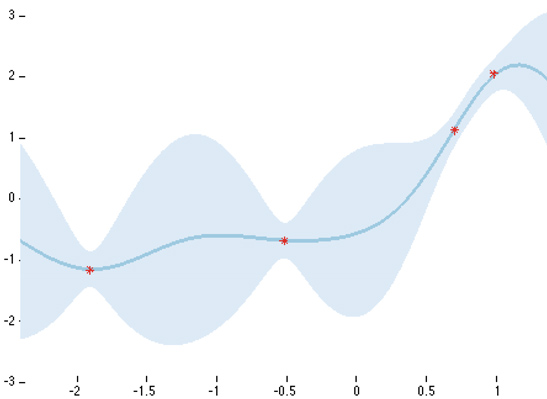

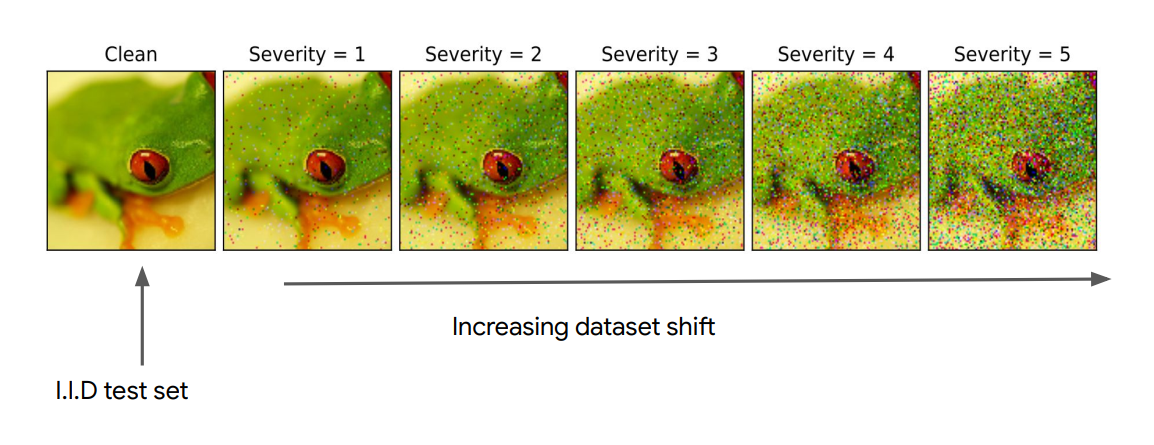

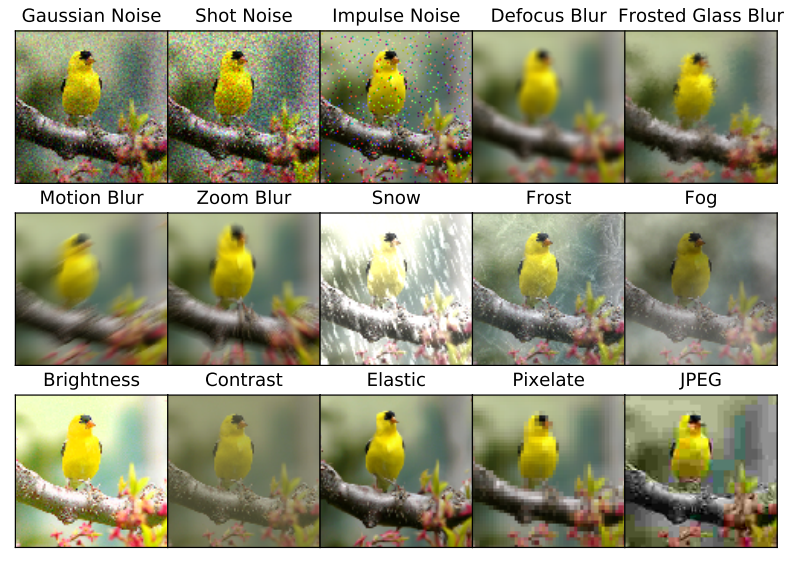

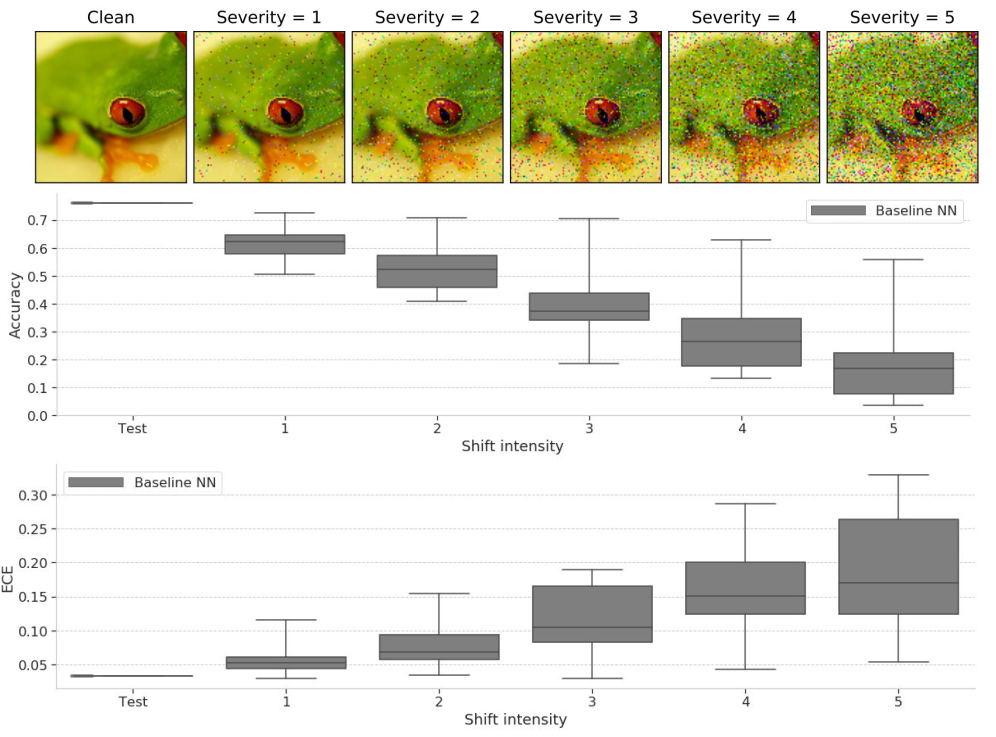

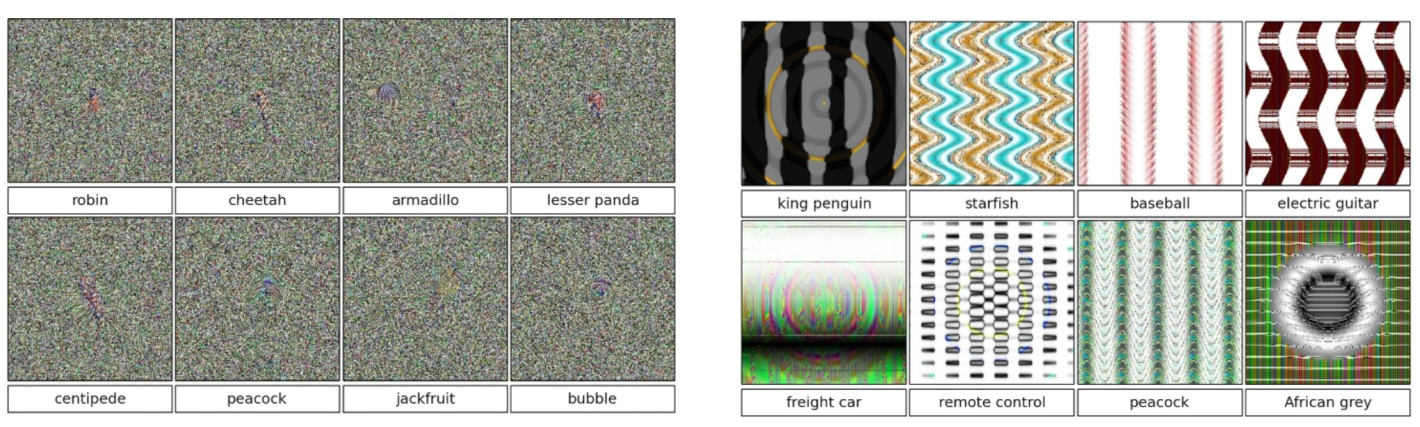

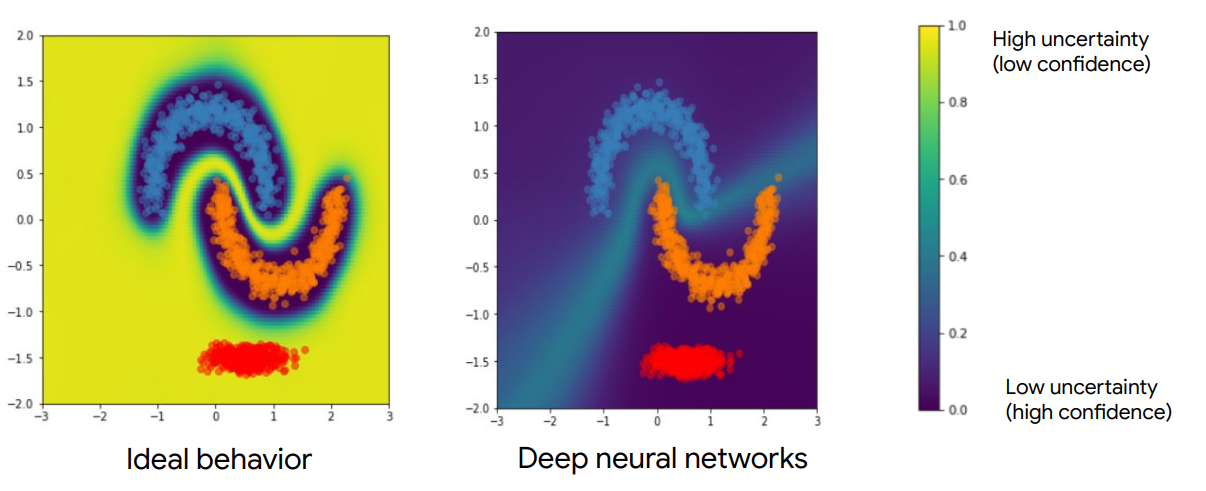

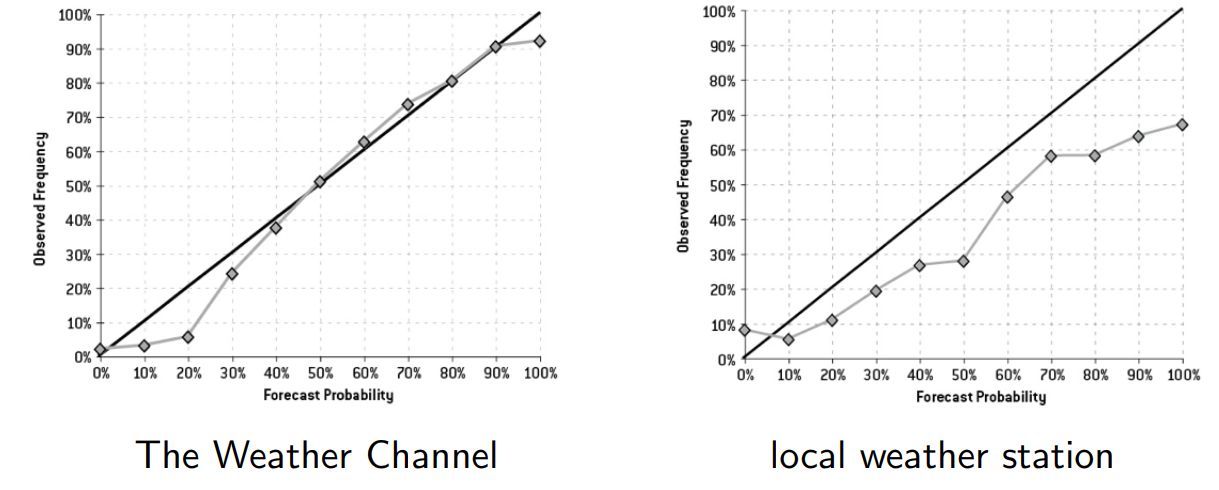

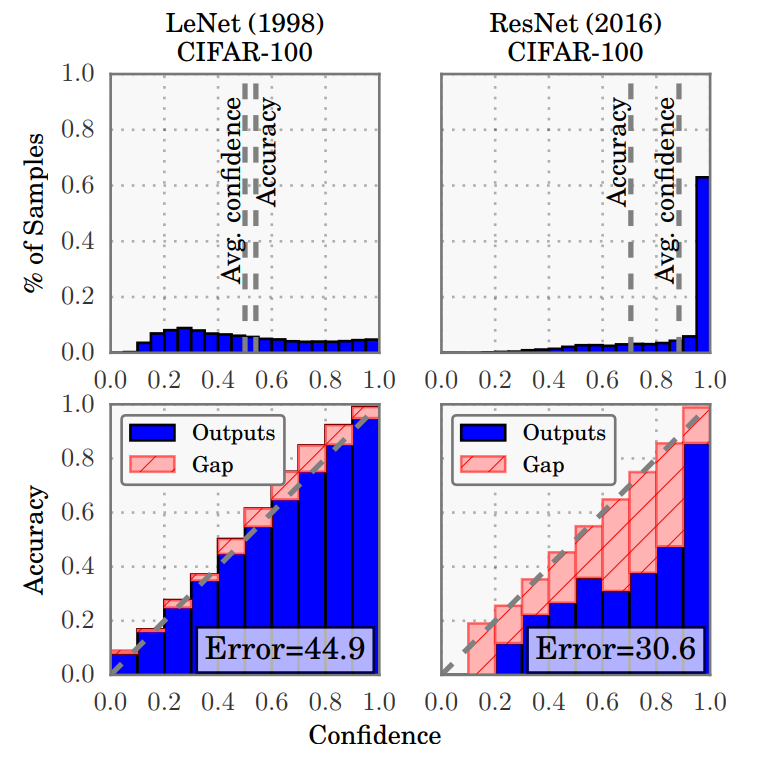

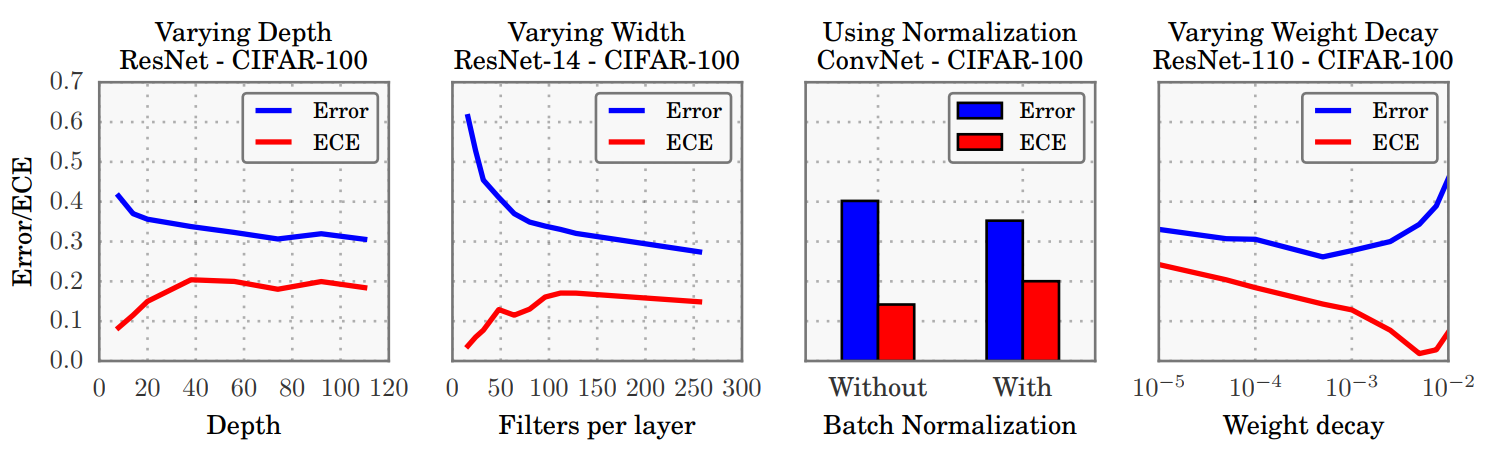

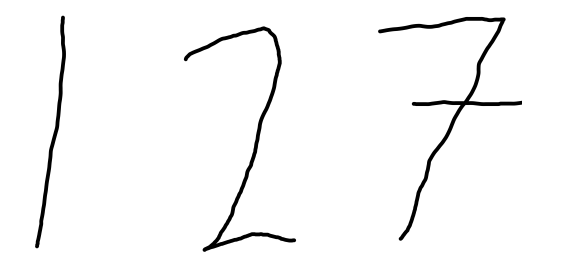

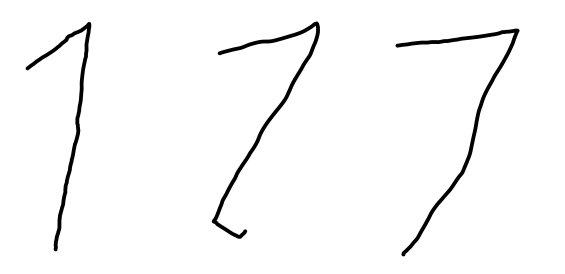

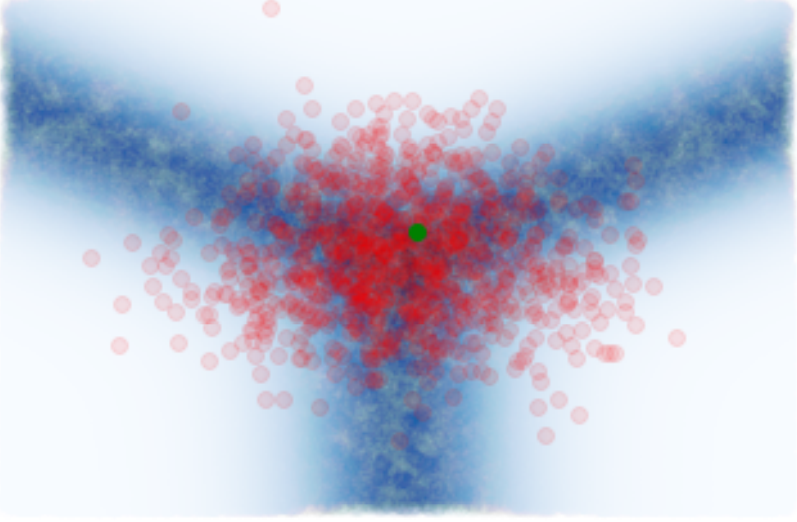

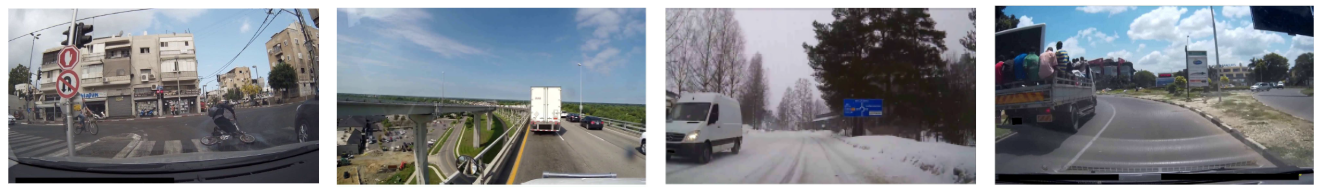

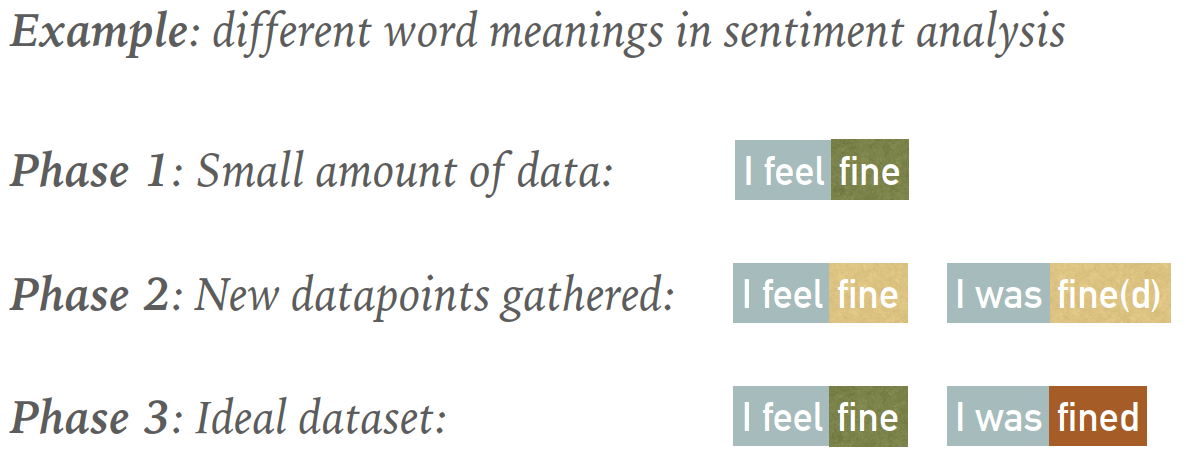

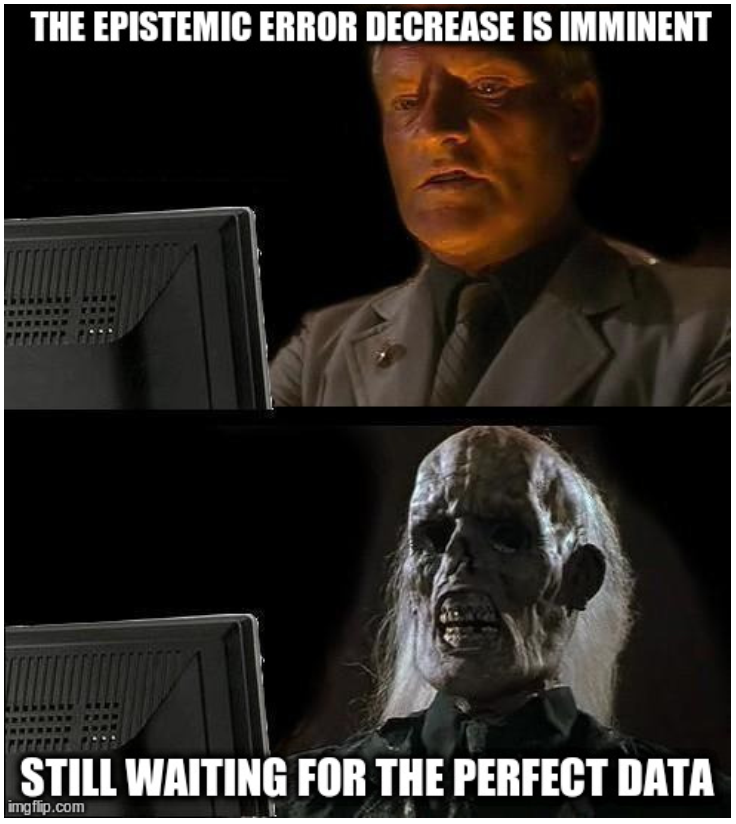

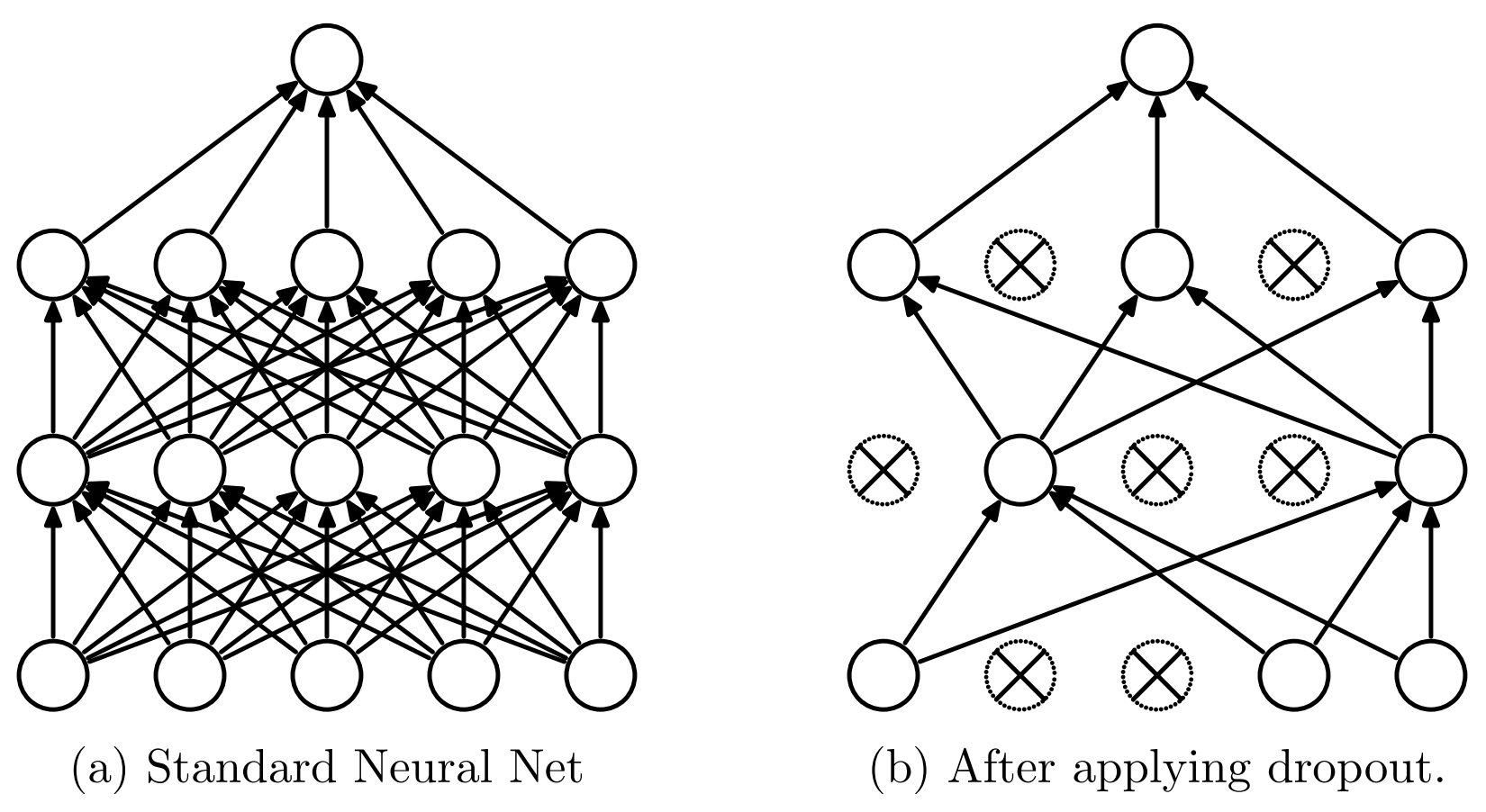

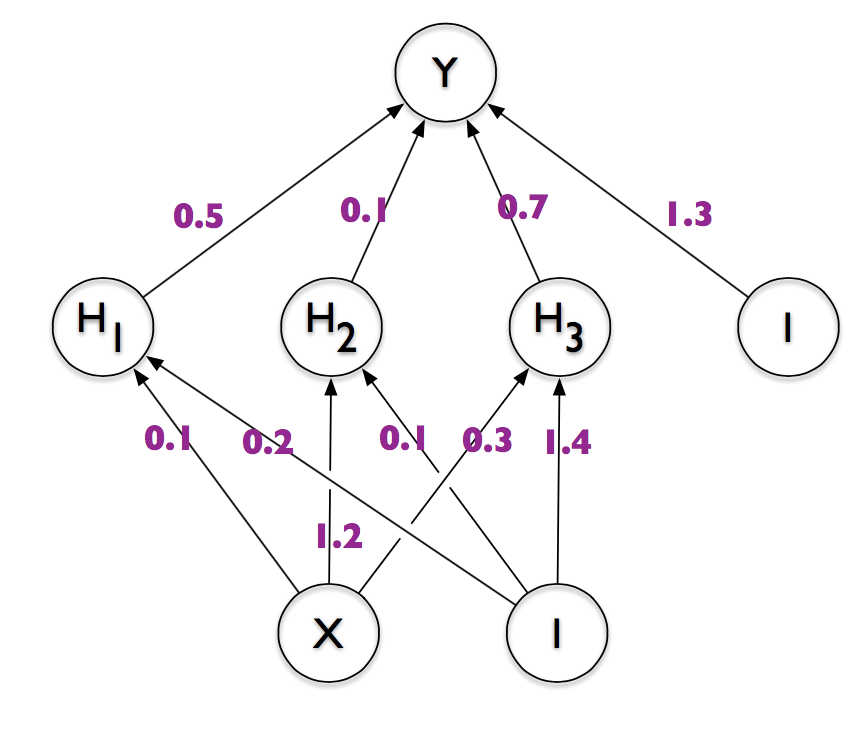

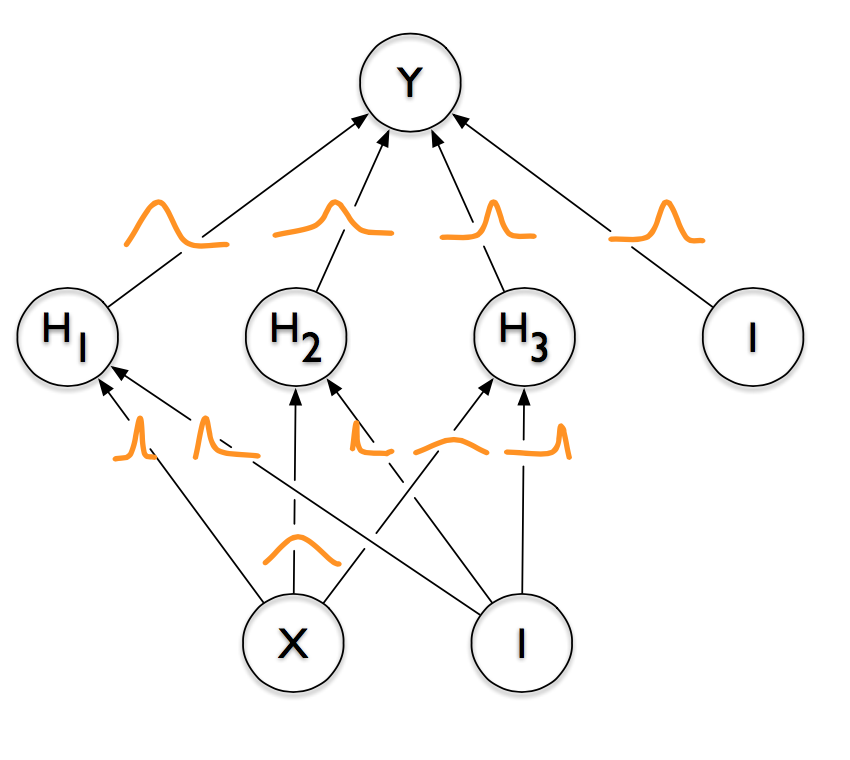

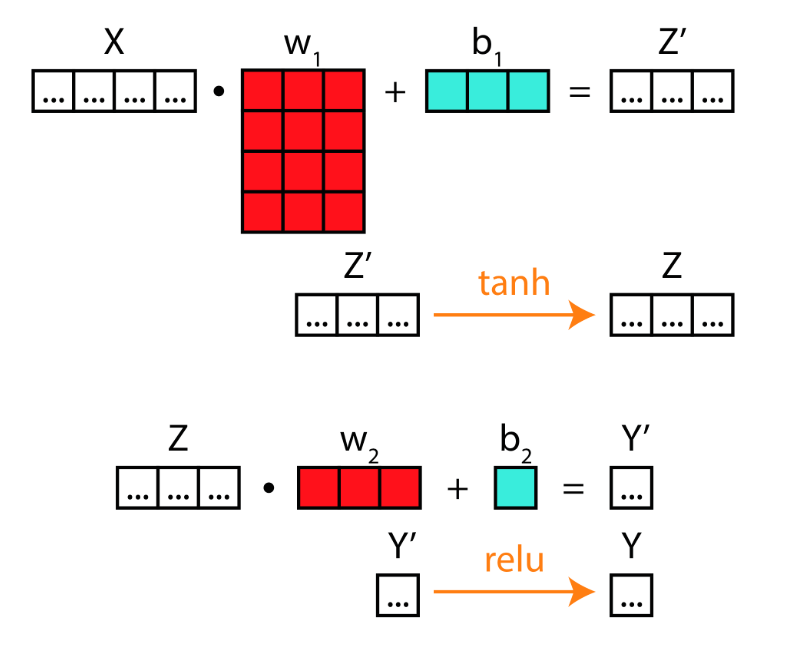

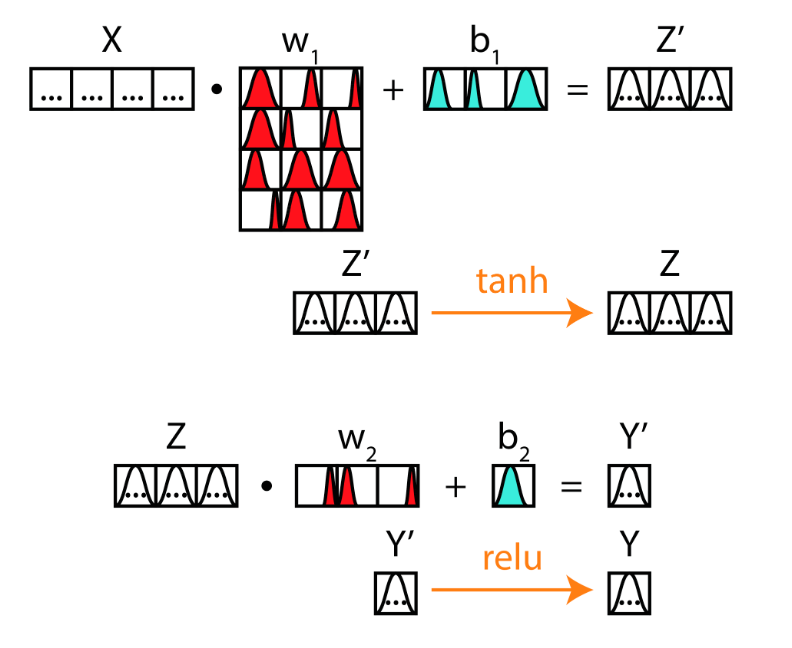

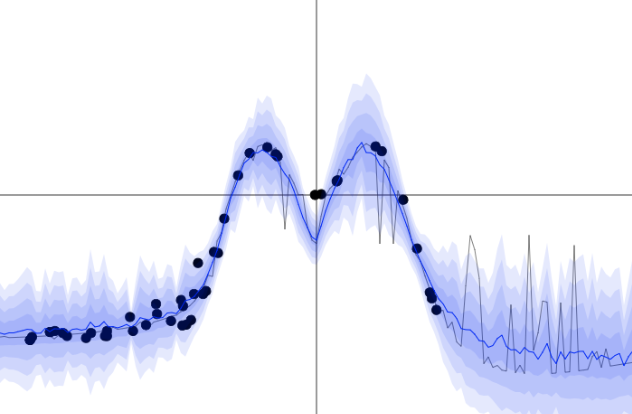

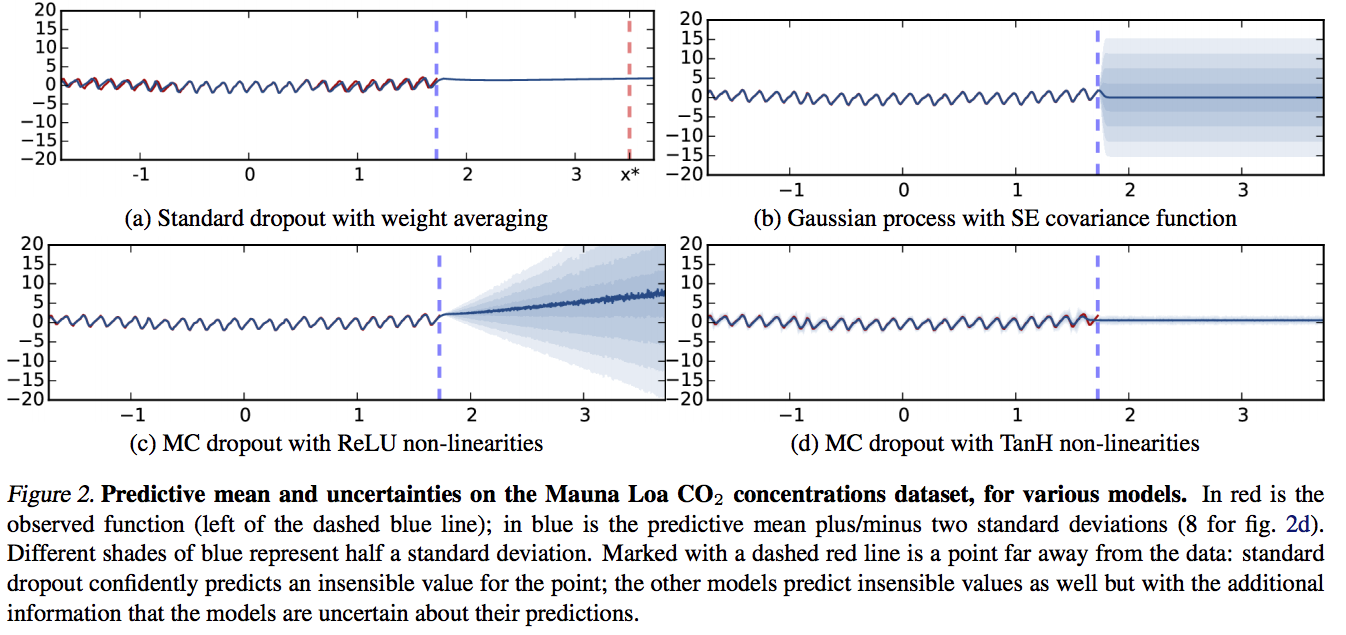

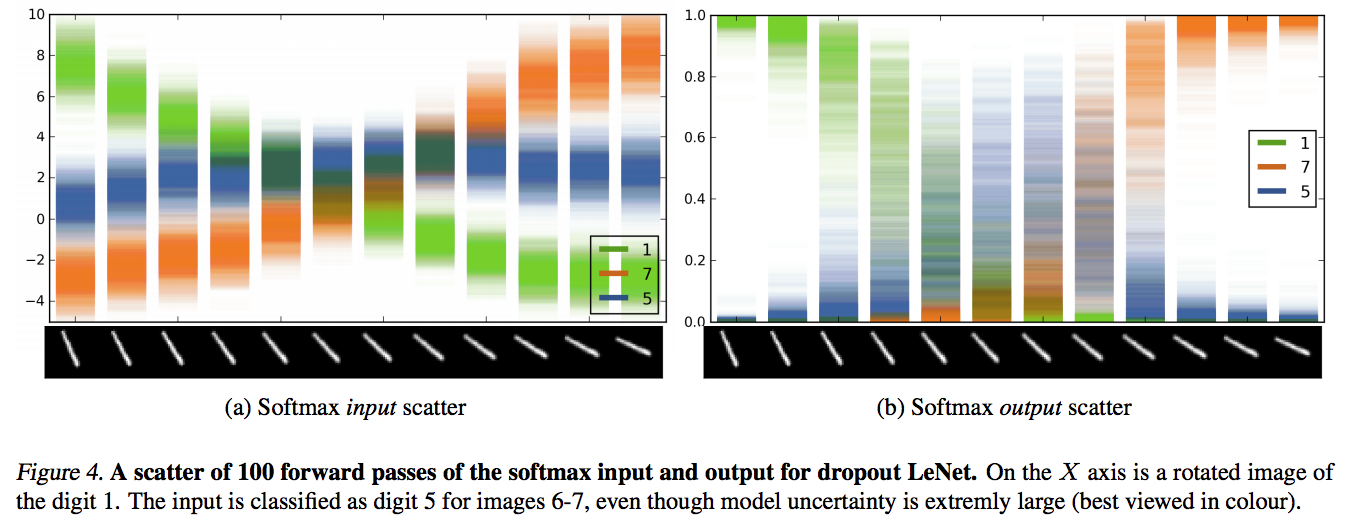

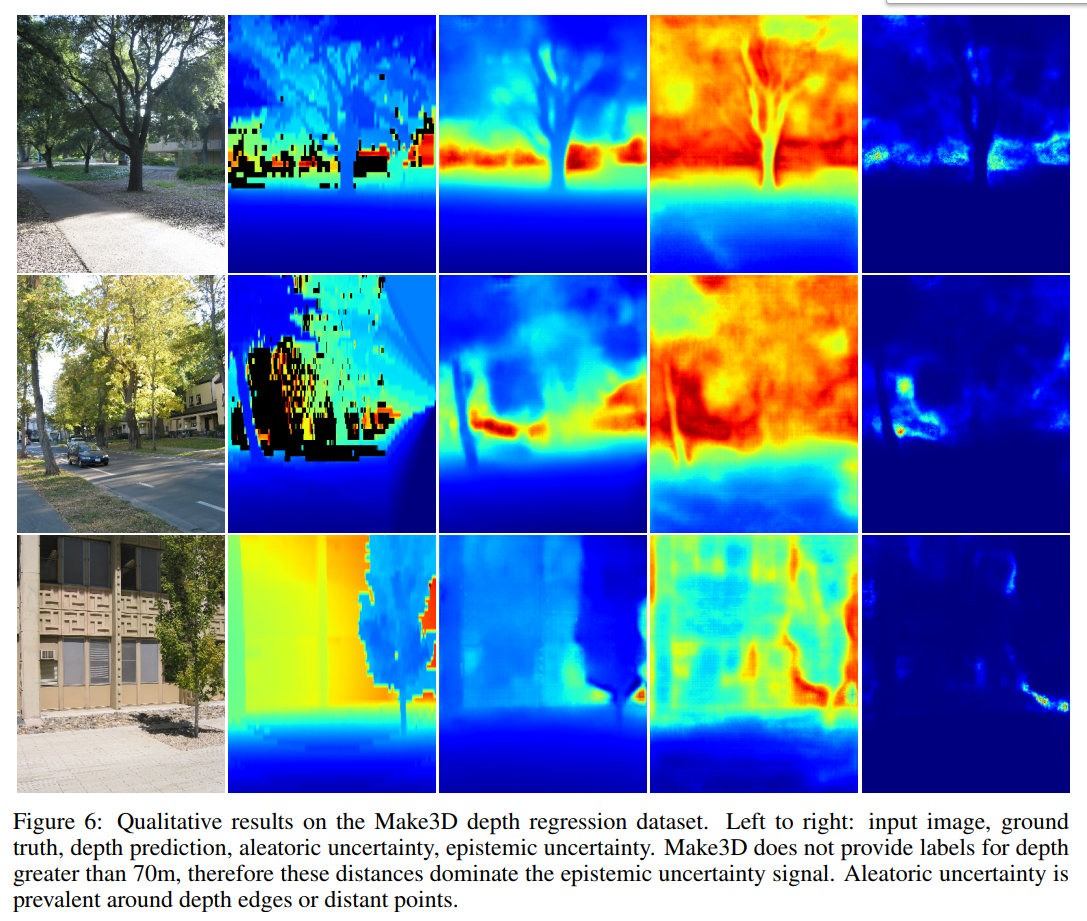

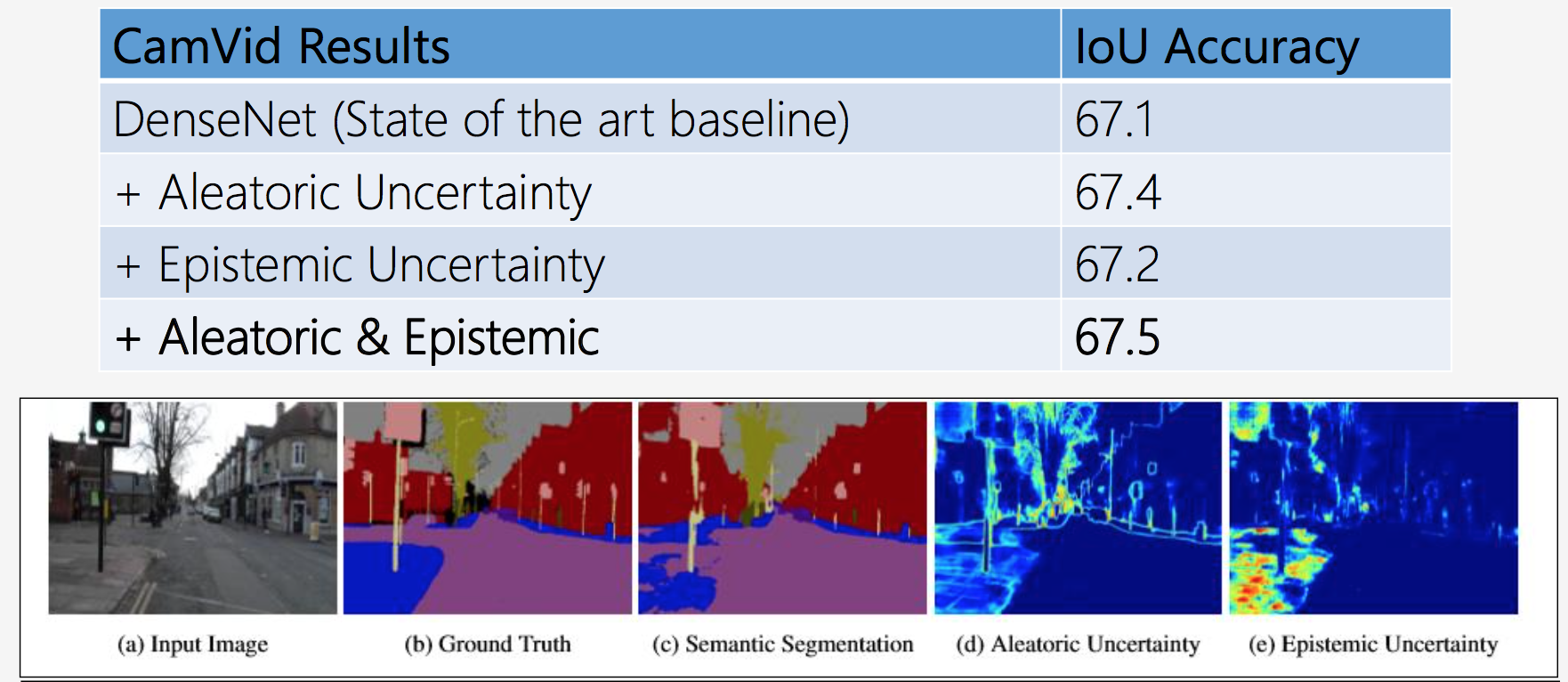

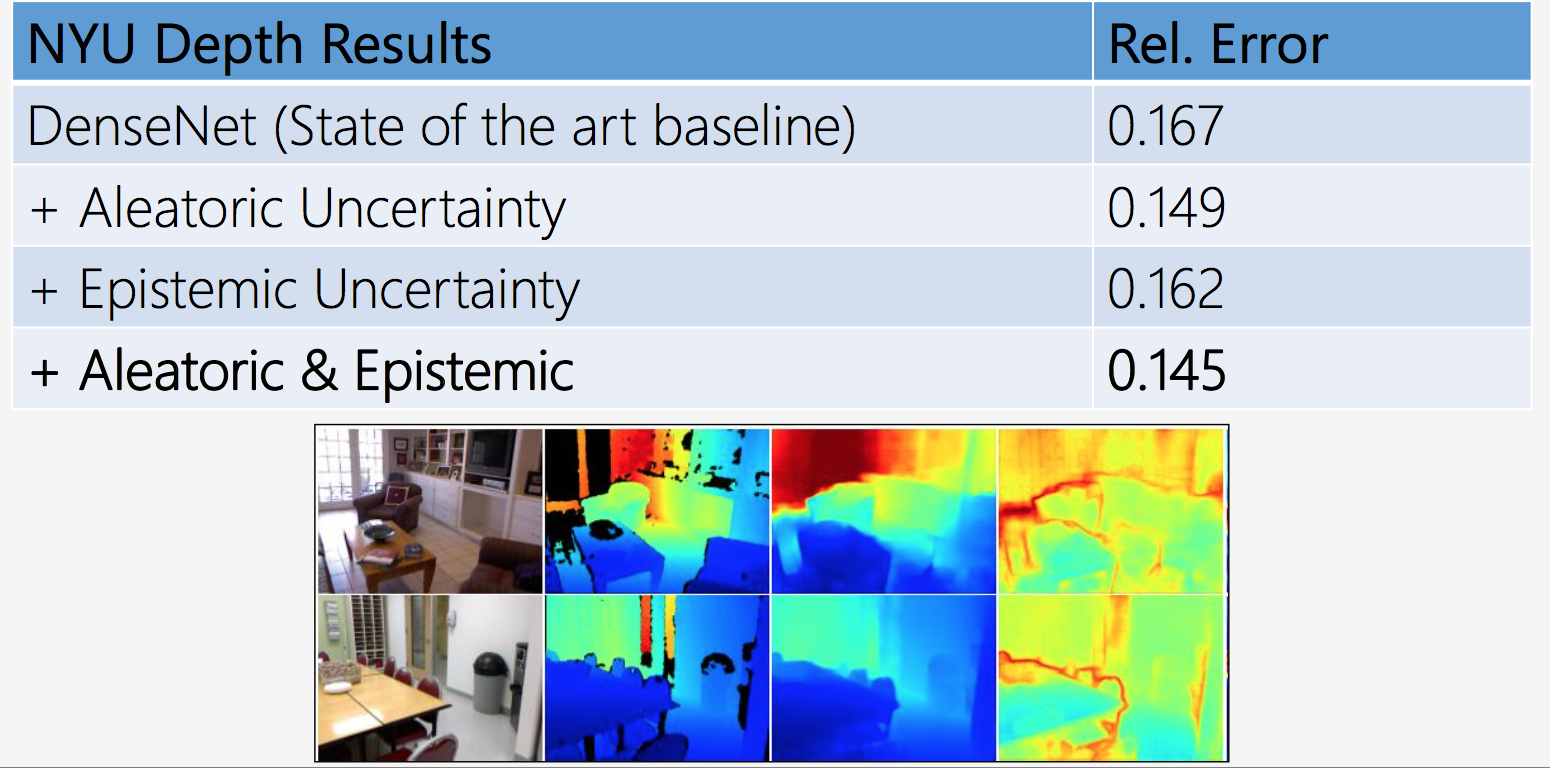

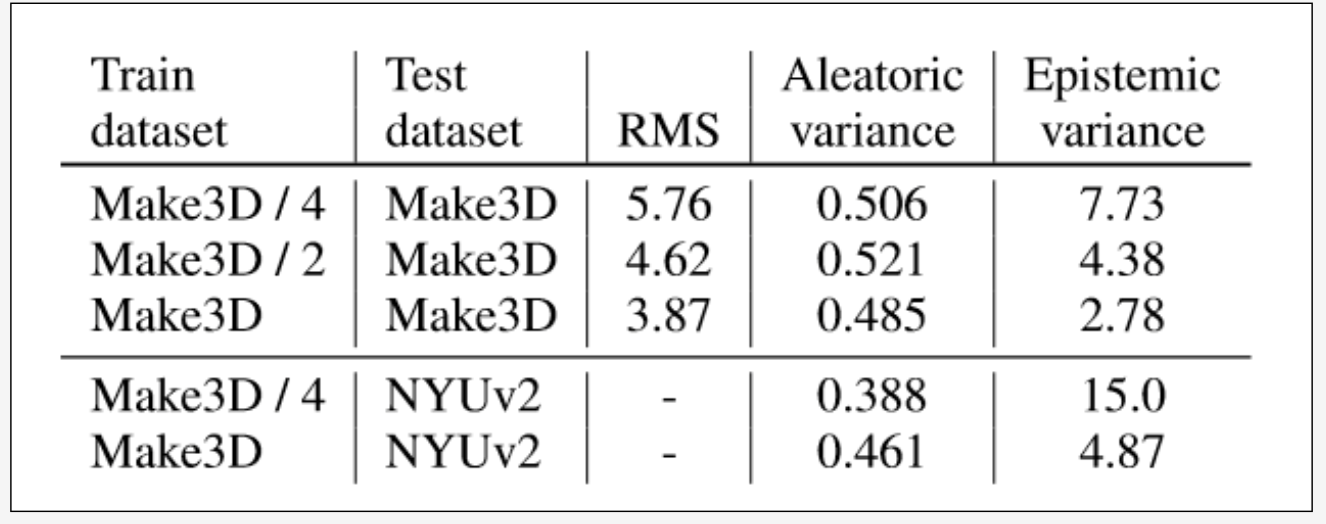

layout: true .center.footer[Marc LELARGE and Andrei BURSUC | Deep Learning Do It Yourself | 15.2 Uncertainty estimation - MCDropout] --- class: center, middle # 15.2 Towards deep learning for the real world .hidden[ ## .italic[i.e.], beyond cats and dogs ] <br/> .center.big.bold[Andrei Bursuc] --- class: center, middle # Towards deep learning for the real world ## .italic[i.e.], beyond cats and dogs <br/> .center.big.bold[Andrei Bursuc] --- class: middle, center # Motivation --- class: middle .grid[ .kol-6-12[ .big.green[Deep Learning is great:] - conceptually simple and modular - scales well with data - awesome software tools - huge community and interest - potentially real world impact ] ] --- class: middle count: false .grid[ .kol-6-12[ .big.green[Deep Learning is great:] - conceptually simple and modular - scales well with data - awesome software tools - huge community and interest - potentially real world impact ] .kol-6-12[ .big.red[ ... but has several problems] - uninterpretable black-boxes - needs a lot of data - mostly empirical - what does a model not know? - can be fooled easily ] ] --- class: middle, center ## The world is a complex environment .center.width-80[] .center[Covering this diversity with (sufficient) data and labels is highly challenging] --- class: middle, center ## Dealing with uncertainty .center.width-40[] --- class: middle, center .bigg[Why should I care about uncertainty?] --- # Motivation In May 2016, there was the **first fatality** from an assisted driving system, caused by the perception system confusing the white side of a trailer for bright sky. .left-column[.center.width-100[]] .right-column[.center.width-60[]] --- # Motivation .center.width-50[] An image classification system erroneously identifies two African Americans as gorillas, raising concerns of racial discrimination. --- class: middle, center .bigg[What do we mean by uncertainty?] --- class: middle ## What do we mean by uncertainty? .grid[ .kol-8-12[ .bigger[ Return a distribution over predictions instead of a single prediction: - *classification*: output a label and its confidence - *regression*: output a mean and a variance ] ] .kol-4-12[ .center.width-90[] ] ] --- class: middle, center .big[Good uncertainty estimates tell us *when we can trust the predictions of our model*.] --- class: middle ## What do we mean by Out-of-Distribution Robustness? .grid[ .kol-6-12[ .big[ **I.I.D**] $\text{ }p\_{\text{test}}(\mathbf{x}, y) = p\_{\text{train}}(\mathbf{x}, y)$ (I.I.D. = Indepedent and Identically Distributed) ] .kol-6-12[ .big[ **O.O.D**] $\text{ }p\_{\text{test}}(\mathbf{x}, y) =\not\ p\_{\text{train}}(\mathbf{x}, y)$ ] ] .hidden[ Examples of dataset shift: - *covariate shift*: distribution of features $p\(\mathbf{x})$ changes and $p\(y \vert \mathbf{x})$, i.e., labels, is fixed - *open-set recognition*: new classes may appear at test time - *label shift*: distribution of labels $p\(y)$ changes and $p\(\mathbf{x} \vert y)$ labels, is fixed ] --- count: false class: middle ## What do we mean by Out-of-Distribution Robustness? .grid[ .kol-6-12[ .big[ **I.I.D**] $\text{ }p\_{\text{test}}(\mathbf{x}, y) = p\_{\text{train}}(\mathbf{x}, y)$ (I.I.D. = Indepedent and Identically Distributed) ] .kol-6-12[ .big[ **O.O.D**] $\text{ }p\_{\text{test}}(\mathbf{x}, y) =\not\ p\_{\text{train}}(\mathbf{x}, y)$ ] ] Examples of dataset shift: - *covariate shift*: distribution of features $p\(\mathbf{x})$ changes and $p\(y \vert \mathbf{x})$, i.e., labels, is fixed - *open-set recognition*: new classes may appear at test time - *label shift*: distribution of labels $p\(y)$ changes and $p\(\mathbf{x} \vert y)$ labels, is fixed --- class: middle ## Varying corruption intensity for dataset shift .center.width-100[] .caption[Samples from ImageNet-C] .citation[D. Hendrycks & T. Dietterich, Benchmarking Neural Network Robustness to Common Corruptions and Perturbations, ICLR 2019 ] --- class: middle ## Varying corruption intensity for dataset shift .center.width-60[] .caption[Corruption types for ImageNet-C] .citation[D. Hendrycks & T. Dietterich, Benchmarking Neural Network Robustness to Common Corruptions and Perturbations, ICLR 2019 ] --- class: middle ## Neural nets do not generalize under covariate shift .grid[ .kol-4-12[ <br/><br/> <br/><br/><br/> - **Accuracy drops** with increasing shift on ImageNet-C <br/><br/> <br/><br/> - **Uncertainty quality degrades**, making overconfident errors ] .kol-8-12[ .center.width-80[] ] ] .citation[Y. Ovadia et al., Can You Trust Your Model's Uncertainty? Evaluating Predictive Uncertainty Under Dataset Shift, NeurIPS 2019 ] --- class: middle ## Neural nets assign high confidence predictions to OOD data .center.width-100[] .caption[Example images where model assigns ${>}99.5\%$ confidence] .citation[A. Nguyen et al., Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images, CVPR 2015 ] --- class: middle ## Neural nets assign high confidence predictions to OOD data .center.width-80[] .citation[J.Z. Liu et al., Simple and Principled Uncertainty Estimation with Deterministic Deep Learning via Distance Awareness, arXiv 2020 ] --- class: middle, center .bigger[ $$\text{Calibration Error} = \vert \underbrace{\text{Confidence}}\_{\text{predicted probability of correctness}} - \underbrace{\text{Accuracy}}\_{\text{observed frequency of correctness}} \vert $$ ] --- class: middle ## Calibration - _Calibration_: of the times your model predicts something with $90\%$ confidence, is it right $90\%$ of the time? <br/> .center.width-80[] .caption[Calibration of weather forecasts] .citation[Nate Silver, The singal and the noise] --- class: middle .big[Most neural networks output probability distributions, e.g., over object categories. Are these calibrated?] --- class: middle ## Measuring calibration: Expected Calibration Error .grid[ .kol-6-12[ <br/> .bigger[ $$\text{ECE} = \sum\_{b=1}^{b}\frac{n\_b}{N}\vert \text{acc}(b) -\text{conf}(b) \vert$$ ] ] .kol-6-12[ - Bin the probabilities into $B$ bins - Compute the within-bin accuracy and within-bin predicted confidence - Average the calibration error across bins (weighted by number of points in each bin) ] ] --- class: middle ## Calibration .grid[ .kol-6-12[ <br/><br/><br/> - Most neural networks output probability distributions, e.g., over object categories. Are these calibrated? ] .kol-6-12[ .center.width-90[] ] ] .citation[C. Guo et al., On Calibration of Modern Neural Networks, ICML 2017] --- ## Why is this happening now? .center.width-100[] .caption[The effect of network depth (far left), width (middle left), Batch Normalization (middle right), and weight decay (far right) on miscalibration, as measured by ECE (lower is better).] .citation[C. Guo et al., On Calibration of Modern Neural Networks, ICML 2017] -- count: false .center.big.red[We kind of got too good at training these beasts] --- class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human .hidden[ - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs - **Chatbots**: detect unknown sentences - **Active Learning**: use model uncertainty to decide which training examples are worth labeling - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off ] --- count: false class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs .hidden[ - **Chatbots**: detect unknown sentences - **Active Learning**: use model uncertainty to decide which training examples are worth labeling - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off ] --- count: false class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs - **Chatbots**: detect unknown sentences .hidden[ - **Active Learning**: use model uncertainty to decide which training examples are worth labeling - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off ] --- count: false class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs - **Chatbots**: detect unknown sentences - **Active Learning**: use model uncertainty to decide which training examples are worth labeling .hidden[ - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off ] --- count: false class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs - **Chatbots**: detect unknown sentences - **Active Learning**: use model uncertainty to decide which training examples are worth labeling - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next .hidden[ - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off ] --- count: false class: middle ## Applications - **Autonomous vehicles**: dataset shift: location, weather, time of day; use model uncertainty to decide when to trust model or hand-over to human - **Healthcare**: model uncertainty for trusting the model or calling doctor; reject low-quality inputs - **Chatbots**: detect unknown sentences - **Active Learning**: use model uncertainty to decide which training examples are worth labeling - **Bayesian Optimization**: optimize an expensive black-box function by finding which configurations to explore next - **Reinforcement Learning**: use uncertainty for exploration vs. exploitation trade-off --- class: center, middle # Sources of uncertainty --- class: middle, center .big[There are two main types of uncertainties each with its own pecularities] --- class: middle, center # Case 1 --- # Case 1 Problems caused by sensor quality, natural randomness, that cannot be explained by our data. .center.width-90[] -- count: false .center.big[__Aleatoric / Data uncertainty__] -- count: false - _.italic[aleator]_ (lat.) = dice player -- count: false - cannot be reduced, but can be learned - useful for: + large data situation, where model uncertainty is low + real-time processing, cheaper to compute than model uncertainty --- # Case 1' Similarly looking objects also fall into this category .grid[ .kol-6-12[ .center.width-80[] ] .kol-6-12[ .center.width-80[] ] ] --- class: middle .grid[ .kol-4-12[ <br/> <br/> <br/> <br/> <br/> Similarly looking objects also fall into this category ] .kol-8-12[ .center.width-60[] ] ] --- # Aleatoric uncertainty ### Distinct classes .center.width-40[] .credit[Credit: A. Malinin] -- ### Overlapping classes .center.width-40[] --- class: middle .big[In urban scenes this type of uncertainty is frequently caused by similarly-looking classes: - .italic[pedestrian - cyclist - person on trottinette/scooter] - .italic[road - sidewalk] ] --- # Aleatoric uncertainty .center.width-70[] .credit[Credit: A. Malinin] --- # Aleatoric uncertainty .center.width-70[] .credit[Credit: A. Malinin] --- # Aleatoric uncertainty .center.width-100[] .grid[ .kol-6-12[ .center.big[Low entropy] ] .kol-6-12[ .center.big[High entropy] ] ] .credit[Credit: A. Malinin] --- class: middle .big[In layman words data uncertainty is called the: __known unknown__] --- class: middle, center # Case 2 --- # Case 2 Lack of knowledge about the process that generated the data .center.width-90[] -- count: false .center.big[__Epistemic/Knowledge uncertainty__] -- count: false - _.italic[episteme]_ (gr.) = knowledge -- count: false - disappears given enough data - useful for: + detecting samples far from the training distribution + small datasets with little annotated data --- # Case 2 - Epistemic error decreases when you gather more points: <br/> .center.width-60[] .credit[Slide credit: Marcin Mozejko] --- class: middle .center.width-50[] .credit[Image credit: Marcin Mozejko] --- # Case 2' Let us consider a neural network model trained with several pictures of dog breeds. .left-column[ .center.width-70[] ] .right-column[ ] --- count: false # Case 2' Let us consider a neural network model trained with several pictures of dog breeds. .left-column[ .center.width-70[] ] .right-column[ .center.width-70[] ] .reset-column[ ] - We ask the model to decide on a dog breed using a photo of a cat. - What would you want the model to do? --- count: false # Case 2' Let us consider a neural network model trained with several pictures of dog breeds. .left-column[ .center.width-70[] ] .right-column[ .center.width-70[] ] .reset-column[ ] .center.big[__Out-of-distribution uncertainty__] --- class: middle .big[In layman words, knowledge uncertainty is called the: __unknown unknown__] --- # Epistemic uncertainty .center.width-70[] .credit[Credit: A. Malinin] --- # Epistemic uncertainty ### Unseen classes .center.width-60[] .credit[Credit: A. Malinin] -- ### Unseen variations of seen classes .center.width-60[] --- class: middle .center.width-70[] .caption["Our model exhibits in (d) increased .bold[aleatoric uncertainty on object boundaries and for objects far from the camera]. .bold[Epistemic uncertainty accounts for our ignorance about which model generated our collected data]. In (e) our model exhibits increased epistemic uncertainty for semantically and visually challenging pixels. The bottom row shows a failure case of the segmentation model when the model fails to segment the footpath due to increased epistemic uncertainty, but not aleatoric uncertainty."] .citation[A. Kendall and Y. Gal, What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, NeurIPS 2017.] --- class: middle, center .bigger[Measuring the quality of the uncertainty can be challenging due to **lack of ground truth**, i.e., no “right answer” in some cases ] --- class: middle, center # MC-Dropout --- # Dropout - First "deep" regularization technique - Remove units at random during the forward pass on each sample - Put them all back during test .center.width-60[] .citation.tiny[Dropout: A Simple Way to Prevent Neural Networks from Overfitting, Srivastava et al., JMLR 2014] --- # Dropout ## Interpretation - Reduces the network dependency to individual neurons and distributes representation - More redundant representation of data ## Ensemble interpretation - Equivalent to training a large ensemble of shared-parameters, binary-masked models - Each model is only trained on a single data point - __A network with dropout can be interpreted as an ensemble of $2^N$ models with heavy weight sharing__ (Goodfellow et al., 2013) --- # Dropout .grid[ .kol-1-12[ ] .kol-10-12[ ```py >>> x = torch.full((3, 5), 1.0).requires_grad_() >>> x tensor([[ 1., 1., 1., 1., 1.], [ 1., 1., 1., 1., 1.], [ 1., 1., 1., 1., 1.]]) >>> dropout = nn.Dropout(p = 0.75) >>> y = dropout(x) >>> y tensor([[ 0., 0., 4., 0., 4.], [ 0., 4., 4., 4., 0.], [ 0., 0., 4., 0., 0.]]) >>> l = y.norm(2, 1).sum() >>> l.backward() >>> x.grad tensor([[ 0.0000, 0.0000, 2.8284, 0.0000, 2.8284] [ 0.0000, 2.3094, 2.3094, 2.3094, 0.0000] [ 0.0000, 0.0000, 4.0000, 0.0000, 0.0000]]) ``` ] ] --- # Dropout .grid[ .kol-1-12[ ] .kol-10-12[ ```py >>> x = torch.full((3, 5), 1.0).requires_grad_() >>> x tensor([[ 1., 1., 1., 1., 1.], [ 1., 1., 1., 1., 1.], [ 1., 1., 1., 1., 1.]]) >>> dropout = nn.Dropout(p = 0.75) >>> y = dropout(x) *>>> y *tensor([[ 0., 0., 4., 0., 4.], * [ 0., 4., 4., 4., 0.], * [ 0., 0., 4., 0., 0.]]) >>> l = y.norm(2, 1).sum() >>> l.backward() >>> x.grad tensor([[ 0.0000, 0.0000, 2.8284, 0.0000, 2.8284] [ 0.0000, 2.3094, 2.3094, 2.3094, 0.0000] [ 0.0000, 0.0000, 4.0000, 0.0000, 0.0000]]) ``` ] ] --- # Dropout For a given network .grid[ .kol-1-12[ ] .kol-10-12[ ```py model = nn.Sequential(nn.Linear(10, 100), nn.ReLU(), nn.Linear(100, 50), nn.ReLU(), nn.Linear(50, 2)); ``` ] ] -- we can simply add dropout layers .grid[ .kol-1-12[ ] .kol-10-12[ ```py model = nn.Sequential(nn.Linear(10, 100), nn.ReLU(), * nn.Dropout(), nn.Linear(100, 50), nn.ReLU(), * nn.Dropout(), nn.Linear(50, 2)); ``` ] ] --- # Dropout A model using dropout has to be set in __train__ or __test__ mode --- # Dropout A model using dropout has to be set in __train__ or __test__ mode The method `nn.Module.train(mode)` recursively sets the flag `training` to all sub-modules. .grid[ .kol-1-12[ ] .kol-10-12[ ```py >>> dropout = nn.Dropout() >>> model = nn.Sequential(nn.Linear(3, 10), dropout, nn.Linear(10, 3)) >>> dropout.training True >>> model.train(False) Sequential ( (0): Linear (3 -> 10) (1): Dropout (p = 0.5) (2): Linear (10 -> 3) ) >>> dropout.training False ``` ] ] --- # Dropout A model using dropout has to be set in __train__ or __test__ mode .grid[ .kol-1-12[ ] .kol-10-12[ ```py >>> dropout = nn.Dropout() >>> model = nn.Sequential(nn.Linear(3, 10), dropout, nn.Linear(10, 3)) >>> x = torch.full((1, 3), 1.0) *>>> model.train() Sequential ( (0): Linear (3 -> 10) (1): Dropout (p = 0.5) (2): Linear (10 -> 3) ) >>> model(x) *tensor([[ 0.5360, -0.5225, -0.5129]], grad_fn=<ThAddmmBackward>) >>> model(x) *tensor([[ 0.6134, -0.6130, -0.5161]], grad_fn=<ThAddmmBackward>) ``` ] ] --- # Dropout A model using dropout has to be set in __train__ or __test__ mode .grid[ .kol-1-12[ ] .kol-10-12[ ```py >>> dropout = nn.Dropout() >>> model = nn.Sequential(nn.Linear(3, 10), dropout, nn.Linear(10, 3)) >>> x = torch.full((1, 3), 1.0) >>> model.train() Sequential ( (0): Linear (3 -> 10) (1): Dropout (p = 0.5) (2): Linear (10 -> 3) ) >>> model(x) tensor([[ 0.5360, -0.5225, -0.5129]], grad_fn=<ThAddmmBackward>) >>> model(x) tensor([[ 0.6134, -0.6130, -0.5161]], grad_fn=<ThAddmmBackward>) >>> *>>> model.eval() Sequential ( (0): Linear (3 -> 10) (1): Dropout (p = 0.5) (2): Linear (10 -> 3) ) >>> model(x) *tensor([[ 0.5772, -0.0944, -0.1168]], grad_fn=<ThAddmmBackward>) >>> model(x) *tensor([[ 0.5772, -0.0944, -0.1168]], grad_fn=<ThAddmmBackward>) ``` ] ] --- class: middle ## How can we get uncertainties from standard networks? .left-column[ ### .center[Standard Neural Network] .center.width-80[] ] .right-column[ ### .center[Bayesian Neural Network] .center.width-80[] ] .reset-column[ ] .citation[Dropout as Bayesian approximation: representing model uncertainty in deep learning, Y. Gal, ICML 2016] --- class: middle .grid[ .kol-1-2[ ### .center[Standard Neural Network] .center.width-100[] ] .kol-1-2[ ### .center[Bayesian Neural Network] .center.width-100[] ] ] .credit[Image credit: Eric Ma] --- class: middle ## From Bayesian Neural Networks to Dropout Gal and Ghahramani build upon the ensembling view of Dropout and show that when **training a network with dropout** with a standard classification or regression objective, one *is actually implicitly doing variational inference* to match the posterior distribution of the weights. .center.width-40[] --- class: middle ## Uncertainty estimates from dropout Proper epistemic uncertainty estimates at $\mathbf{x}$ can be obtained in a principled way using Monte-Carlo integration: - Draw $T$ sets of network parameters $\hat{\theta}\_t$ from $q(\theta;\nu)$. - Compute the predictions for the $T$ networks, $\\{ f(\mathbf{x};\hat{\theta}\_t) \\}\_{t=1}^T$. - Approximate the predictive mean and variance as follows: $$ \begin{aligned} \mathbb{E}\_{p(y|\mathbf{x},\mathbf{X},\mathbf{Y})}\left[y\right] &\approx \frac{1}{T} \sum\_{t=1}^T f(\mathbf{x};\hat{\theta}\_t) \\\\ \mathbb{V}\_{p(y|\mathbf{x},\mathbf{X},\mathbf{Y})}\left[y\right] &\approx \sigma^2 + \frac{1}{T} \sum\_{t=1}^T f(\mathbf{x};\hat{\theta}\_t)^2 - \hat{\mathbb{E}}\left[y\right]^2 \end{aligned} $$ --- class: middle, center .center.width-60[] Yarin Gal's [demo](http://mlg.eng.cam.ac.uk/yarin/blog_3d801aa532c1ce.html). --- class: middle ## Uncertainty estimates from dropout .grid[ .kol-6-12[ ```line-numbers class SimpleModel(nn.Module): def __init__(self, p, decay): super(SimpleModel, self).__init__() self.dropout_p = p self.decay = decay self.f = nn.Sequential( nn.Linear(1,20), nn.ReLU(), nn.Dropout(p=self.dropout_p), nn.Linear(20, 20), nn.ReLU(), nn.Dropout(p=self.dropout_p), nn.Linear(20,1) ) def forward(self, X): return self.f(X) ``` ] .kol-6-12[ ```line-numbers def uncertainty_estimate(X, model, iters=200, l2=0.01): model.train() outputs = np.hstack([model(X[:, np.newaxis]).data.numpy() \ for i in range(iters)]) y_mean = outputs.mean(axis=1) y_variance = outputs.var(axis=1) tau = l2 * (1. - model.dropout_p) / (2. * N * model.decay) y_variance += (1. / tau) y_std = np.sqrt(y_variance) return y_mean, y_std ``` ] ] --- class: middle ## Results .center.width-80[] .citation[Y. Gal, Dropout as Bayesian approximation: representing model uncertainty in deep learning, ICML 2016] --- class: middle ## Results .center.width-80[] .citation[Y. Gal, Dropout as Bayesian approximation: representing model uncertainty in deep learning, ICML 2016] --- class: middle ## Pixel-wise depth regression .center.width-55[] .citation[A. Kendall and Y. Gal, What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, NeurIPS 2017.] --- class: middle ## Combinining heteroscedastic and epistemic uncertainty .center.width-80[] .caption[Semantic Segmentation performance on CamVid] .citation[What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, A. Kendall and Y. Gal, NeurIPS 2017] --- class: middle ## Combinining heteroscedastic and epistemic uncertainty .center[Monocular Depth Regression Performance] .center.width-80[] .citation[What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, A. Kendall and Y. Gal, NeurIPS 2017] --- class: middle ## Comparing heteroscedastic and epistemic uncertainty .center[Aleatoric vs. Epistemic Uncertainty for Out of Dataset Examples] .center.width-60[] - Aleatoric uncertainty remains constant while epistemic uncertainty increases for out of dataset examples! .citation[What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?, A. Kendall and Y. Gal, NeurIPS 2017] --- class: middle ## Applications Multiple follow-up papers by Gal and friends: - __Concrete Dropout__ : learn Dropout probability for each layer using Concrete/ Gumble-Softmax trick - __Active Learning with MC Dropout__: select samples using uncertainty - __MC Dropout for RNNs__: same dropout mask across time-steps - __Data efficiency in RL__ - Stochasticity via __BatchNorm__ perturbation